@GaelVaroquaux: neural networks, tabular data, uncertainty, active learning, atomistic ML, learning theory.

https://dholzmueller.github.io

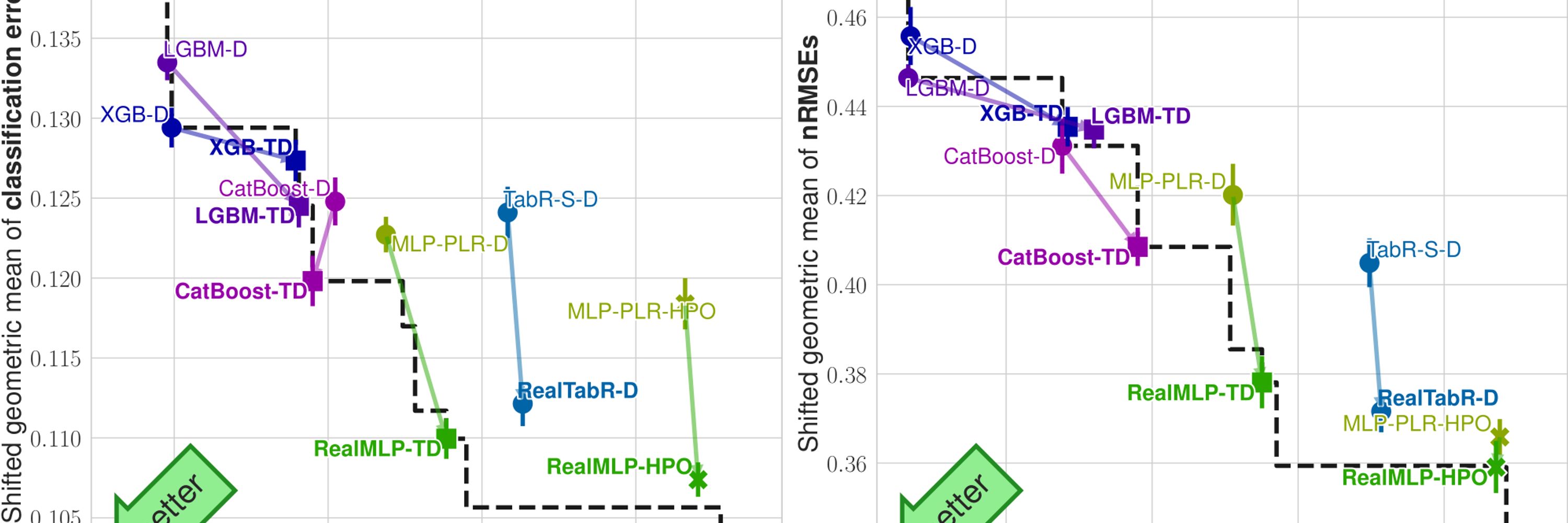

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

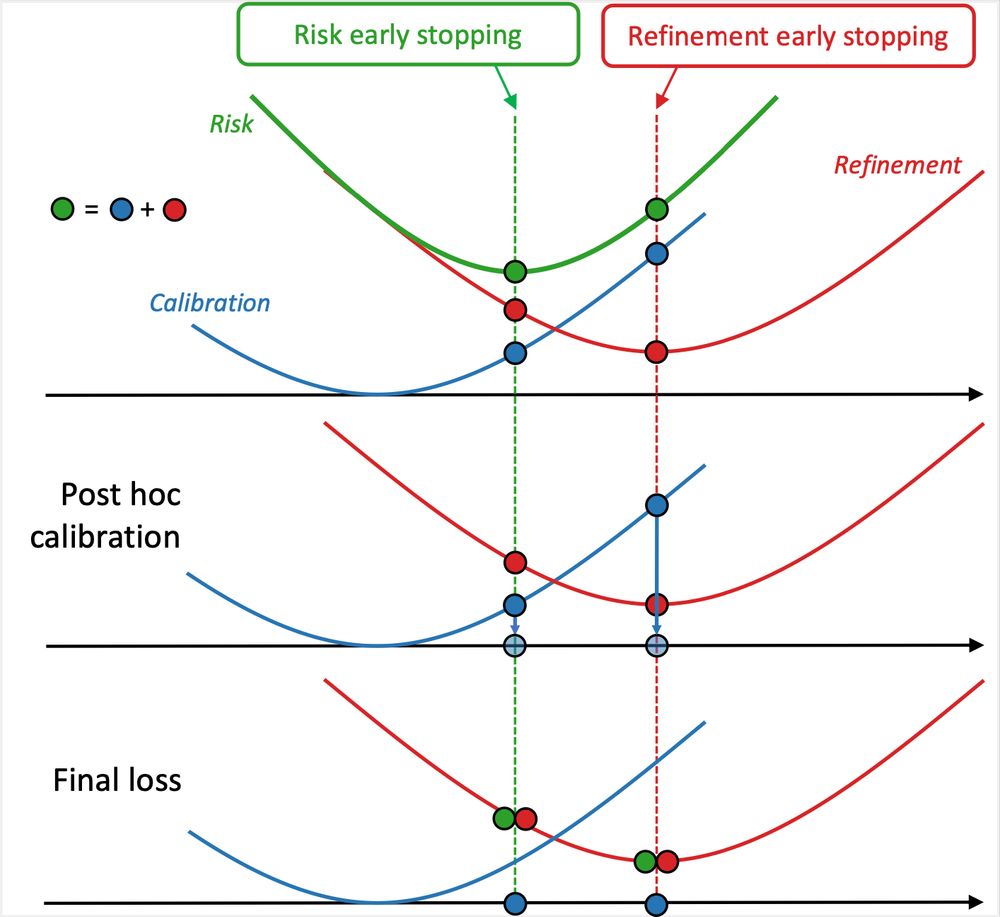

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we argue that with well-designed regularization, more expressive models like matrix scaling can outperform simpler ones across calibration set sizes, data dimensions, and applications.

My solution is short (48 LOC) and relatively general-purpose – I used skrub to preprocess string and date columns, and pytabkit to create an ensemble of RealMLP and TabM models. Link below👇

🚀 Major update! Skrub DataOps, various improvements for the TableReport, new tools for applying transformers to the columns, and a new robust transformer for numerical features are only some of the features included in this release.

With Jingang Qu, @dholzmueller.bsky.social, and Marine Le Morvan

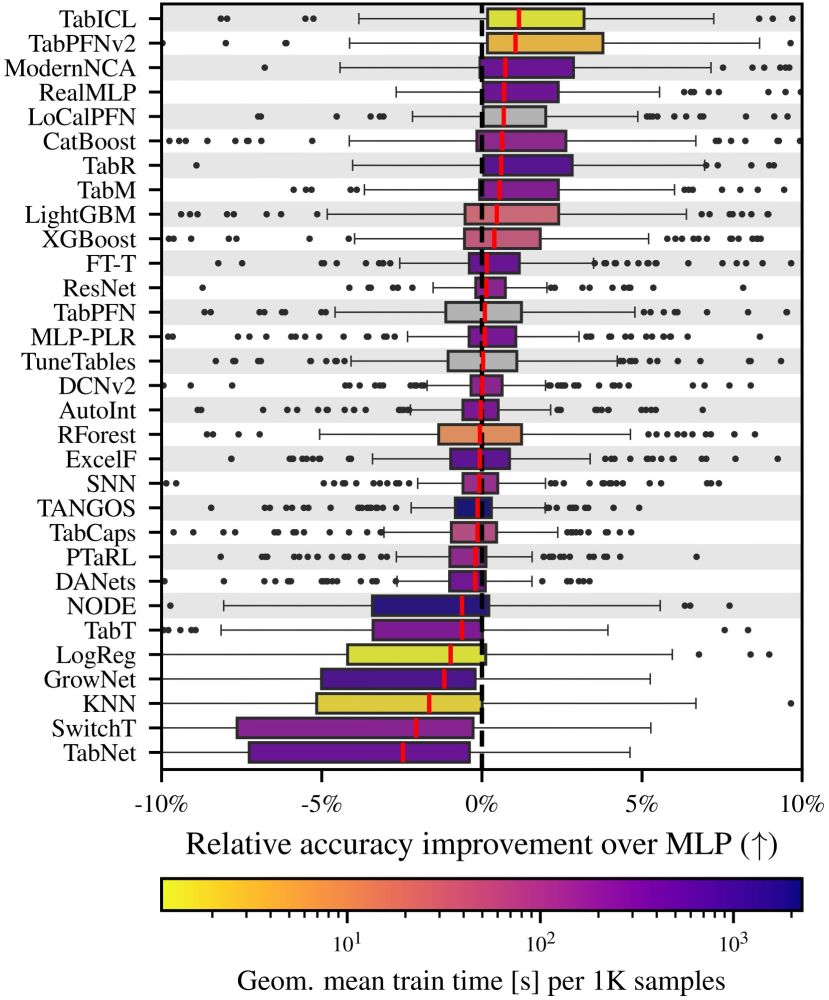

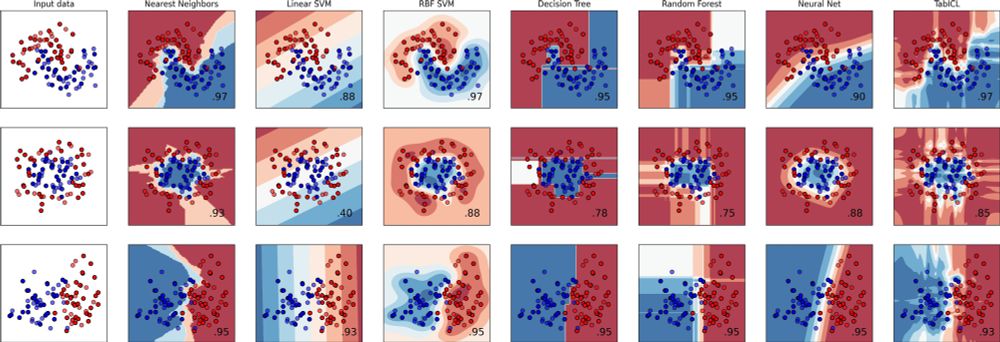

TL;DR: a well-designed architecture and pretraining give the best tabular learner, and a more scalable one

On top of that, it's 100% open source

1/9

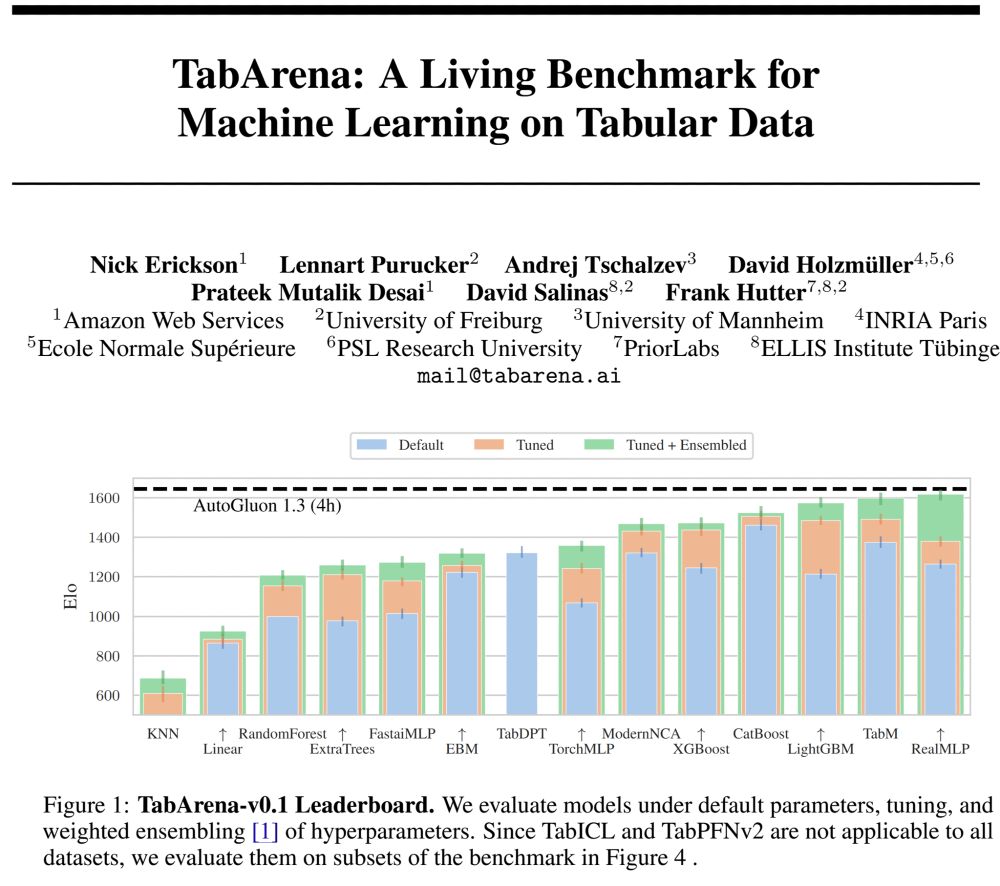

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

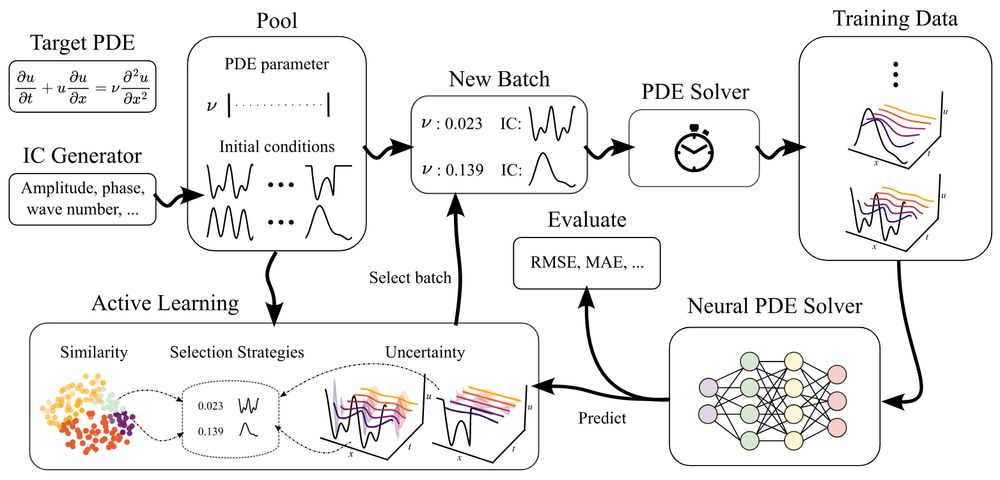

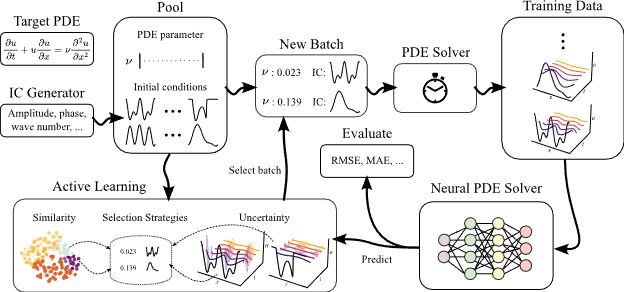

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures and many ablations - transferability, speed, etc.!

✅ Filter columns

🔎 Look at each column's distribution

📊 Get a high-level view of the distributions through stats and plots, including correlated columns

🌐 Export the report as HTML

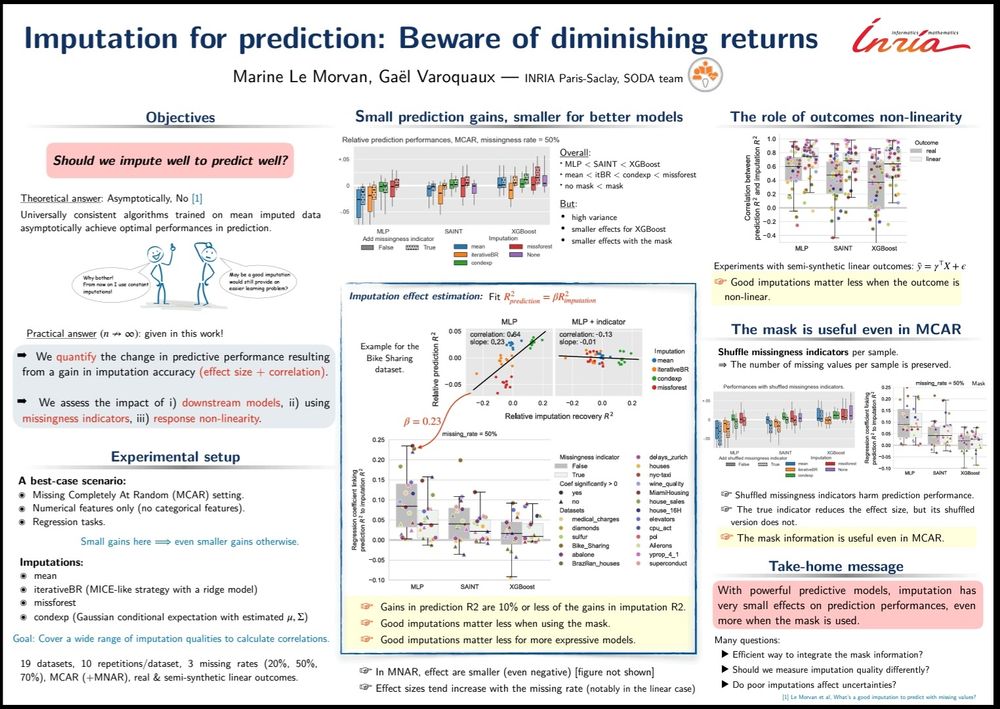

arxiv.org/abs/2407.19804

Concludes 6 years of research on prediction with missing values: Imputation is useful but improvements are expensive, while better learners yield easier gains.

Who: Marine Le Morvan, Inria (in-person)

When: Friday 11 April 4-5pm (+drinks)

Where: L3.36 Lab42 Science Park / Zoom

trl-lab.github.io/trl-seminar/

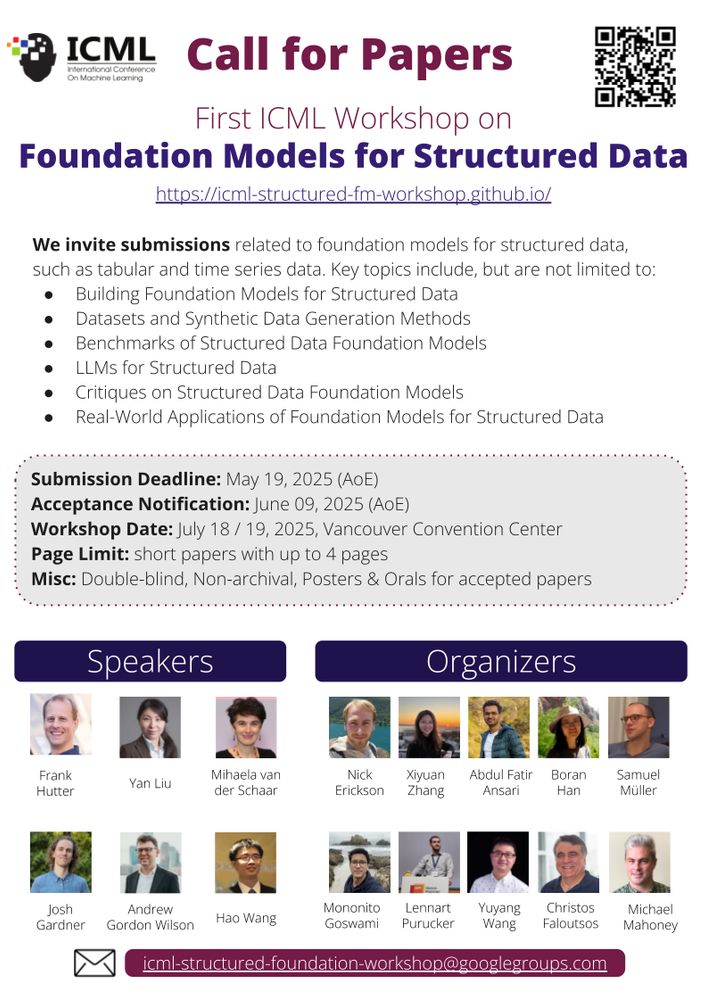

Call for Papers: icml-structured-fm-workshop.github.io

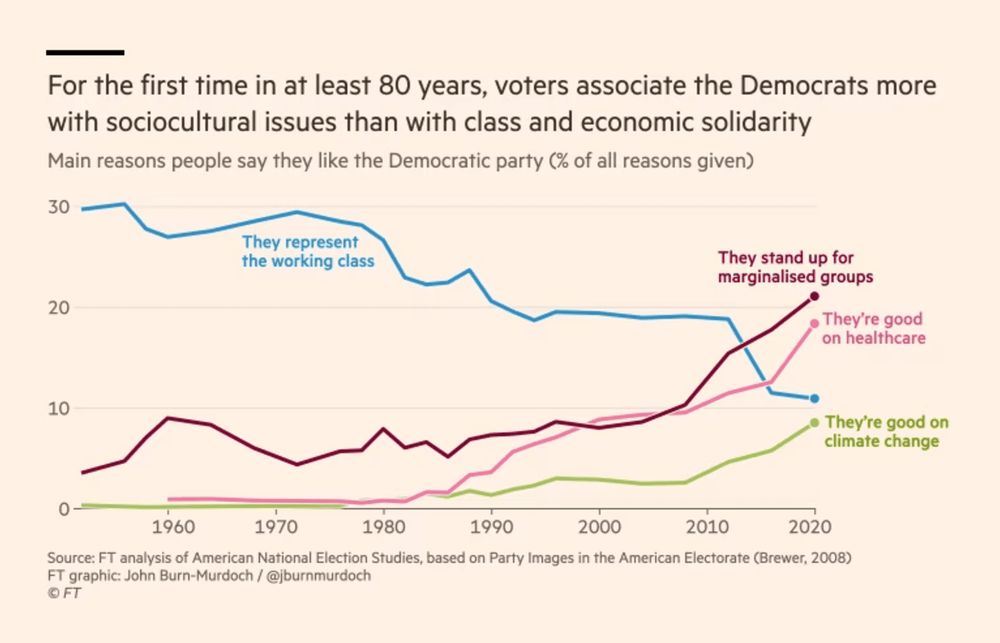

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

📝LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators

📷 Join us on April 27 at #ICLR2025!

#AI #ML #ICLR #AI4Science

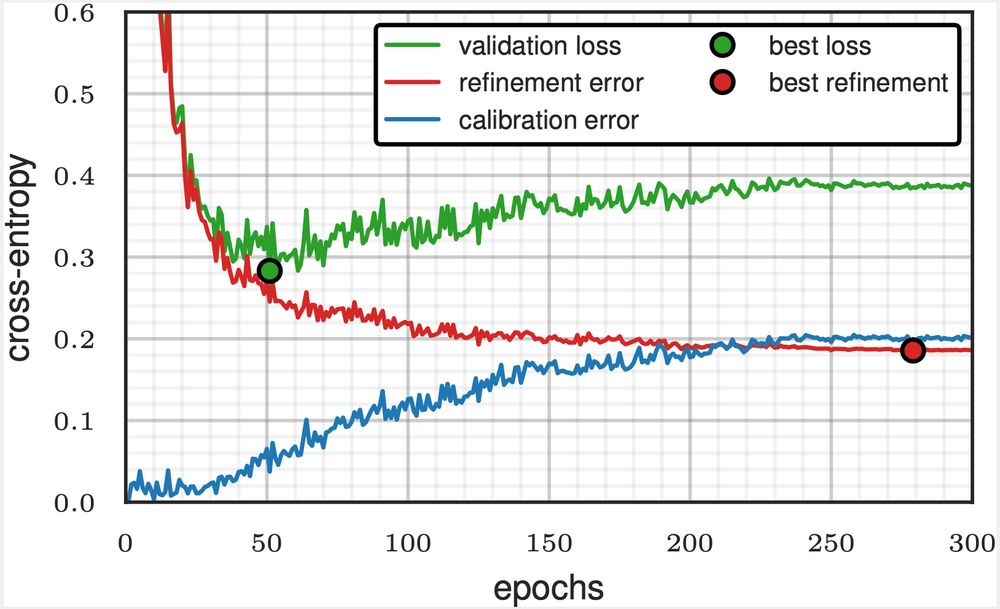

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

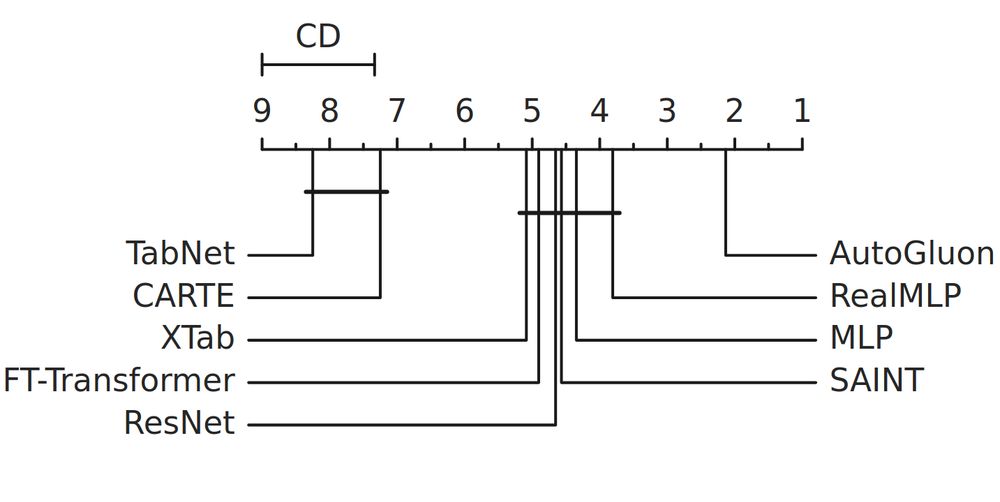

On a recent 300-dataset benchmark with many baselines, RealMLP takes a shared first place overall. 🔥

Importantly, RealMLP is also relatively CPU-friendly, unlike other SOTA DL models (including TabPFNv2 and TabM). 🧵 1/

sites.google.com/view/rl-and-...

Inspiring talks by @eisenjulian.bsky.social, @neuralnoise.com, Frank Hutter, Vaishali Pal, TBC.

We welcome extended abstracts until 31 Jan!

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

I was happy to be a co-advisor on this project - most of the credit goes to Daniel and Marimuthu.
