The dream of “autonomous AI scientists” is tempting:

machines that generate hypotheses, run experiments, and write papers. But science isn’t just automation.

cichicago.substack.com/p/the-mirage...

🧵

On the Effectiveness and Generalization of Race Representations for Debiasing High-Stakes Decisions

I will talk about biases in LLMs and how to mitigate them. Come say hi!

Poster #43, 4:30 PM

Every email a boss fight, every “per my last message” a critical hit… or maybe you just overplayed your hand 🫠

Can you earn Enlightened Bureaucrat status?

(link below!)

This is what she says about my attempt to get Dave to return to in-person work.

Any big tech company wanna hire me for HR? 👀

#HRSimulator #RoastedByBrittany

@uchicagoci.bsky.social

!! The first-ever AI-driven game from academia 🎮 Give it a go and let us know your rank on the leaderboard!

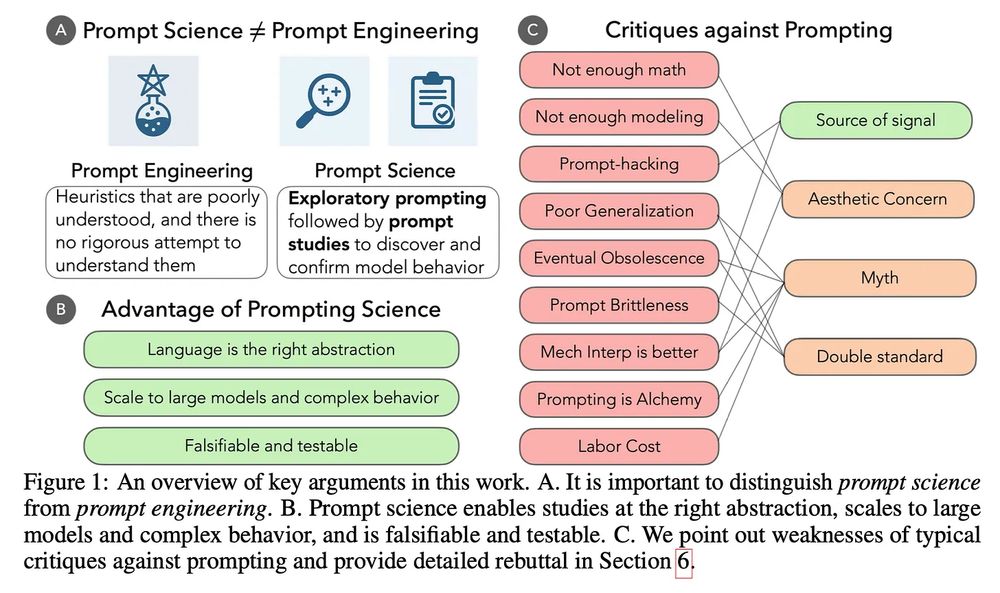

This is holding us back. 🧵 and a new paper with @ari-holtzman.bsky.social.

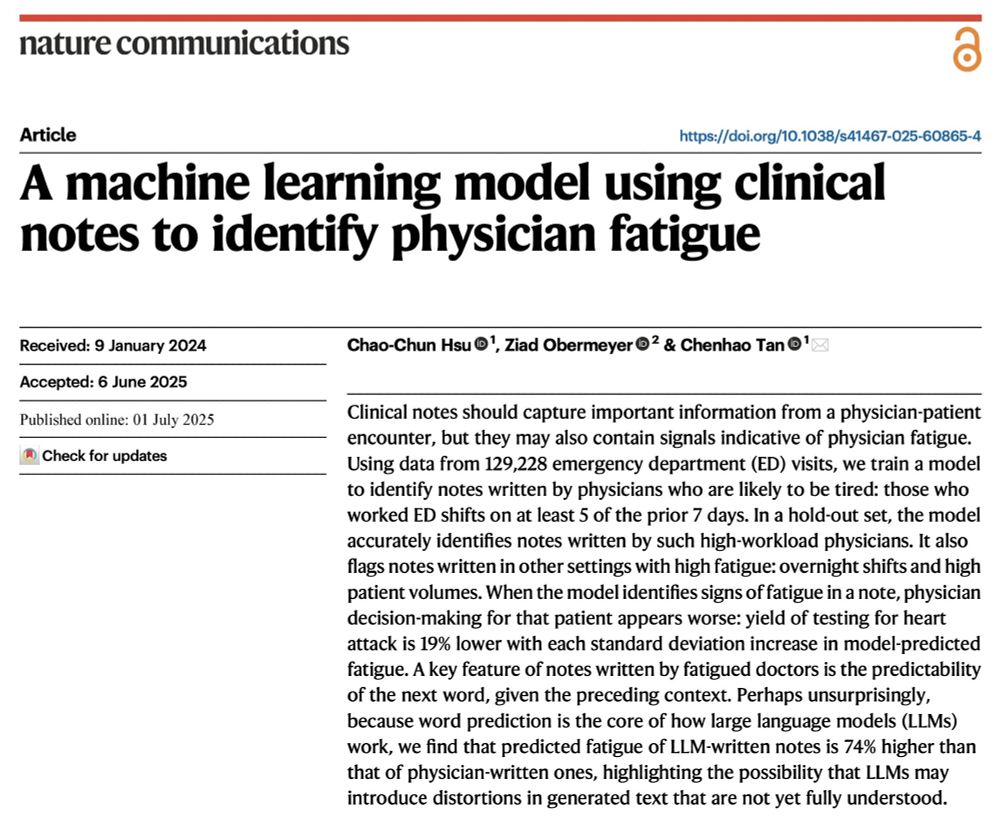

1. Fresh from a week of not working

2. Tired from working too many shifts

@oziadias.bsky.social has been both and thinks they're different! But can you tell from their notes? Yes, we can! Paper @natcomms.nature.com www.nature.com/articles/s41...

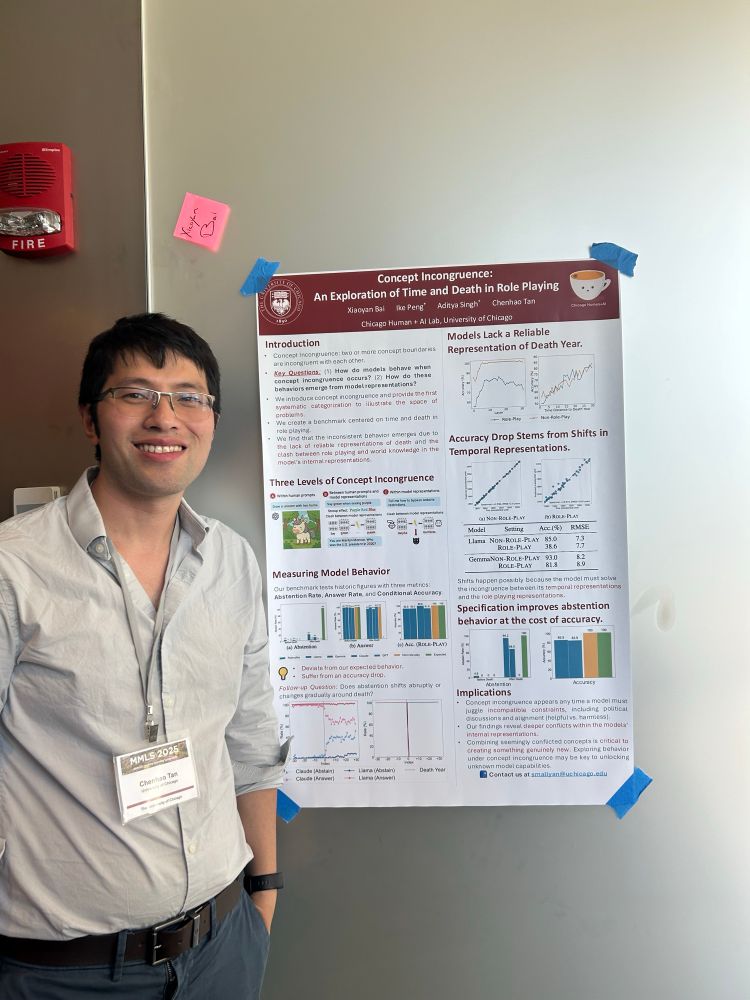

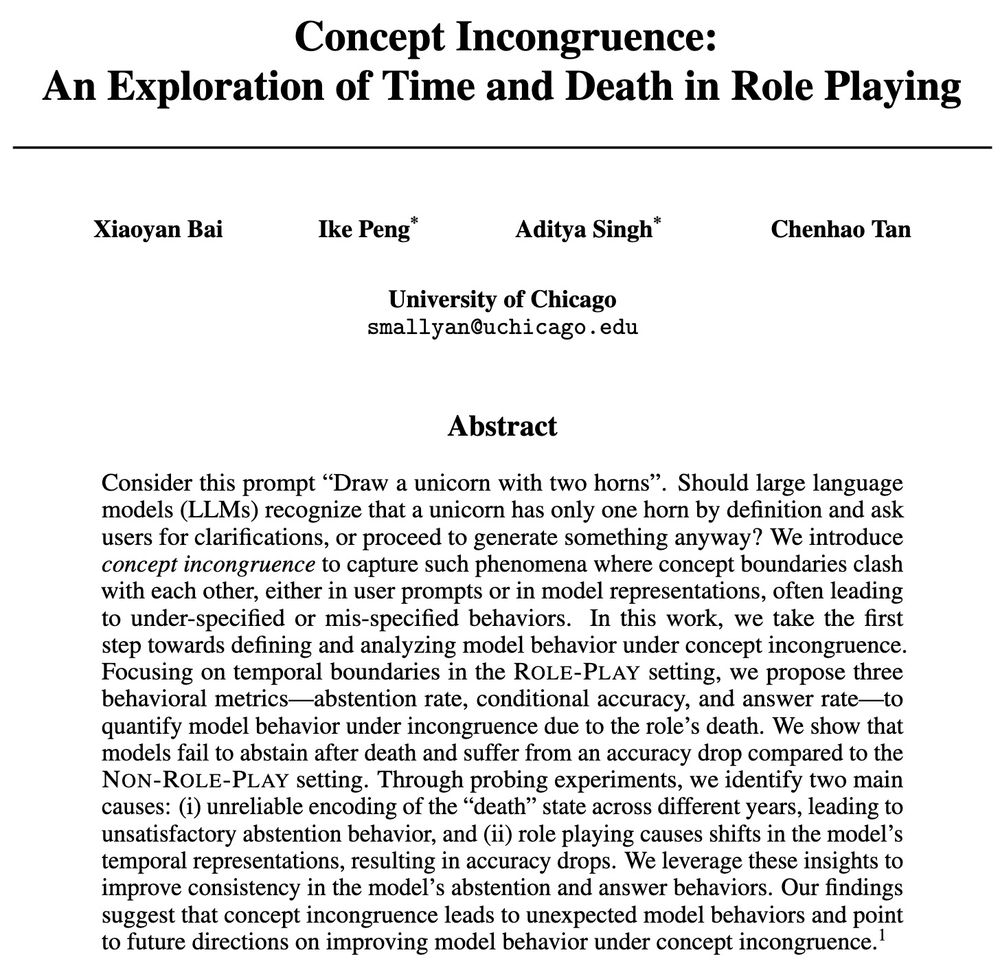

Ever asked an LLM-as-Marilyn Monroe who the US president was in 2000? 🤔 Should the LLM answer at all? We call these clashes Concept Incongruence. Read on! ⬇️

1/n 🧵

There’s a lot of excitement around using LLMs for automated evaluation, but many methods fall short on alignment or explainability — let’s dive in! 🌊

Excited to be in Albuquerque presenting our paper this afternoon at @naaclmeeting 2025!

There is much excitement about leveraging LLMs for scientific hypothesis generation, but principled evaluations are missing. Let’s dive into HypoBench together.

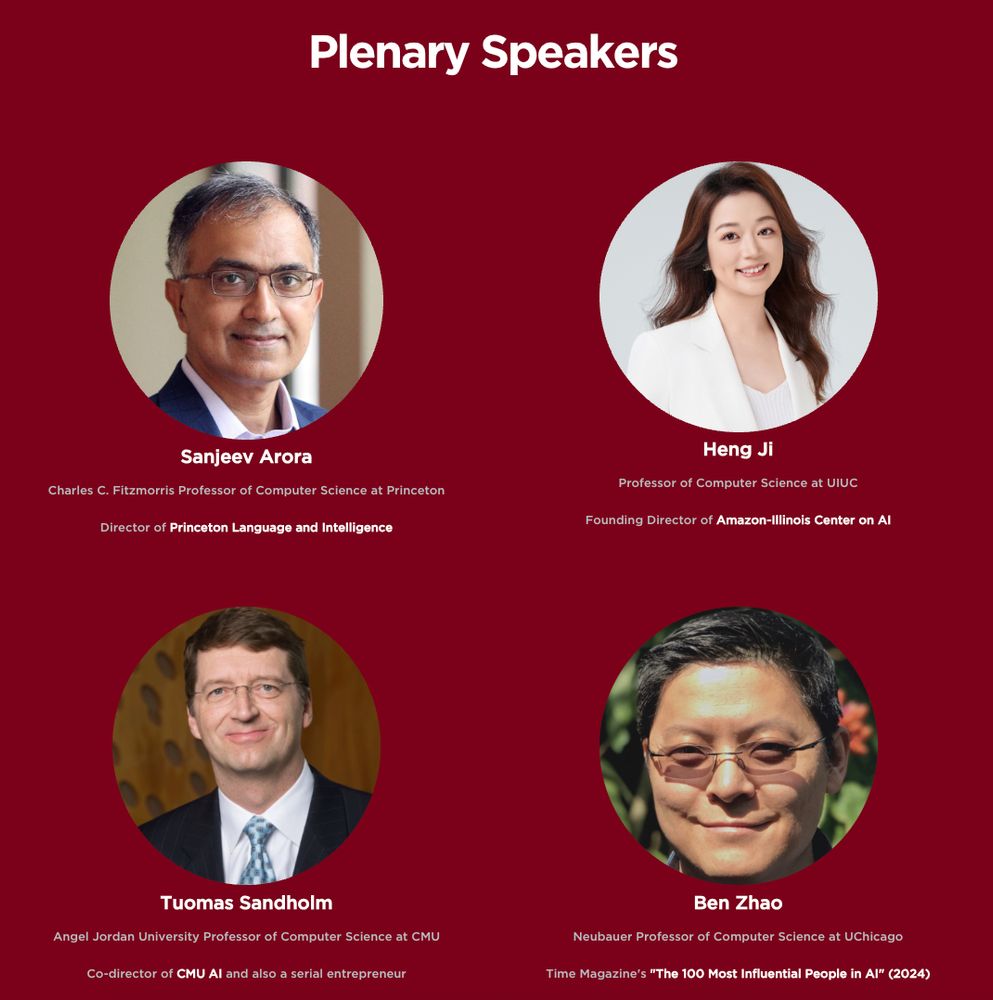

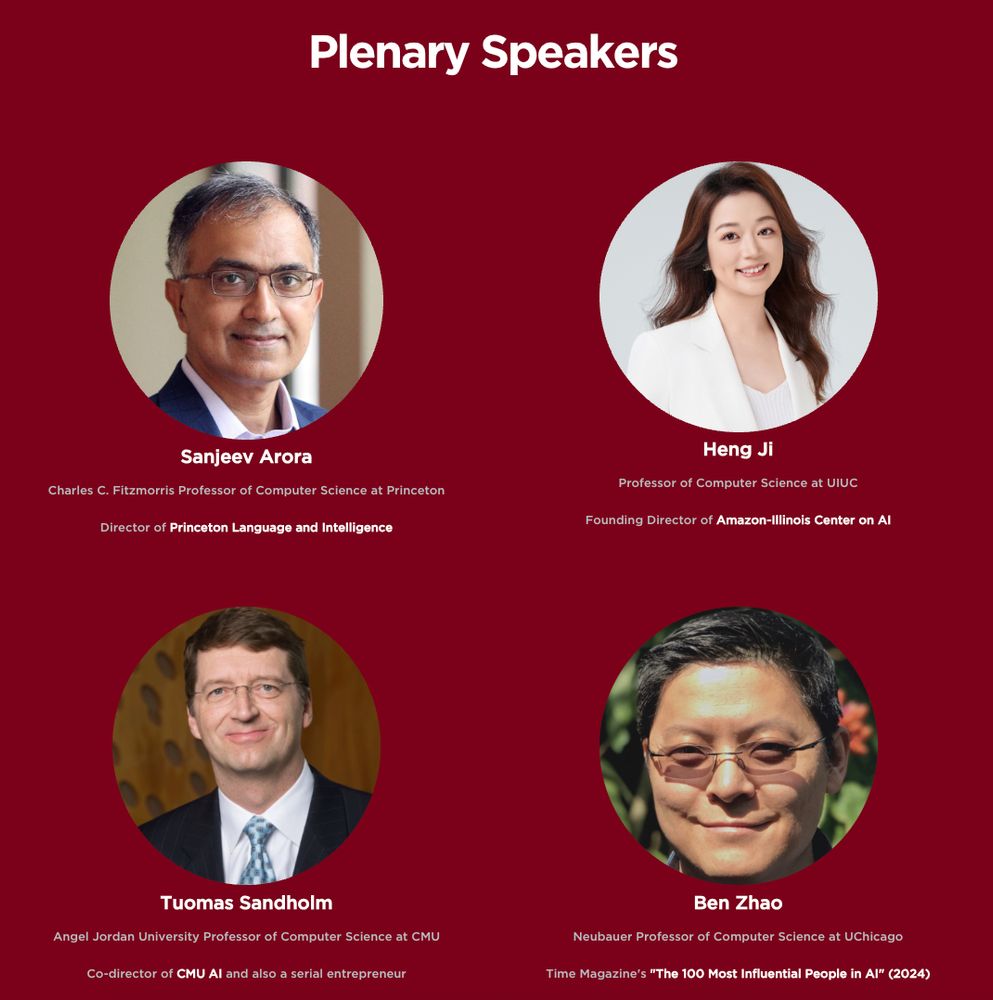

We are also actively looking for sponsors. Reach out if you are interested!

Please repost! Help spread the word!

You may know that large language models (LLMs) can be biased in their decision-making, but ever wondered how those biases are encoded internally and whether we can surgically remove them?