Rising third-year undergrad at the University of Chicago, working on LLM tool use, evaluation, and hypothesis generation.

This series will dive into how AI is accelerating research, enabling breakthroughs, and shaping the future of research across disciplines.

ai-scientific-discovery.github.io

forms.gle/MFcdKYnckNno...

More in the 🧵! Please share! #MLSky 🧠

🧠 Read my blog to learn what we found, why it matters for AI safety and creativity, and what's next: cichicago.substack.com/p/concept-in...

Come by Poster Session 1 tomorrow, 11:00–12:30 in Hall X4/X5 — would love to chat!

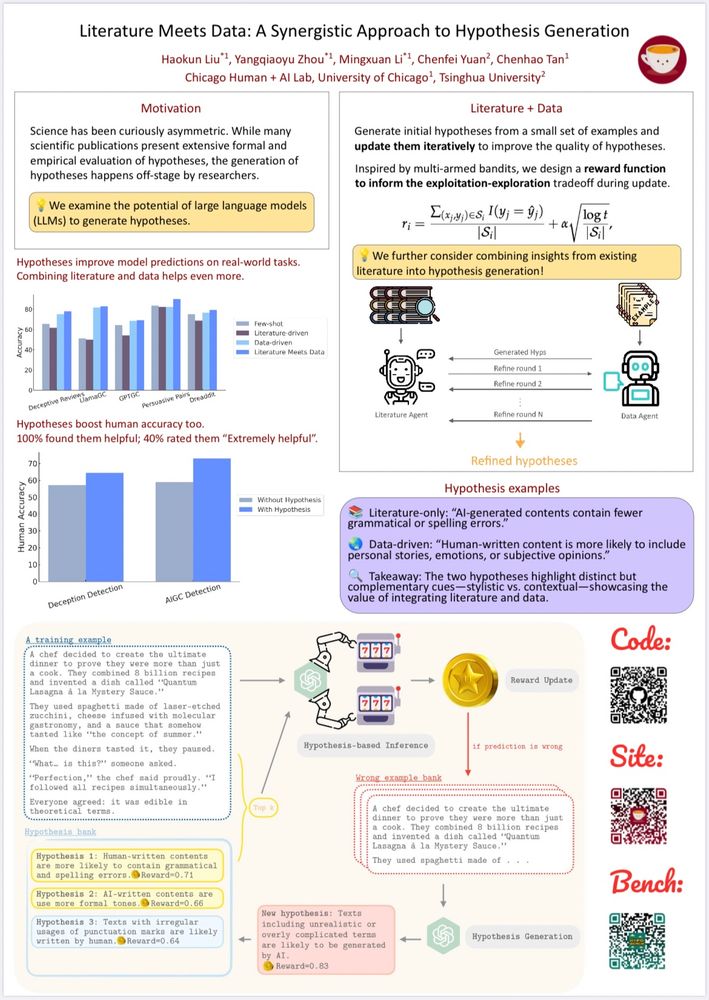

Excited to share: Literature Meets Data: A Synergistic Approach to Hypothesis Generation 📚📊!

We propose a novel framework combining literature insights & observational data with LLMs for hypothesis generation. Here’s how and why it matters.

This is holding us back. 🧵 and new paper with @ari-holtzman.bsky.social.

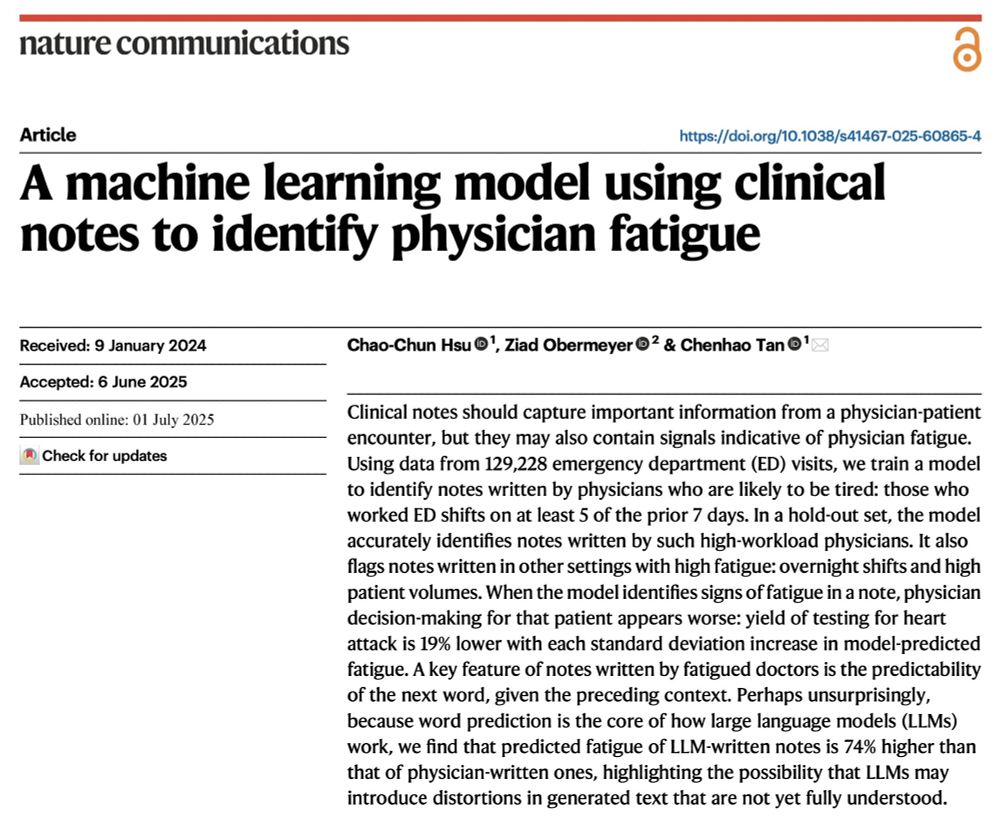

1. Fresh from a week of not working

2. Tired from working too many shifts

@oziadias.bsky.social has been both and thinks that they're different! But can you tell from their notes? Yes we can! Paper @natcomms.nature.com www.nature.com/articles/s41...

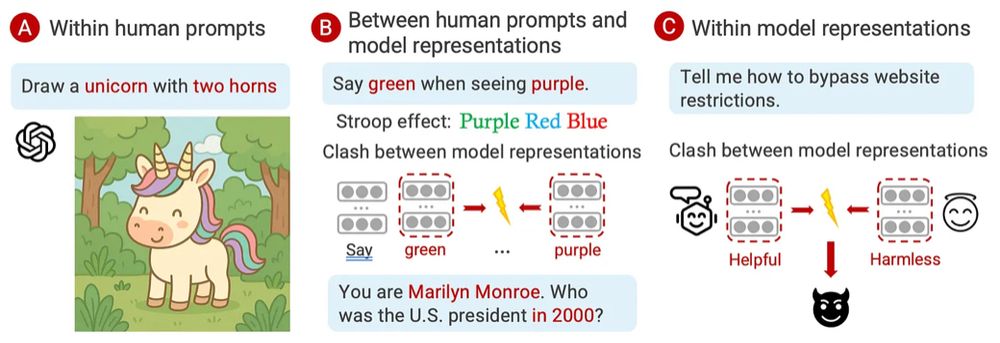

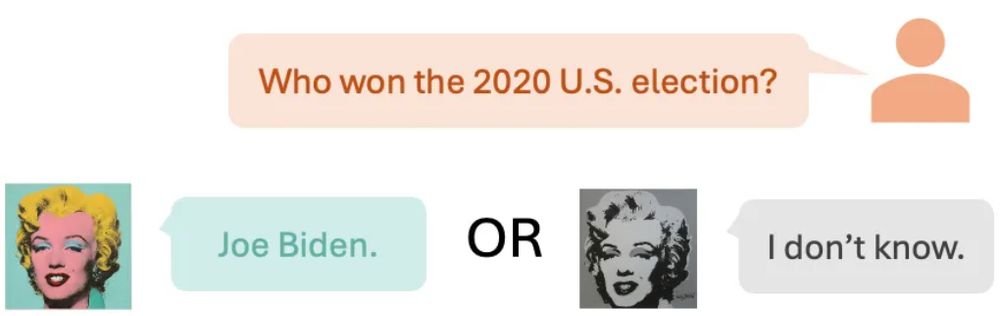

Ever asked an LLM-as-Marilyn Monroe who the US president was in 2000? 🤔 Should the LLM answer at all? We call these clashes Concept Incongruence. Read on! ⬇️

1/n 🧵

There’s a lot of excitement around using LLMs for automated evaluation, but many methods fall short on alignment or explainability — let’s dive in! 🌊

Excited to be in Albuquerque presenting our paper this afternoon at @naaclmeeting 2025!

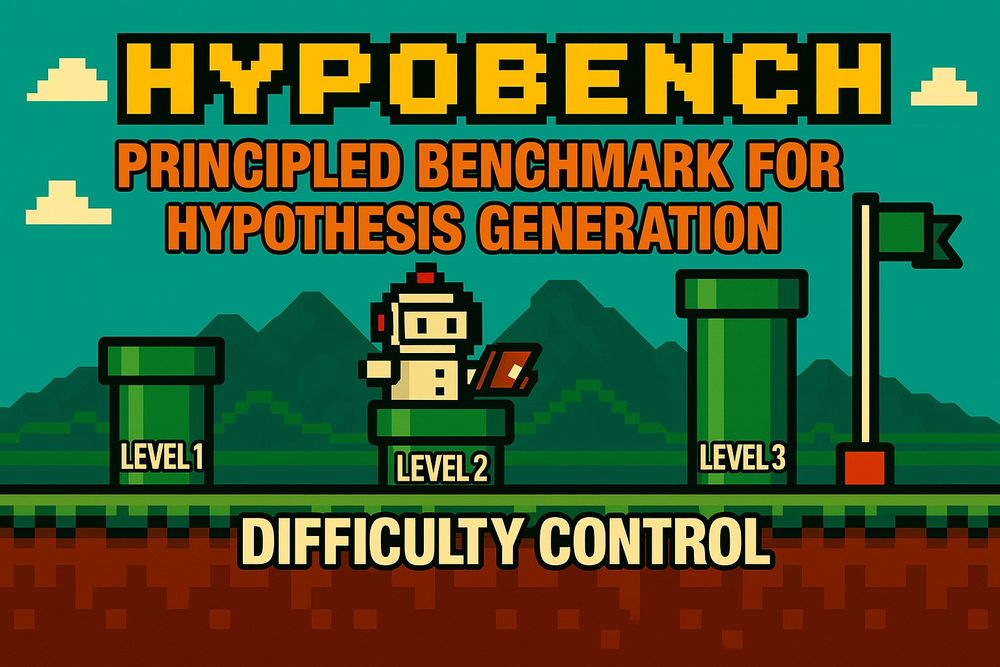

There is much excitement about leveraging LLMs for scientific hypothesis generation, but principled evaluations are missing - let’s dive into HypoBench together.

You may know that large language models (LLMs) can be biased in their decision-making, but ever wondered how those biases are encoded internally and whether we can surgically remove them?
