My work focuses on learning dynamics in biologically plausible neural networks. #NeuroAI

We show that in sign-diverse networks, inherent non-gradient “curl” terms arise, and can, depending on network architecture, destabilize gradient-descent solutions or paradoxically accelerate learning beyond pure gradient flow.

🧵⬇️

www.arxiv.org/abs/2510.02765

(1/6)

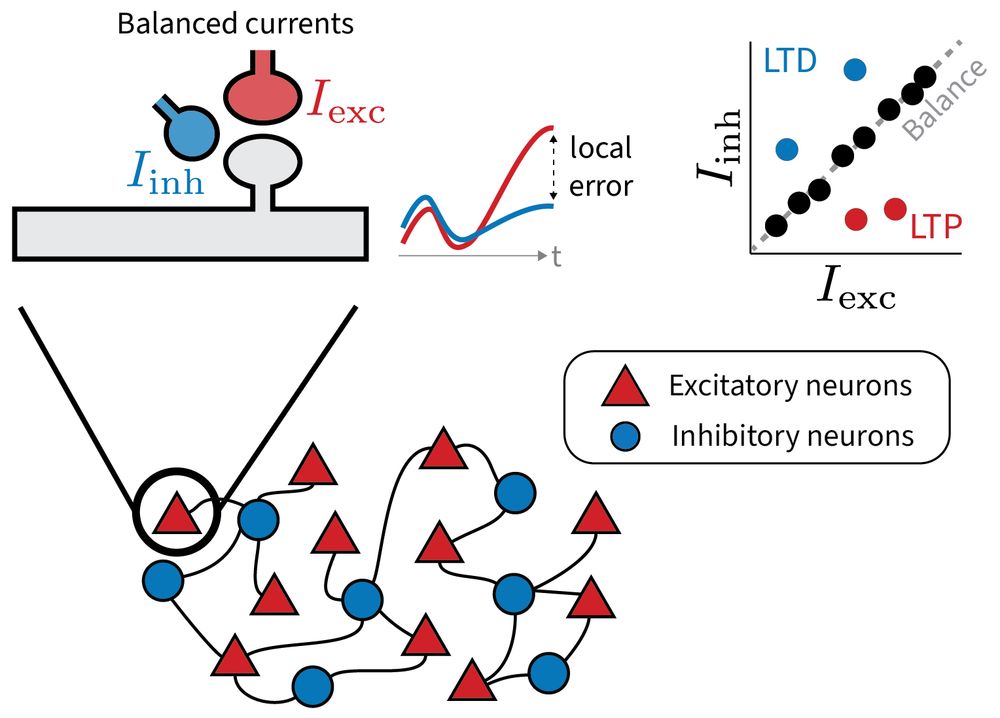

Our new preprint explores a provocative idea: small, targeted deviations from this balance may serve a purpose, namely to encode local error signals for learning.

www.biorxiv.org/content/10.1...

led by @jrbch.bsky.social

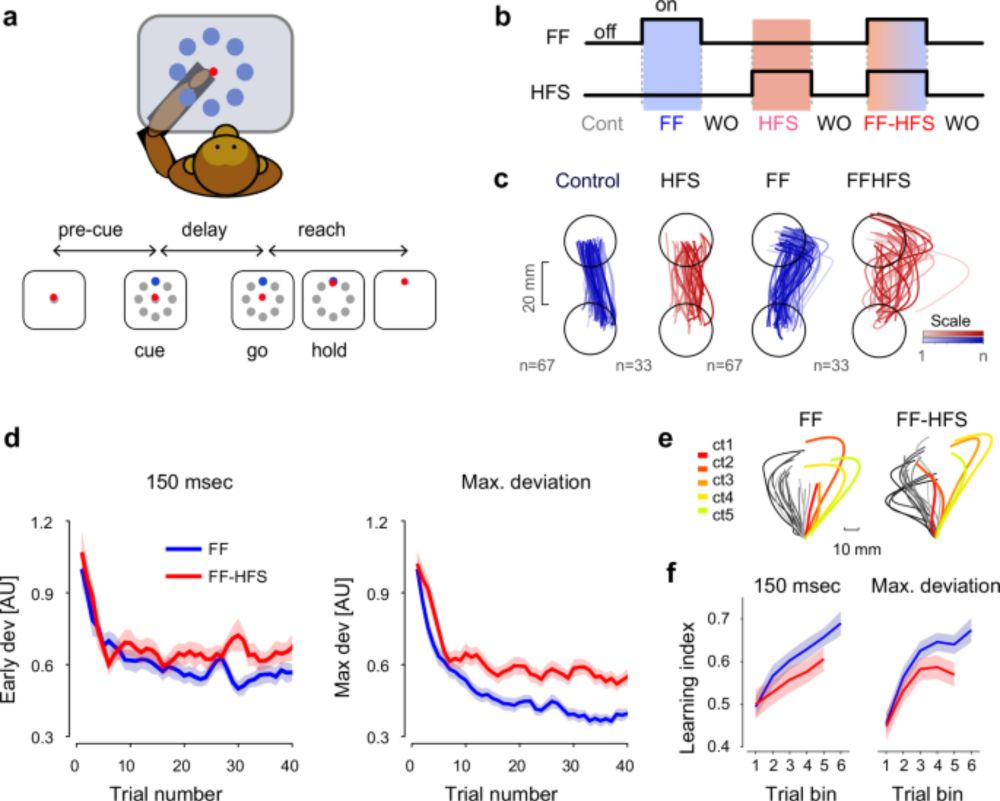

1/n Thrilled to share our latest research, now published in Nature Communications! 🎉 This study dives deep into how the cerebellum shapes cortical preparatory activity during motor adaptation.

www.nature.com/articles/s41...

#neuroskyence #motorcontrol #cerebellum #motoradaptation

Let me know if you want to be added (the 'from' can include those not in Paris anymore) or just tap in if you want to know what we're talking about!

Regardless, please re-tweet!

go.bsky.app/3Zs9w5w

I created a starter pack! Please comment if you work in this field and are not on the list yet 🙂

go.bsky.app/CscFTAr
