We created a bimanual iBCI that enabled simultaneous neural control of two cursors on the first day of use.

www.bci-award.com/Home

Check it out! 👉 https://sciencedirect.com/science/article/pii/S2211124725010125

🔗 All positions: ki.se/en/about-ki/...

Apple: 28%

Google: 25%

Microsoft: 34%

Elsevier: 37% with a revenue of $3.9 billion.

Elsevier's payment to academic authors and reviewers: $0

www.nporadio1.nl/fragmenten/d...

www.nature.com/articles/s42...

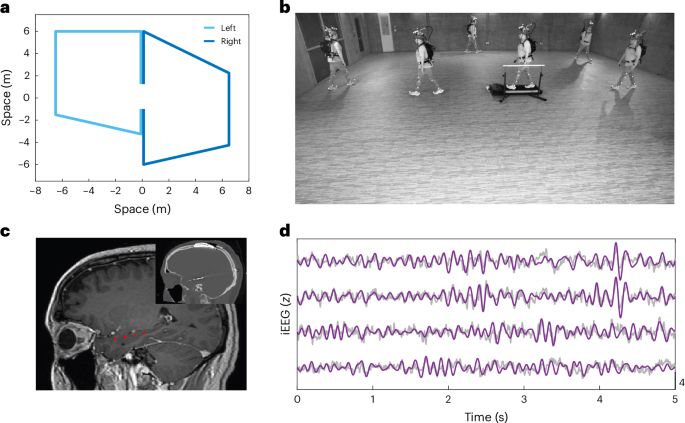

A dream study of mine for nearly 20 yrs not possible until now thanks to NIH 🧠 funding & 1st-author lead @seeber.bsky.social

We tracked hippocampal activity as people walked memory-guided paths & imagined them again. Did brain patterns reappear?🧵👇

www.nature.com/articles/s41...

Please RT for reach! 🙏🙏
