Understanding deep learning (generalization, calibration, diffusion, etc.).

preetum.nakkiran.org

In the lectures, we first introduced diffusion models from a practitioner's perspective, showing how to build a simple but powerful implementation from the ground up (L1).

(1/4)

Running a prompt and getting output that looks good isn't sufficient evidence for a paper.

@Apple

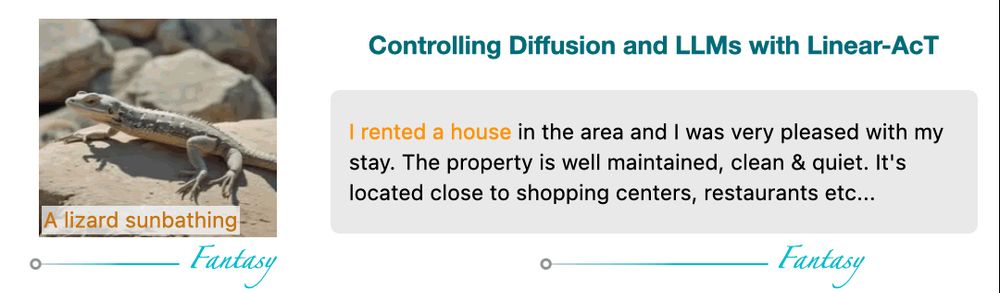

where we achieve interpretable and fine-grained control of LLMs and Diffusion models via Activation Transport 🔥

📄 arxiv.org/abs/2410.23054

🛠️ github.com/apple/ml-act

0/9 🧵

www.metacareers.com/jobs/1459691...

alexxthiery.github.io/jobs/2024_di...

My favourite: "Find the easiest problem you can't solve. The more embarrassing, the better!"

Slides: drive.google.com/file/d/15VaT...

TCS For all: sigact.org/tcsforall/

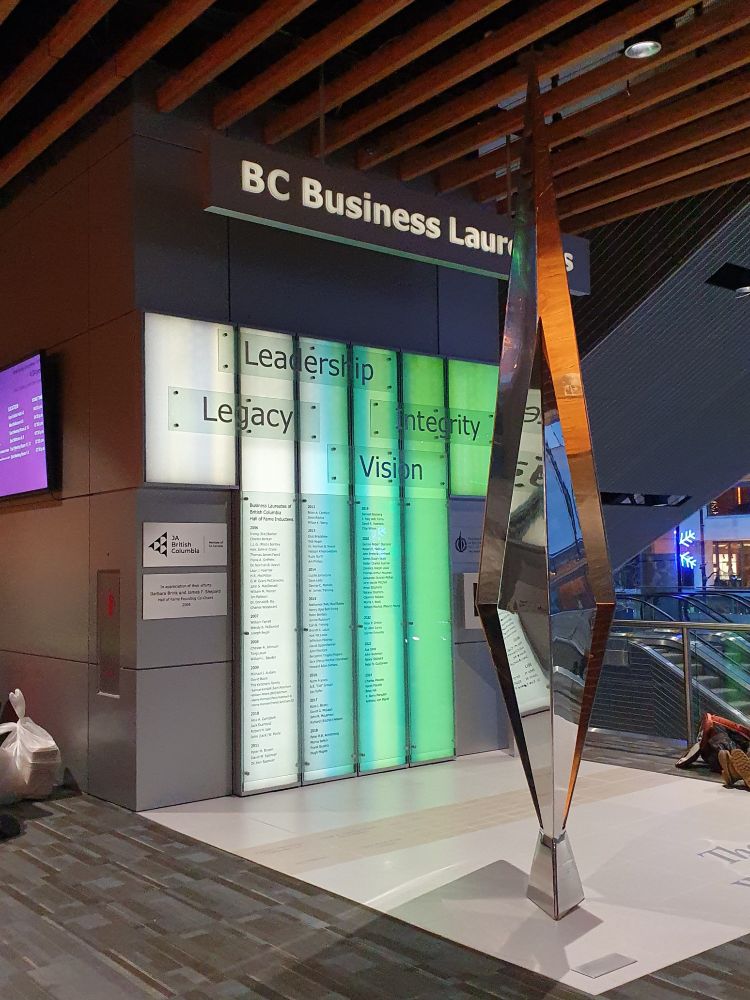

It's time for the #NeurIPS2024 diffusion circle!

🕒Join us at 3PM on Friday December 13. We'll meet near this thing, and venture out from there and find a good spot to sit. Tell your friends!

If you are presenting something that you think I should check out, pls drop the deets in thread (1-2 sentence description + where to find you)! 🙏

Although the two frameworks seem similar, there is some confusion in the community about the exact connection between them. We aim to clear up that confusion by showing how to convert one framework into the other, for both training and sampling.

I'm talking specifically about the observation that these models seem to recover the same (or similar) mappings every time, regardless of initialisation, etc.