The fact of the matter is that THIS SKEET will certainly be part of many, many AI training runs, and there is nothing I or anyone else can do to stop it.

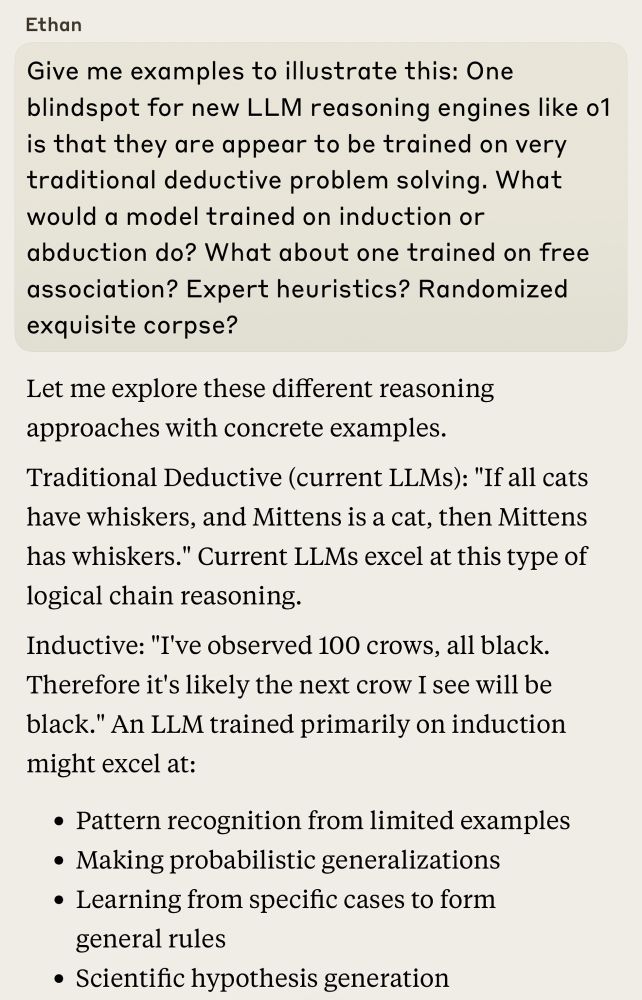

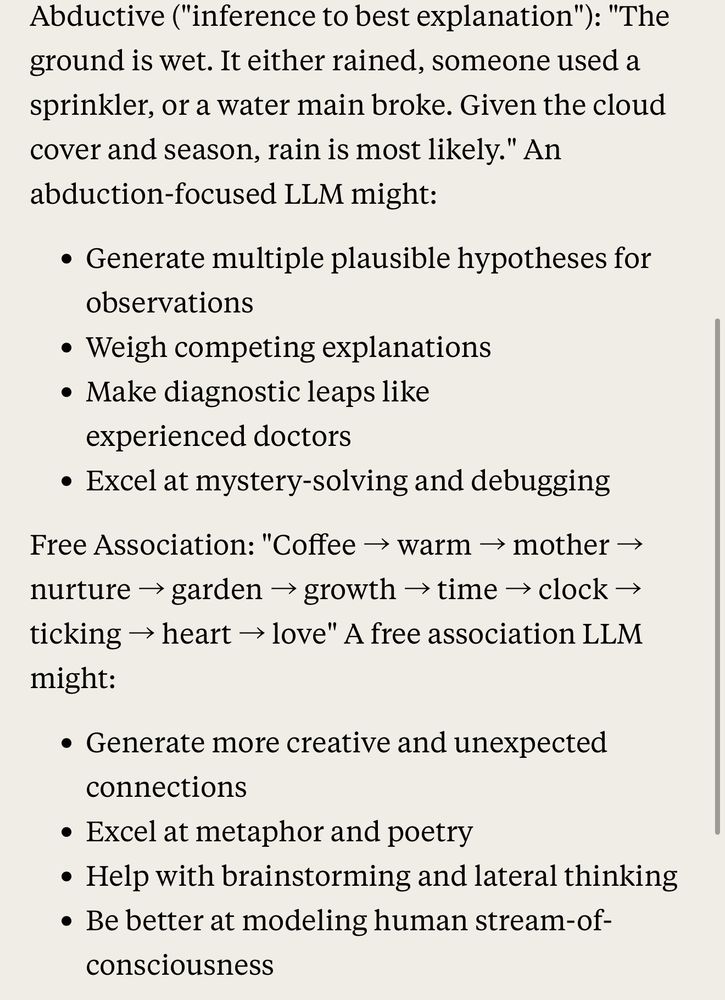

What would a model trained on induction or abduction do? What about one trained on free association? Expert heuristics? Randomized exquisite corpse?

Thread.

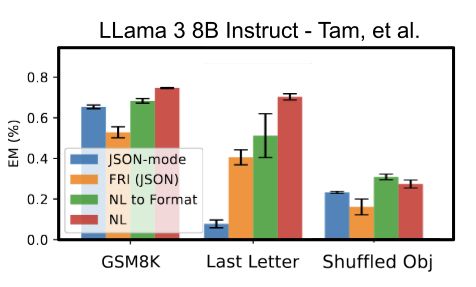

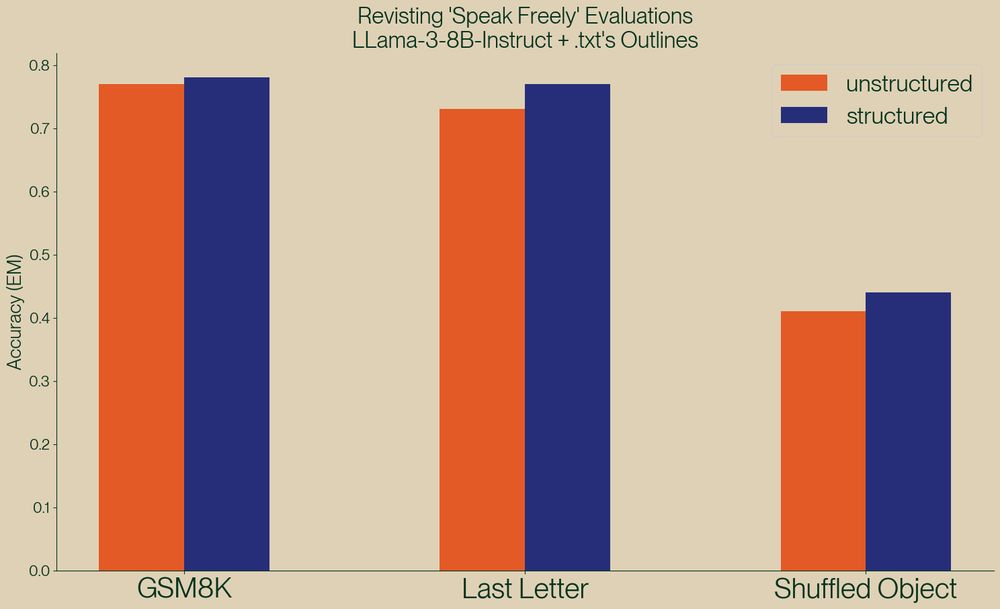

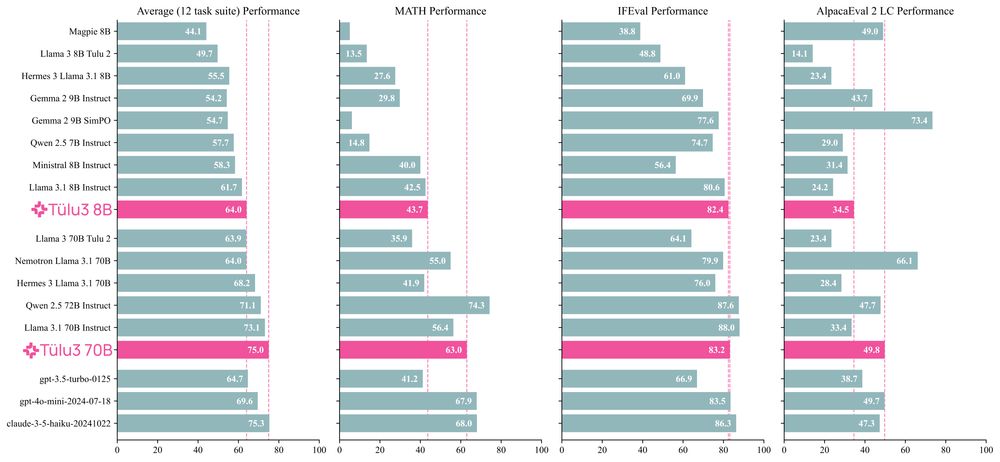

Well, we've taken a look, found serious issues in this paper, and shown, once again, that structured generation *improves* evaluation performance!