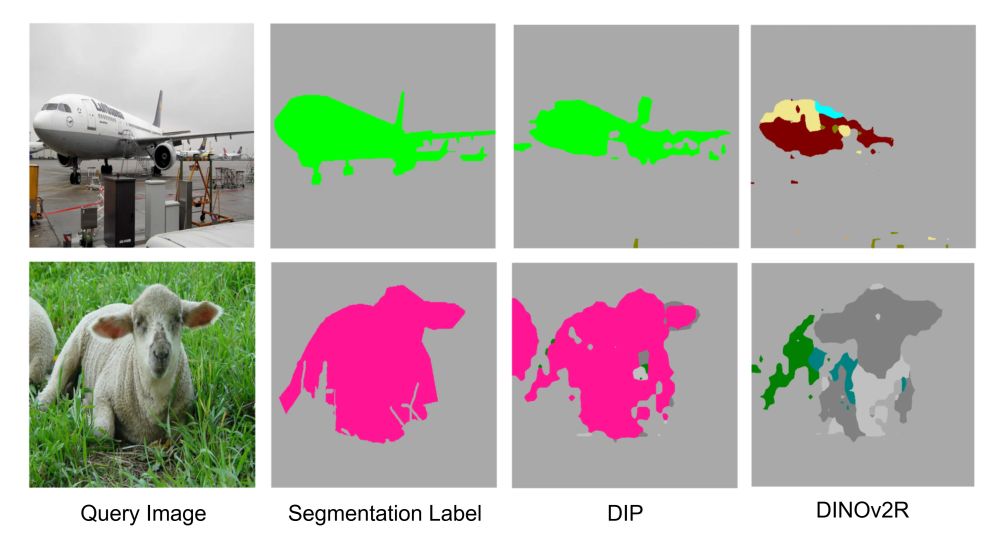

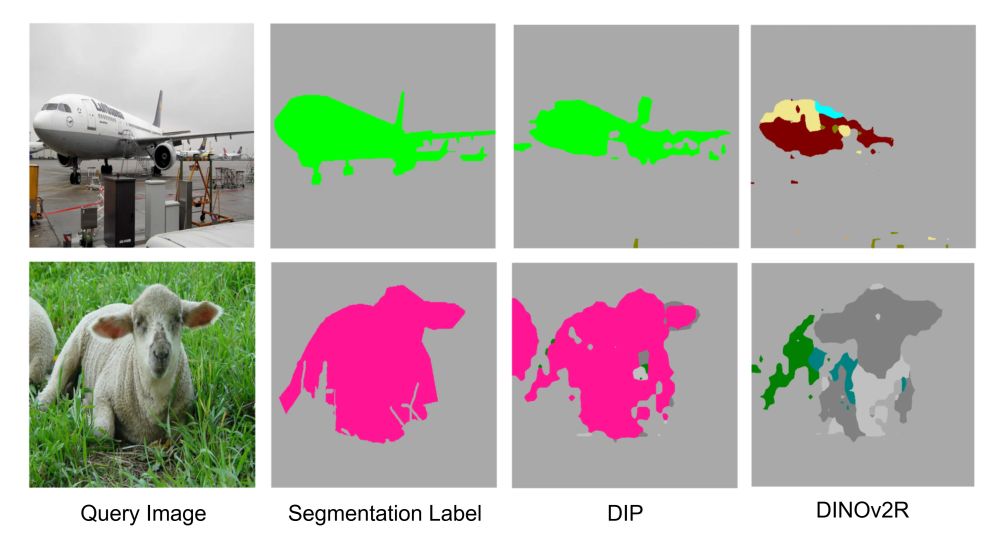

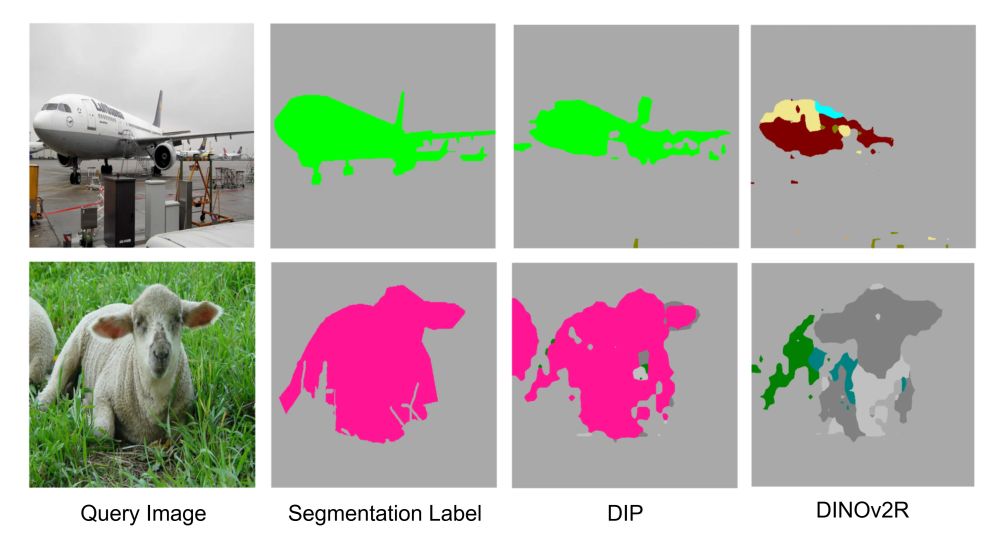

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

🚀Introducing NAF: A universal, zero-shot feature upsampler.

It turns low-res ViT features into pixel-perfect maps.

- ⚡ Model-agnostic

- 🥇 SoTA results

- 🚀 4× faster than SoTA

- 📈 Scales up to 2K res

We'll present MuDDoS at BMVC: a method that boosts multimodal distillation for 3D semantic segmentation under domain shift.

📍 BMVC

🕚 Monday, Poster Session 1: Multimodal Learning (11:00–12:30)

📌 Hadfield Hall #859

The team is proud to announce that several papers were accepted at #NeurIPS25. Looking forward to meeting you in Paris (25th-26th Nov), Copenhagen or San Diego (1st-7th Dec)!

Let's present our papers ⬇️

His thesis focused on structured visual representations:

• VidEdit - zero-shot text-to-video editing

• DiffCut - zero-shot segmentation via diffusion features

• JAFAR - high-res visual representation upsampling

First conference as a PhD student, really excited to meet new people.

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

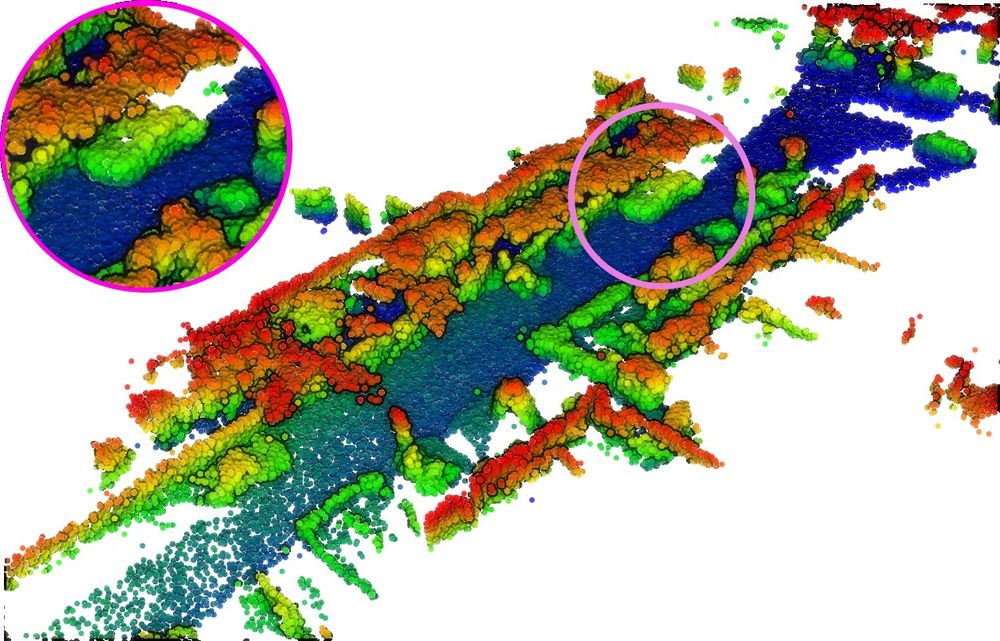

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (learning self-sup LiDAR features), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks w/o labels).

Paper: arxiv.org/abs/2506.11136

Project Page: jafar-upsampler.github.io

Github: github.com/PaulCouairon...

tldr: LiDPM enables high-quality LiDAR completion by applying a vanilla DDPM with tailored initialization, avoiding local diffusion approximations.

Project page: astra-vision.github.io/LiDPM/

Registration is open (it's free) with priority given to authors of accepted papers: cvprinparis.github.io/CVPR2025InPa...

Big 🧵👇 with details!

This is also an excellent occasion to fit all team members in a photo 📸

Many more are missing; please let me know who is already on bsky so I can add them!

go.bsky.app/BowzivT
