doing researchy metasciencey stuff at the Center for Open Science

I will not explain any further.

Small potatoes in the scheme of things, but just another example of the astounding destruction of scientific progress happening right now.

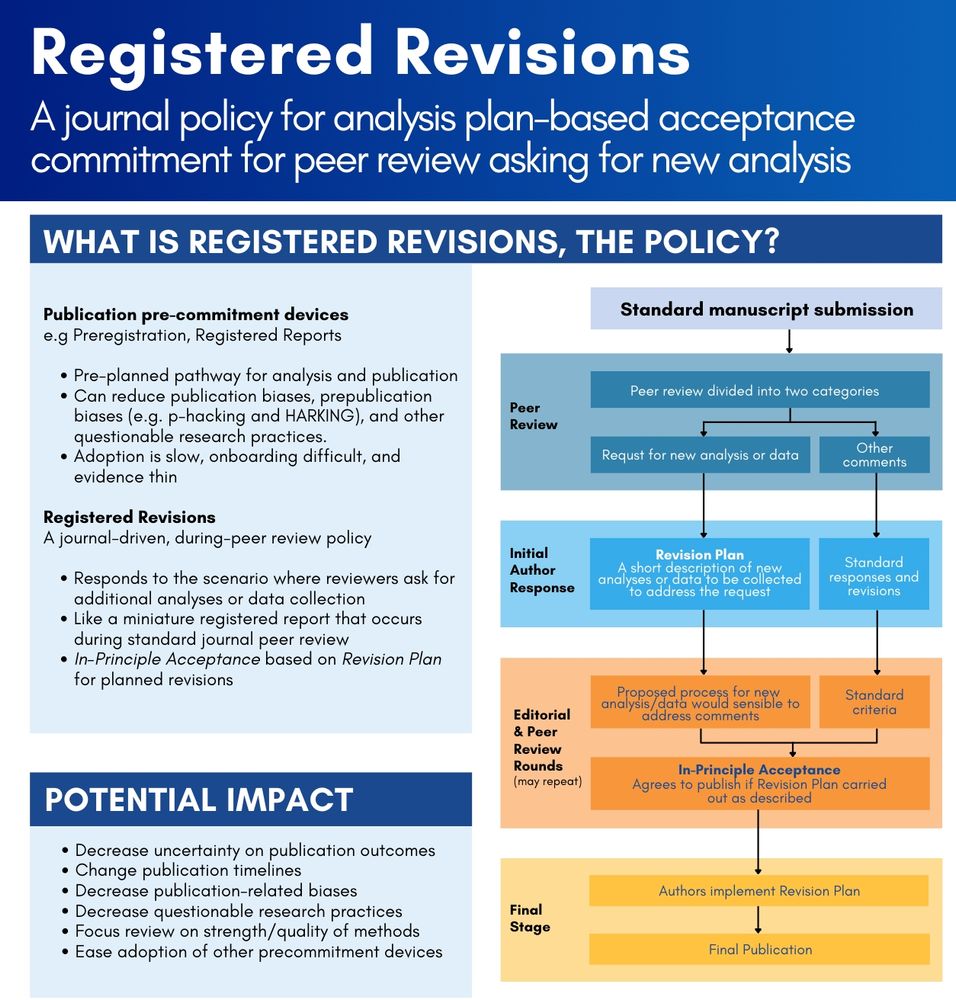

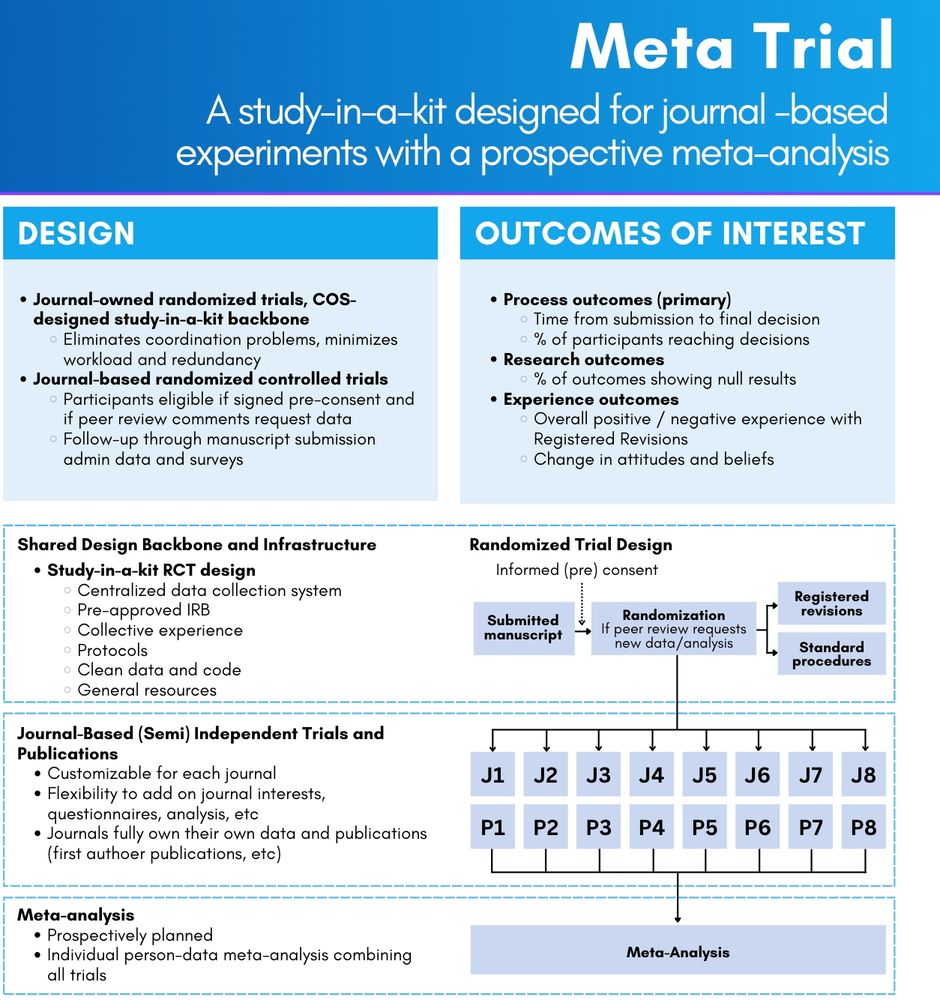

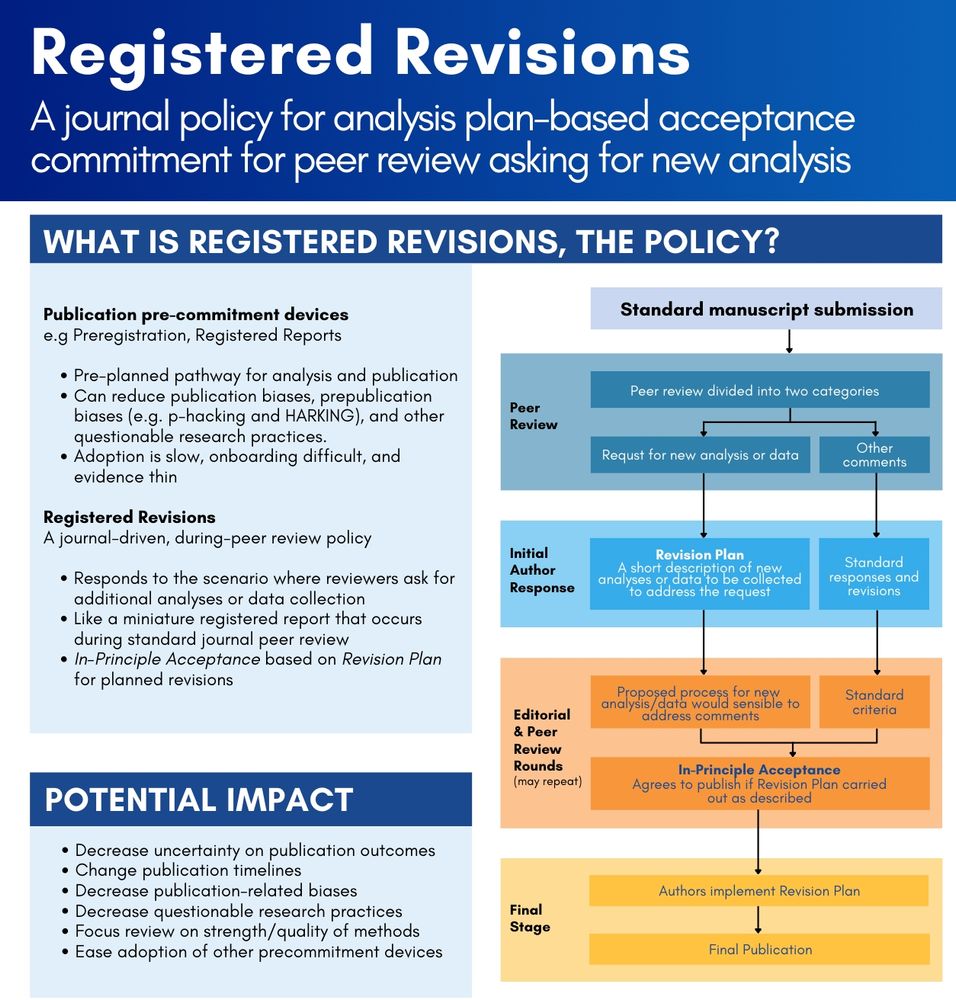

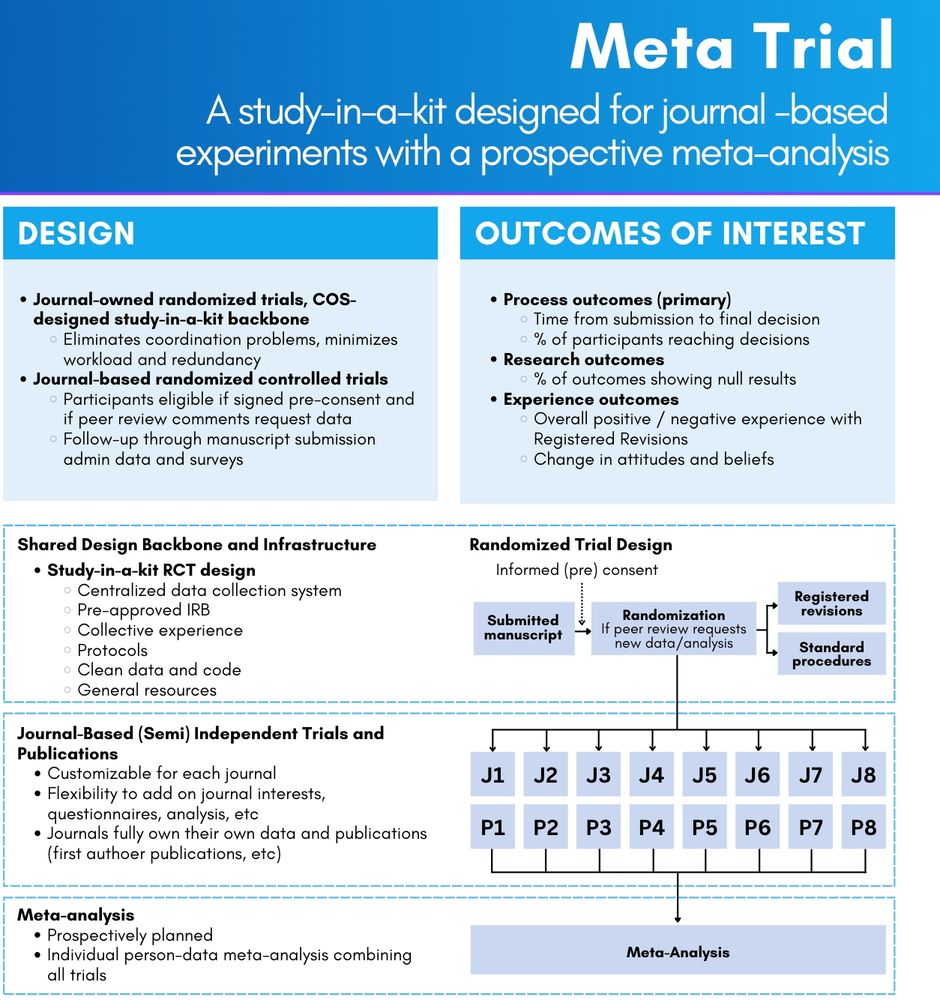

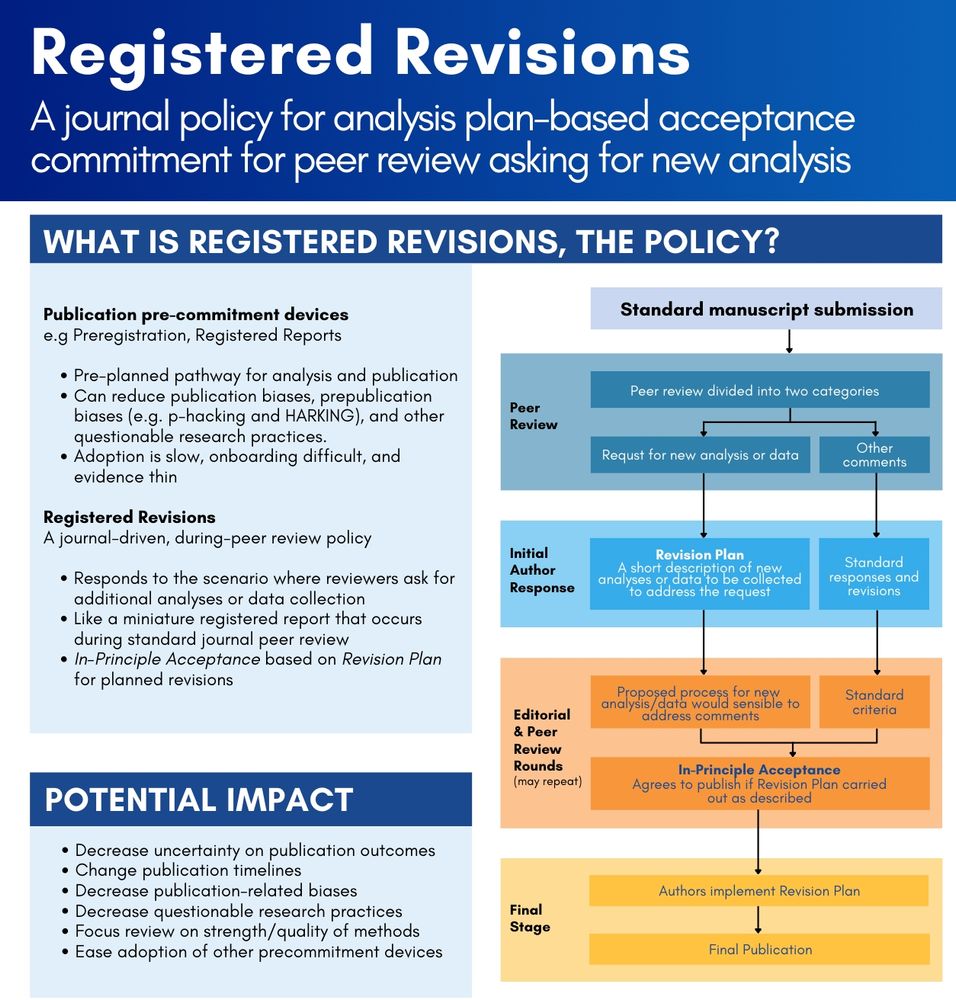

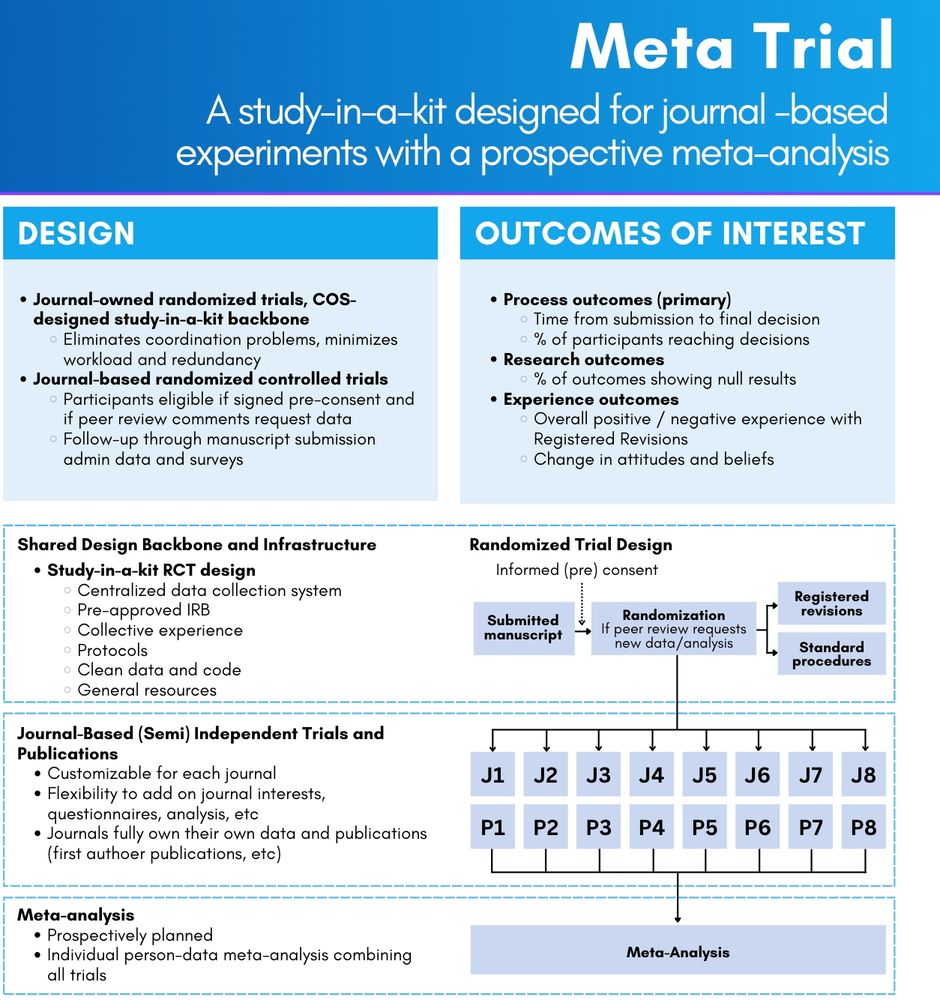

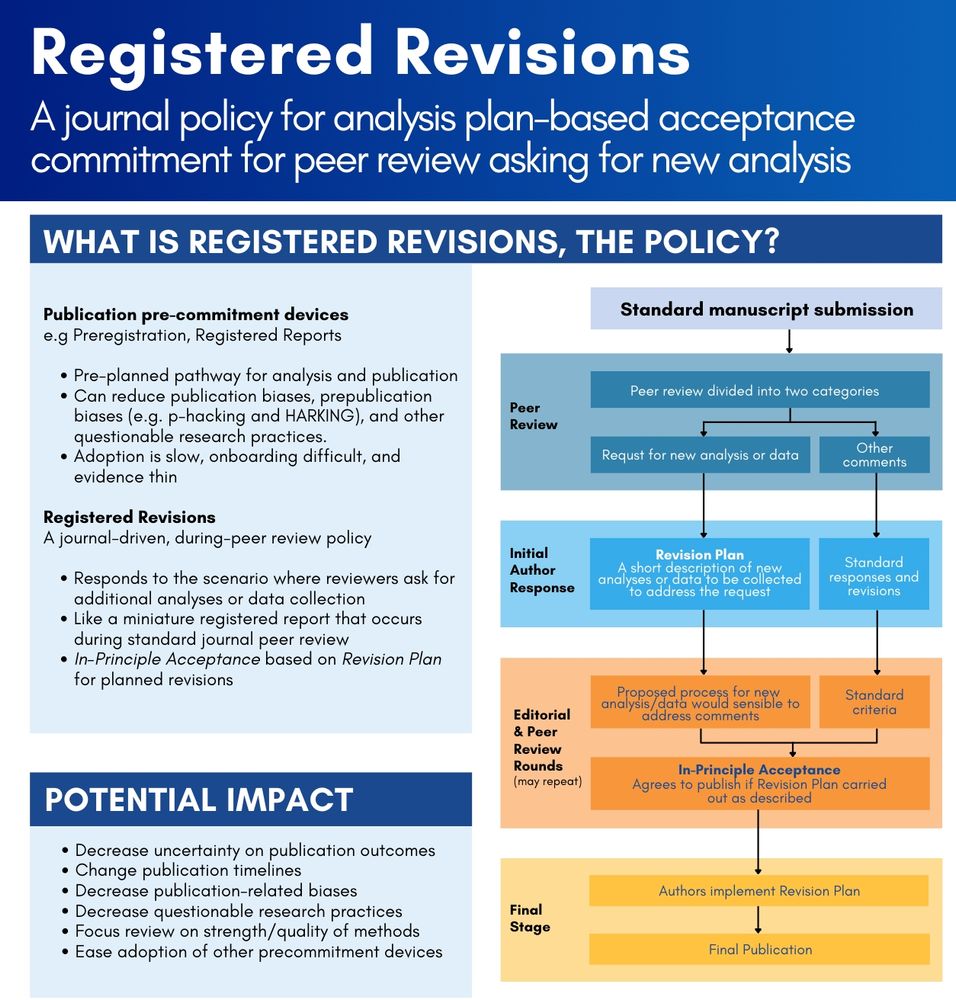

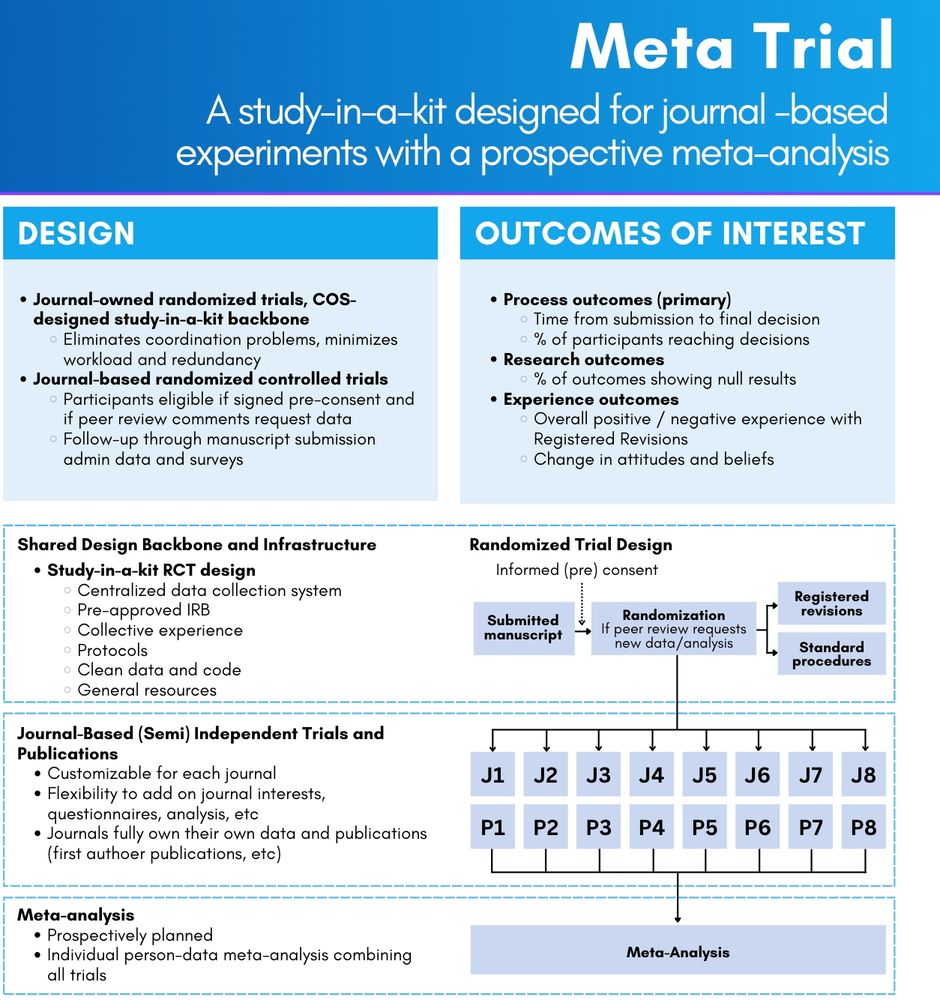

This project is about new peer review policy and a WILD new way of doing actionable evidence generation via RCTs. A LOT of RCTs.

Now piloted and ready for the main stage, and looking for partners! 🧵👇

In theory, it goes a LONG way towards solving the feasibility, logistics, and incentives problems inherent in multi-unit policy experiments at scale.

Not just for journal policy. For policy, period.

For the last year or so, we've been piloting a pretty wild approach to getting exactly that:

A study-in-a-kit

long live "how much do A and B contribute to Y"

Throw a DM my way if you need anything at all.

Fortunately the damage to my pride is almost certainly larger than any risk to participants.

But still. Humbling.

If you're around, come hang out!

A simple (read: stupid) set of rules dictating what's good (double-blind RCT, meta-analysis, etc.) and what's not (anything else).

What could possibly go wrong?

There is a lot to be said about how the aesthetics of science and scientific critique are being twisted, co-opted, corrupted, and targeted for destructive ends.

Right now, it is time to make yourself heard, however you choose to do it.

actionnetwork.org/petitions/op...

* Pre-written methods and results section

* Full reproducible data -> manuscript text and fig pipeline

* Pre-populated data w/ full trial(s) simulation

* Multiple levels (trials + meta-analysis) for all
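
For a rough sense of what "pre-populated data w/ full trial(s) simulation" plus a meta-analysis layer could look like, here is a minimal Python sketch. Everything in it is an assumption for illustration, not the project's actual pipeline: the function names (`simulate_trial`, `pool_fixed_effect`), the normally distributed outcomes, the true effect of 0.3, the 20 trials of 50 per arm, and the choice of simple fixed-effect (inverse-variance) pooling.

```python
import random
import statistics


def simulate_trial(n_per_arm, true_effect, rng):
    """Simulate one two-arm trial (hypothetical setup).

    Returns (difference in means, estimated variance of that difference).
    """
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_arm)]
    diff = statistics.mean(treated) - statistics.mean(control)
    var = (statistics.variance(treated) + statistics.variance(control)) / n_per_arm
    return diff, var


def pool_fixed_effect(results):
    """Inverse-variance weighted pooled estimate and its standard error."""
    weights = [1.0 / var for _, var in results]
    total = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, results)) / total
    se = (1.0 / total) ** 0.5
    return pooled, se


# Level 1: many small simulated trials. Level 2: one pooled estimate.
rng = random.Random(2025)  # fixed seed so the simulated data are reproducible
trials = [simulate_trial(n_per_arm=50, true_effect=0.3, rng=rng) for _ in range(20)]
pooled, se = pool_fixed_effect(trials)
print(f"pooled effect = {pooled:.3f} (SE {se:.3f})")
```

Running the analysis on simulated data first means the whole data -> results path can be checked before any real trial data exists. A real kit would presumably need a richer model (for example, random effects across trials).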

This Friday, where will you be?

standupforscience2025.org

Both identify the severity of the threat we face.

But when it comes to what we should do about it, one is a call to fight alongside the students and postdocs; the other takes the opportunity to tell those students to stop being critical of university admins.

🧪

www.nature.com/articles/d41...

www.science.org/doi/10.1126/...

Bad news: It's a more robust public record of your research if, say, some capricious and vindictive government decides to pull your funding, force modifications, censor it, or cancel it altogether.

Science is punk.

This take is somehow simultaneously entirely predictable and surprising. It's profoundly demoralizing and ineffective, maybe harmful.

www.science.org/doi/10.1126/...

Those weaknesses are points of leverage for people trying to tear the whole thing down.

I would strongly recommend avoiding the potato salad for a long while.

That includes 1,250 people at CDC, including all first-year EIS officers — “disease detectives.”

Employees are bracing for more layoffs in coming days.
