Computational Psycholinguistics, NLP, Cognitive Science.

https://lacclab.github.io/

www.sciencedirect.com/science/arti...

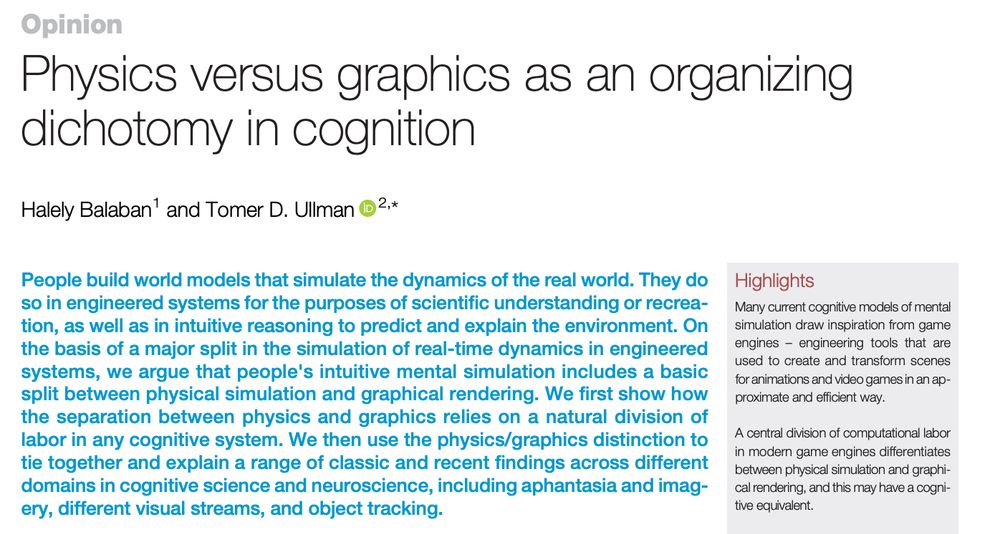

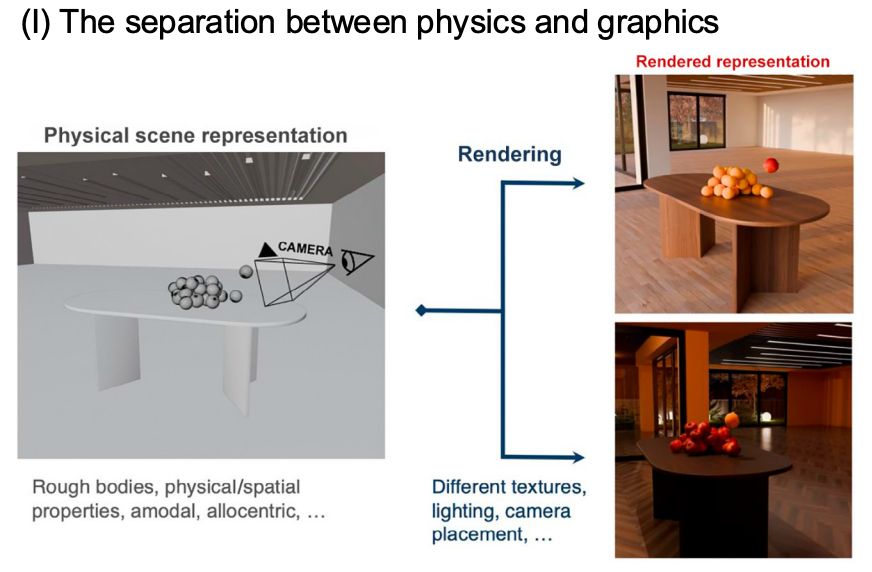

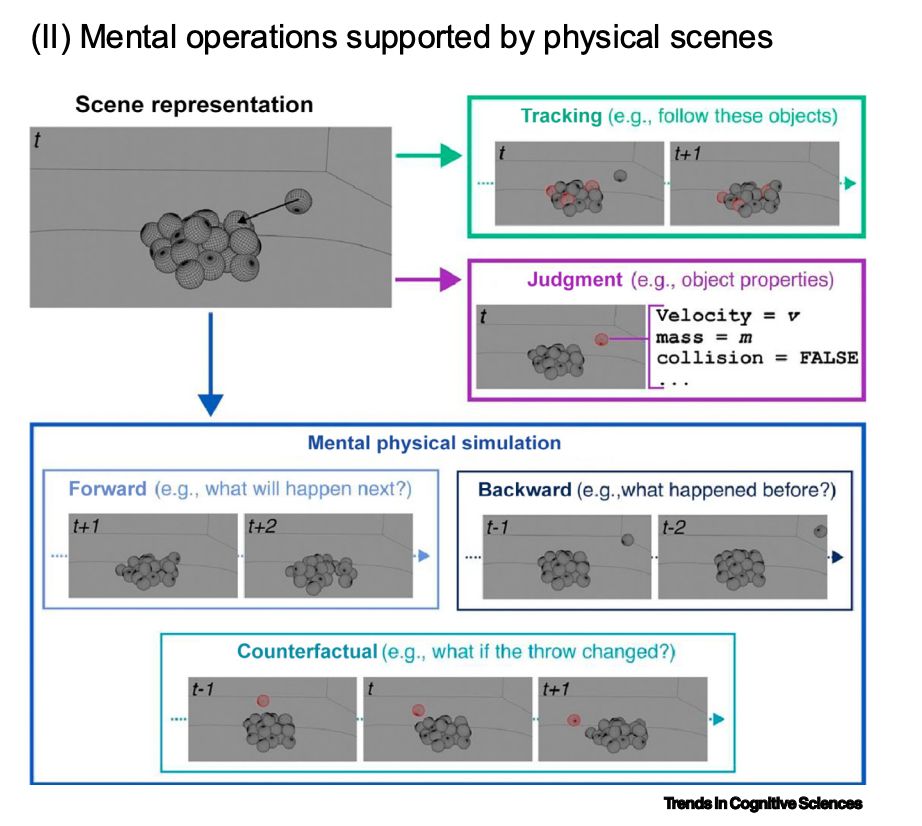

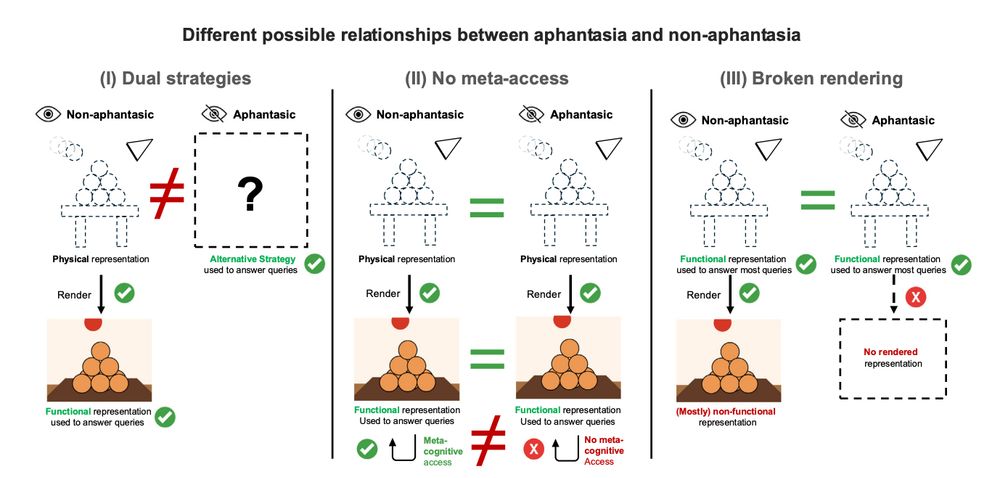

"Physics vs. graphics as an organizing dichotomy in cognition"

(by Balaban & me)

relevant for many people, related to imagination, intuitive physics, mental simulation, aphantasia, and more

authors.elsevier.com/a/1lBaC4sIRv...

Today we are launching our new Thematic Collections to organize our growing set of articles!

Happy to announce the release of the OneStop Eye Movements dataset! 🎉 🎉

OneStop is the product of over 6 years of experimental design, data collection and data curation.

github.com/lacclab/OneS...

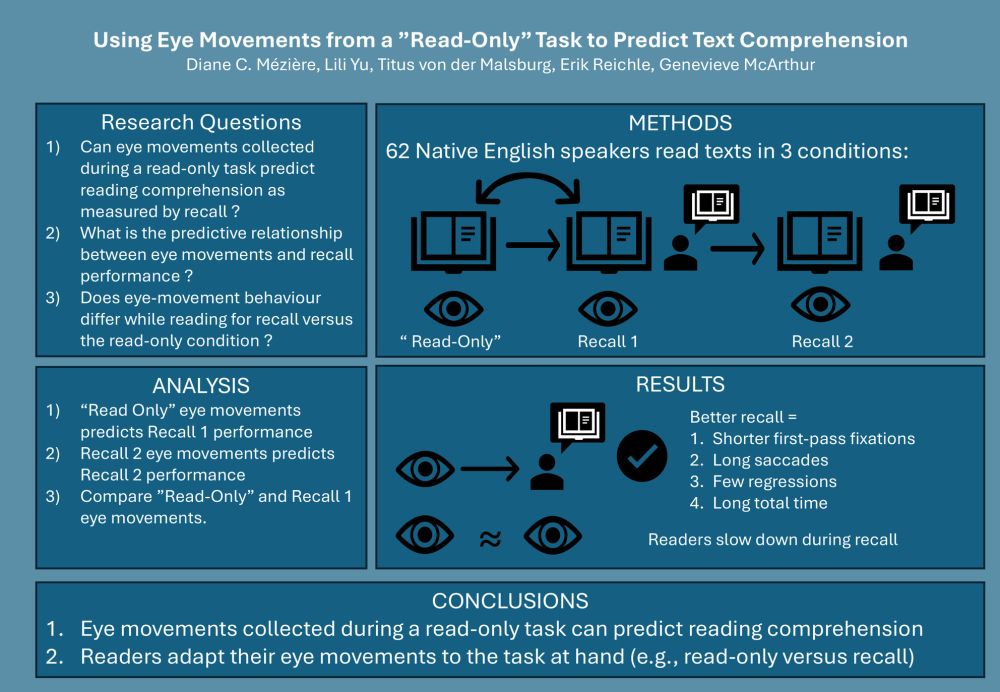

ila.onlinelibrary.wiley.com/doi/10.1002/...

vasishth.github.io/sentproc-wor...

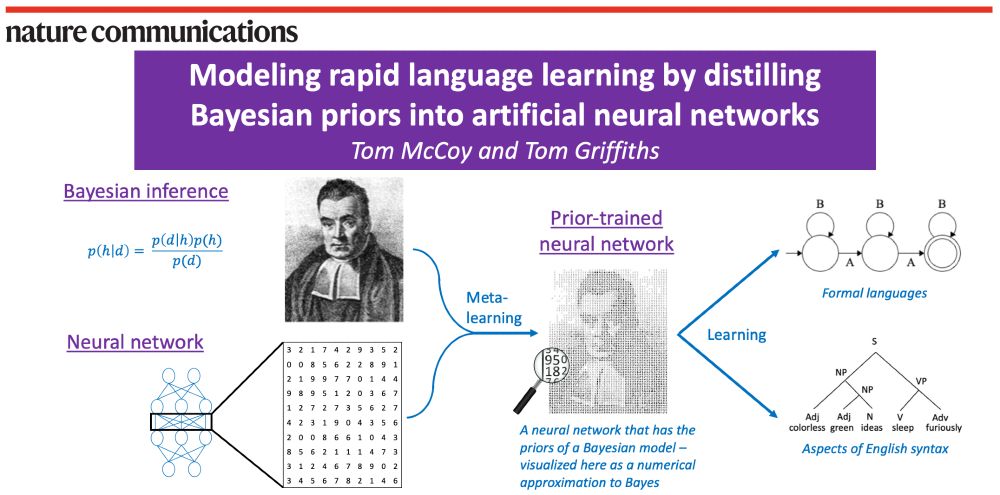

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n

- I trained a Transformer language model on sentences sampled from a PCFG

- The students' task: Given the Transformer, try to infer the PCFG (w/ a leaderboard for who got closest)

Would recommend!

1/n

TL;DR we find no evidence that LLMs have privileged access to their own knowledge.

Beyond the study of LLM introspection, our findings inform an ongoing debate in linguistics research: prompting (e.g., grammaticality judgments) ≠ probability measurement!

Across models and domains, we did not find evidence that LLMs have privileged access to their own predictions. 🧵(1/8)

"Re-evaluating Theory of Mind evaluation in large language models"

(by Hu* @jennhu.bsky.social, Sosa, and me)

link: arxiv.org/pdf/2502.21098

From childhood on, people can create novel, playful, and creative goals. Models have yet to capture this ability. We propose a new way to represent goals and report a model that can generate human-like goals in a playful setting... 1/N

It'd be great if you could share this widely with people you think might be interested.

More details on the position & how to apply: bit.ly/cocodev_post...

Official posting here: academicpositions.harvard.edu/postings/14723

Submit an abstract by March 1st and join us!

tmalsburg.github.io/Comprehensio...

link.springer.com/article/10.3...

www.jobs.cam.ac.uk/job/48835/

Deadline January 31st!

#NLP #DigitalHumanities #CulturalAnalytics
