Additional fine-tuning examples in our docs with:

@pytorch.org, Deepspeed, @lightningai.bsky.social, HF Accelerate

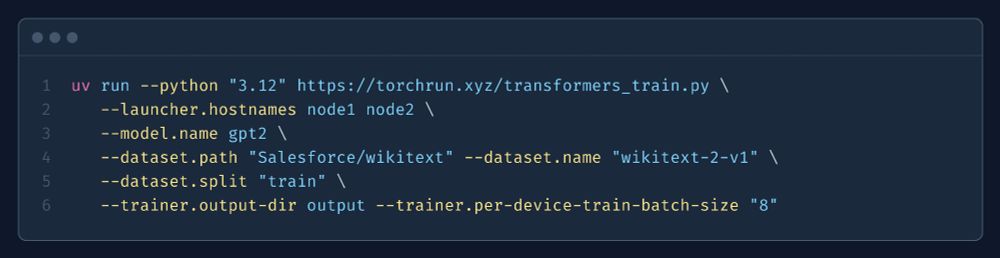

@huggingface transformers) on any text dataset *with multiple nodes* in just *one command*.

torchrun.xyz/examples/tra...

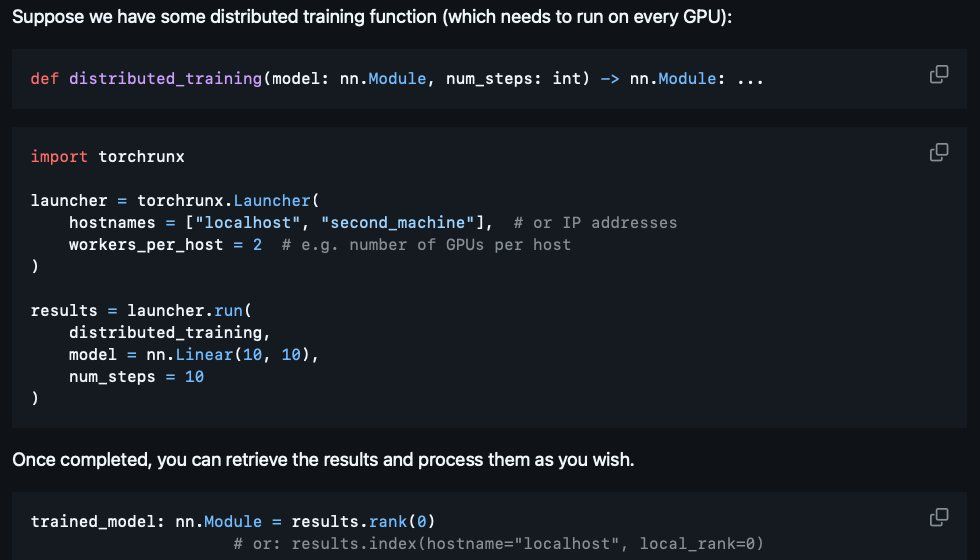

Most basic usage: specify the (SSH-enabled) machines you want to parallelize your code across, then launch a function onto that configuration.

All from inside your Python script!
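To show what "launch a function onto that configuration" involves, here is a minimal local simulation. It is a sketch under stated assumptions and is not the library's API. `worker_layout`, `worker_env`, and `launch_simulated` are hypothetical names. There is no SSH and no torch: each "worker" runs sequentially in the current process. Each worker sees the torchrun-style `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` environment variables, which is how a distributed training function usually learns its place in the job.

```python
import os
from contextlib import contextmanager
from typing import Any, Callable


def worker_layout(hostnames: list[str], workers_per_host: int) -> list[dict[str, Any]]:
    """Assign a global rank and a per-host local rank to every worker (hypothetical helper)."""
    world_size = len(hostnames) * workers_per_host
    layout = []
    for node_rank, host in enumerate(hostnames):
        for local_rank in range(workers_per_host):
            layout.append({
                "hostname": host,
                "node_rank": node_rank,
                "local_rank": local_rank,
                "rank": node_rank * workers_per_host + local_rank,
                "world_size": world_size,
            })
    return layout


@contextmanager
def worker_env(worker: dict[str, Any]):
    """Temporarily set torchrun-style env vars for one worker, then restore the old values."""
    keys = {
        "RANK": worker["rank"],
        "LOCAL_RANK": worker["local_rank"],
        "WORLD_SIZE": worker["world_size"],
    }
    saved = {k: os.environ.get(k) for k in keys}
    os.environ.update({k: str(v) for k, v in keys.items()})
    try:
        yield
    finally:
        for k, old in saved.items():
            if old is None:
                os.environ.pop(k, None)
            else:
                os.environ[k] = old


def launch_simulated(func: Callable, hostnames: list[str], workers_per_host: int, *args, **kwargs) -> list:
    """Hypothetical stand-in for a launcher: call func once per worker, in rank order, locally."""
    results = []
    for worker in worker_layout(hostnames, workers_per_host):
        with worker_env(worker):
            results.append(func(*args, **kwargs))
    return results


def train(scale: int) -> int:
    # A real training function would call torch.distributed.init_process_group here.
    rank = int(os.environ["RANK"])
    return rank * scale


print(launch_simulated(train, ["node-a", "node-b"], 2, 10))  # [0, 10, 20, 30]
```

A real launcher would start these workers in parallel on the remote machines and collect their return values. The rank arithmetic (`node_rank * workers_per_host + local_rank`) is the part that carries over.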

If you want the naive, training-free / model-agnostic approach: their related work section says the most common choice is to use the final token's last hidden state.
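Here is a rough sketch of that last-token pooling. It assumes you already have the model's last-layer hidden states (batch × seq_len × dim) and the attention mask (1 = real token, 0 = padding). Plain nested lists keep it self-contained. `last_token_embedding` is a hypothetical name. With tensors you would gather the same index per row.

```python
from typing import List

Vector = List[float]


def last_token_embedding(
    hidden_states: List[List[Vector]],
    attention_mask: List[List[int]],
) -> List[Vector]:
    """Return the last-layer hidden state of each sequence's final non-padding token.

    Searches for the last position where the mask is 1, so it works with
    either left or right padding.
    """
    embeddings = []
    for states, mask in zip(hidden_states, attention_mask):
        last = max((i for i, m in enumerate(mask) if m == 1), default=-1)
        if last < 0:
            raise ValueError("sequence has no non-padding tokens")
        embeddings.append(states[last])
    return embeddings


# Right-padded second sequence: its final real token is at position 1.
hidden = [
    [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]],
    [[4.0, 4.0], [5.0, 5.0], [0.0, 0.0]],
]
mask = [[1, 1, 1], [1, 1, 0]]
print(last_token_embedding(hidden, mask))  # [[3.0, 3.0], [5.0, 5.0]]
```

Taking the final token makes sense for decoder-only (causal) models. Because of causal attention, it is the only position that has attended to the whole input.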

If you want the naive, training-free / model-agnostic approach: their related work section says it is most common to using the final token’s last hidden state.