Apoorv Khandelwal

@apoorvkh.com

820 followers

200 following

16 posts

cs phd student at brown

https://apoorvkh.com

Apoorv Khandelwal

@apoorvkh.com

· Jul 28

Apoorv Khandelwal

@apoorvkh.com

· May 29

Reposted by Apoorv Khandelwal

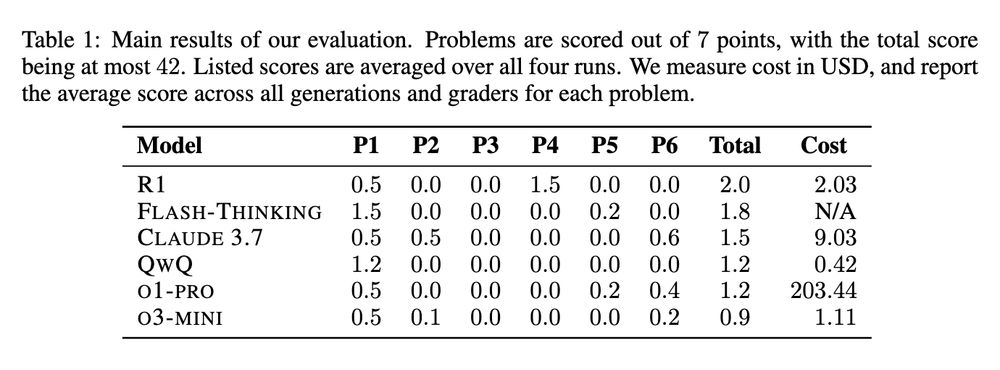

Dr Sasha Luccioni

@sashamtl.bsky.social

· Mar 19

Reposted by Apoorv Khandelwal

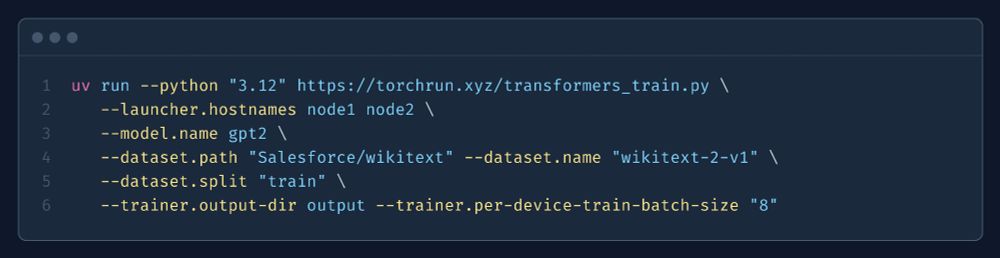

Apoorv Khandelwal

@apoorvkh.com

· Mar 11

Reposted by Apoorv Khandelwal

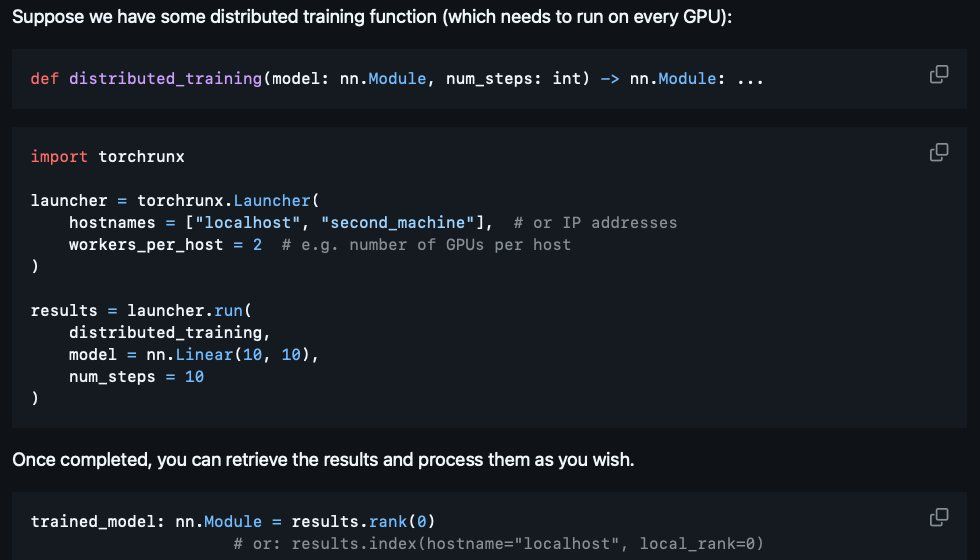

Apoorv Khandelwal

@apoorvkh.com

· Jan 25

Reposted by Apoorv Khandelwal

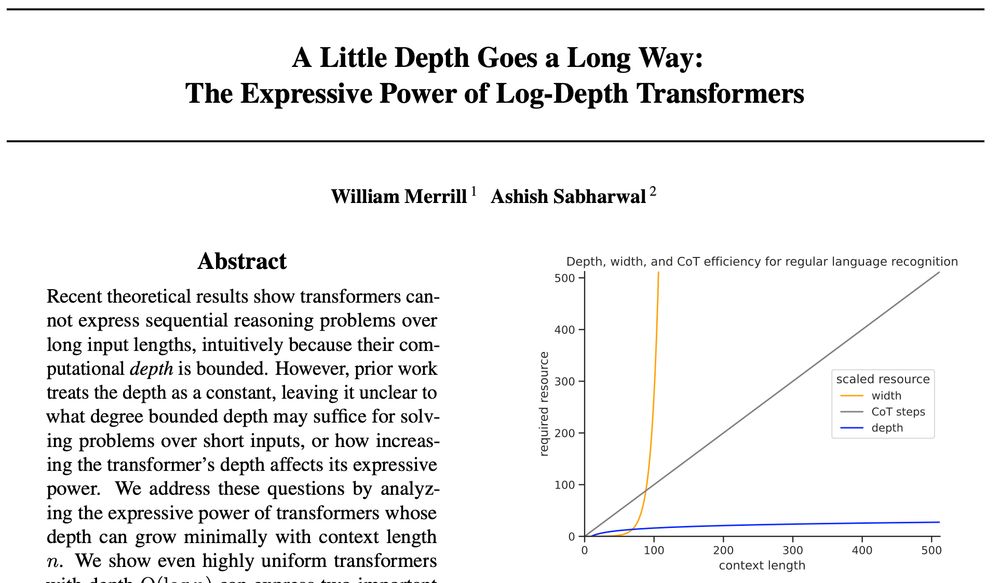

Naomi Saphra

@nsaphra.bsky.social

· Dec 18

Reposted by Apoorv Khandelwal

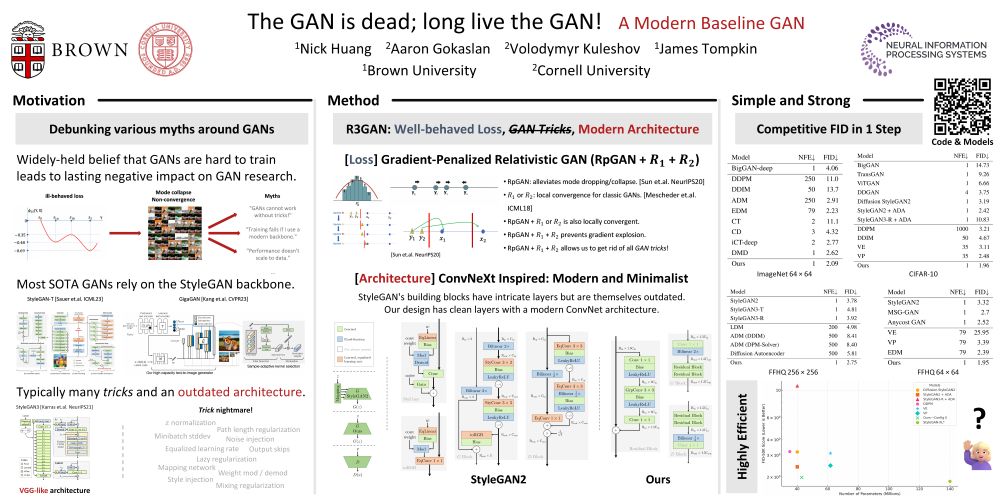

Apoorv Khandelwal

@apoorvkh.com

· Nov 27

Reposted by Apoorv Khandelwal

Joe Stacey

@joestacey.bsky.social

· Nov 24

Apoorv Khandelwal

@apoorvkh.com

· Nov 21

Apoorv Khandelwal

@apoorvkh.com

· Nov 21