Arnaud Doucet

@arnauddoucet.bsky.social

890 followers

220 following

10 posts

Senior Staff Research Scientist @Google DeepMind, previously Stats Prof @Oxford Uni - interested in Computational Statistics, Generative Modeling, Monte Carlo methods, Optimal Transport.

Posts

Reposted by Arnaud Doucet

Arnaud Doucet

@arnauddoucet.bsky.social

· Jan 16

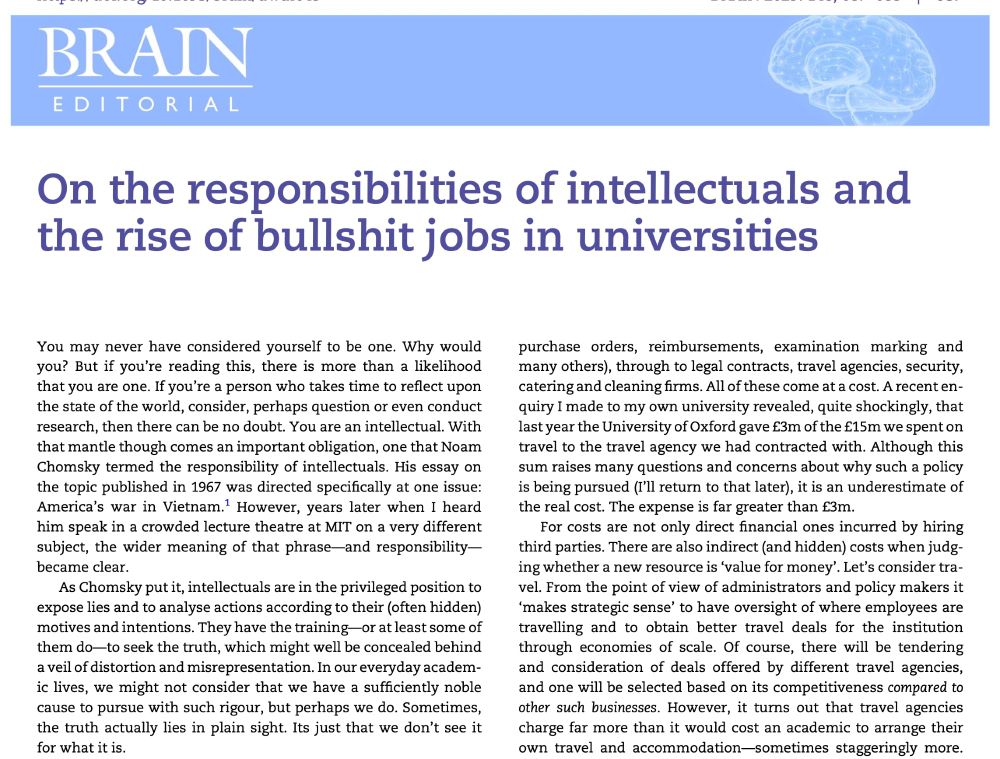

On the Asymptotics of Importance Weighted Variational Inference

For complex latent variable models, the likelihood function is not available in closed form. In this context, a popular method to perform parameter estimation is Importance Weighted Variational Infere...

arxiv.org

Arnaud Doucet

@arnauddoucet.bsky.social

· Dec 27

Reposted by Arnaud Doucet

Arnaud Doucet

@arnauddoucet.bsky.social

· Dec 14

Reposted by Arnaud Doucet

Gergely Neu

@neu-rips.bsky.social

· Dec 4

On the optimality of coin-betting for mean estimation

Confidence sequences are sequences of confidence sets that adapt to incoming data while maintaining validity. Recent advances have introduced an algorithmic formulation for constructing some of the ti...

arxiv.org