Christoph Abels

@cabels18.bsky.social

68 followers · 77 following · 41 posts

Post-Doctoral Fellow @unipotsdam.bsky.social, visiting @arc-mpib.bsky.social | PhD @hertieschool.bsky.social | Democracy, Technology, Behavioral Public Policy | Website: https://christophabels.com

Christoph Abels

@cabels18.bsky.social

· Jul 28

Ezequiel Lopez-Lopez

@eloplop.bsky.social

· Jul 28

NYAS Publications

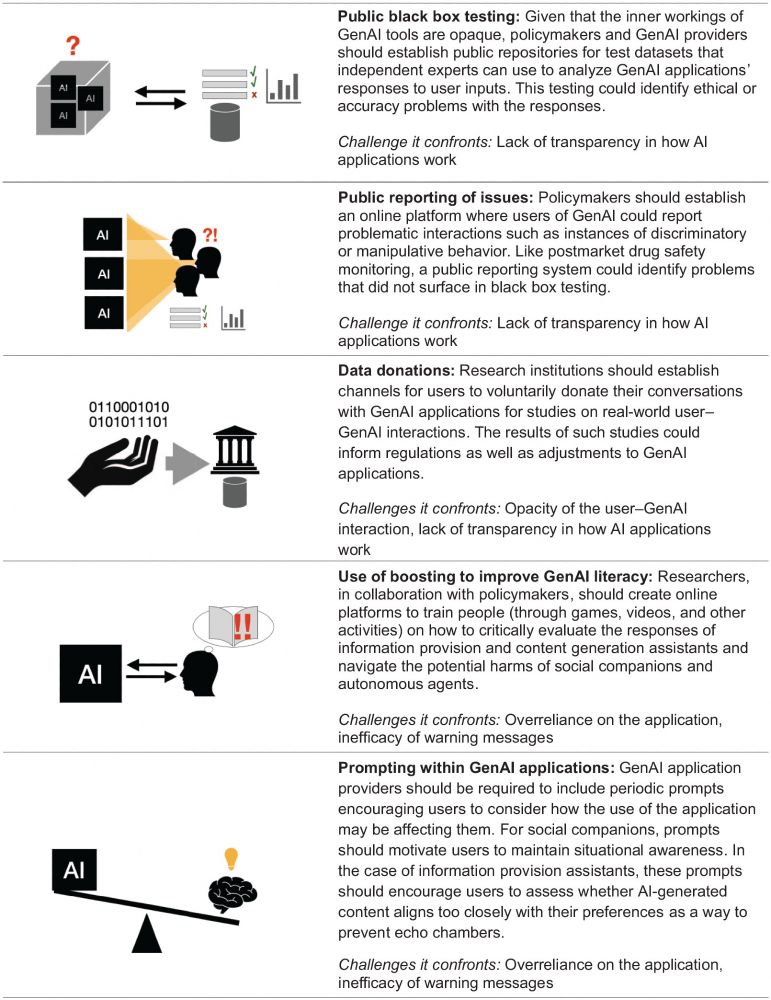

Generative artificial intelligence (GenAI) applications, such as ChatGPT, are transforming how individuals access health information, offering conversational and highly personalized interactions. Whi...

nyaspubs.onlinelibrary.wiley.com

Reposted by Christoph Abels

Ezequiel Lopez-Lopez

@eloplop.bsky.social

· Jul 28

NYAS Publications

Generative artificial intelligence (GenAI) applications, such as ChatGPT, are transforming how individuals access health information, offering conversational and highly personalized interactions. Whi...

nyaspubs.onlinelibrary.wiley.com

Christoph Abels

@cabels18.bsky.social

· Jul 7
