Ezequiel Lopez-Lopez

@eloplop.bsky.social

120 followers

210 following

16 posts

Pre-doctoral researcher @ Max Planck Institute for Human Development (Adaptive Rationality Center) — Berlin | knowledge representations, NLP, computational social sciences & policy

Ezequiel Lopez-Lopez

@eloplop.bsky.social

· Jul 28

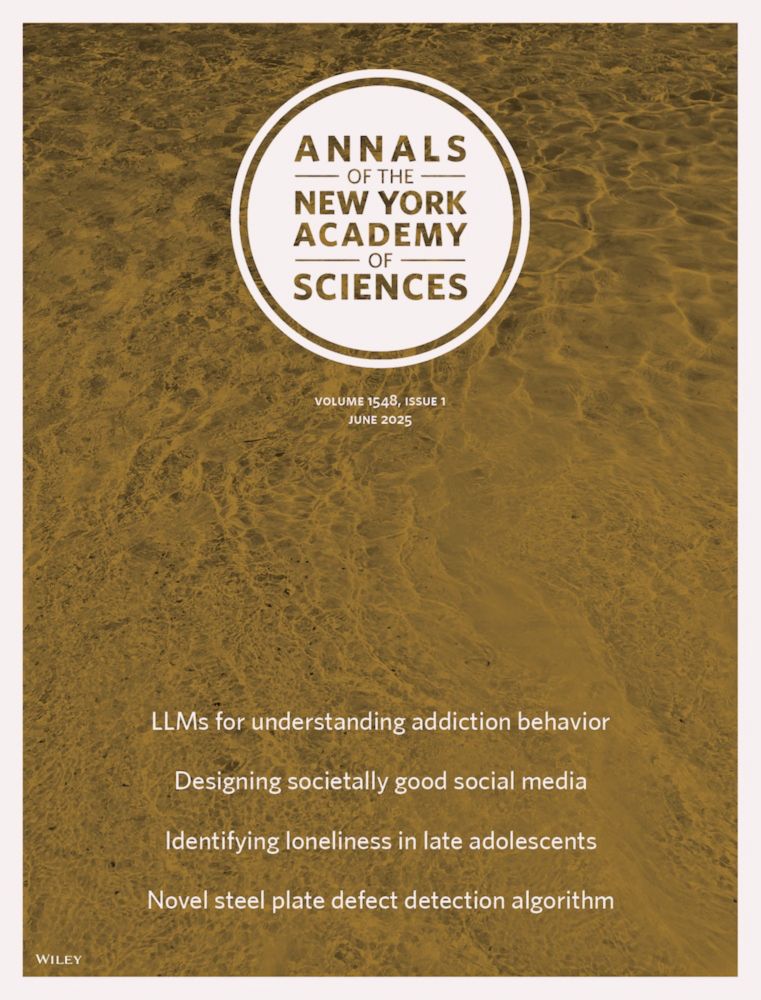

NYAS Publications

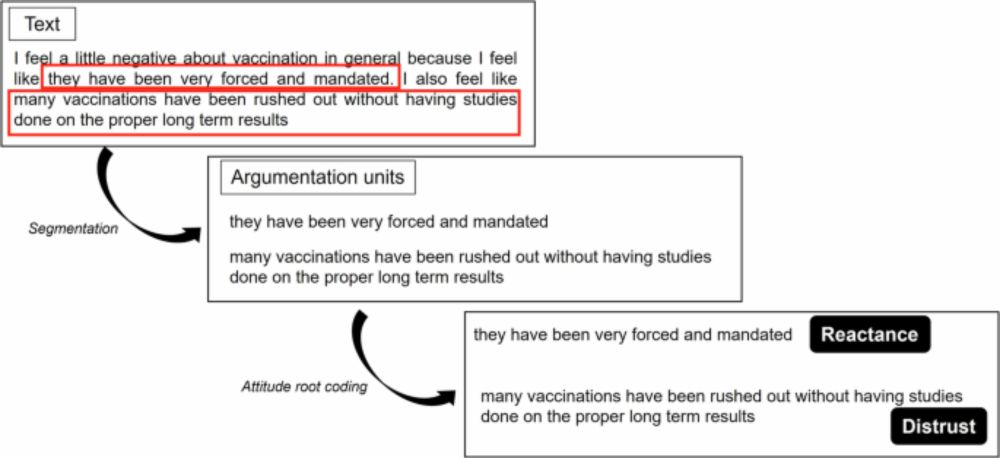

Generative artificial intelligence (GenAI) applications, such as ChatGPT, are transforming how individuals access health information, offering conversational and highly personalized interactions. Whi...

nyaspubs.onlinelibrary.wiley.com

Reposted by Ezequiel Lopez-Lopez

Christoph Abels

@cabels18.bsky.social

· Jul 7

The governance & behavioral challenges of generative artificial intelligence’s hypercustomization capabilities - Christoph M. Abels, Ezequiel Lopez-Lopez, Jason W. Burton, Dawn L. Holford, Levin Brink...

Generative artificial intelligence (GenAI) is changing human–machine interactions and the broader information ecosystem. Much as social media algorithms persona...

journals.sagepub.com

Reposted by Ezequiel Lopez-Lopez

Stephan Lewandowsky

@lewan.bsky.social

· Jun 19

The Anti-Autocracy Handbook: A Scholars' Guide to Navigating Democratic Backsliding

The Anti-Autocracy Handbook is a call to action, resilience, and collective defence of democracy, truth, and academic freedom in the face of mounting authoritarianism. It tries to provide guidance to ...

sks.to

Reposted by Ezequiel Lopez-Lopez

Ullrich Ecker

@ulliecker.bsky.social

· Jun 5

Misinformation poses a bigger threat to democracy than you might think

In today’s polarized political climate, researchers who combat mistruths have come under attack and been labelled as unelected arbiters of truth. But the fight against misinformation is valid, warrant...

nature.com