Cesare

@cesare-spinoso.bsky.social

25 followers

30 following

7 posts

Hello! I'm Cesare (pronounced Chez-array). I'm a PhD student at McGill/Mila working in NLP/computational pragmatics.

@mcgill-nlp.bsky.social

@mila-quebec.bsky.social

https://cesare-spinoso.github.io/

Cesare

@cesare-spinoso.bsky.social

· Jun 26

Reposted by Cesare

Siva Reddy

@sivareddyg.bsky.social

· May 1

Language Models Largely Exhibit Human-like Constituent Ordering Preferences

Though English sentences are typically inflexible vis-à-vis word order, constituents often show far more variability in ordering. One prominent theory presents the notion that constituent ordering is ...

arxiv.org

Reposted by Cesare

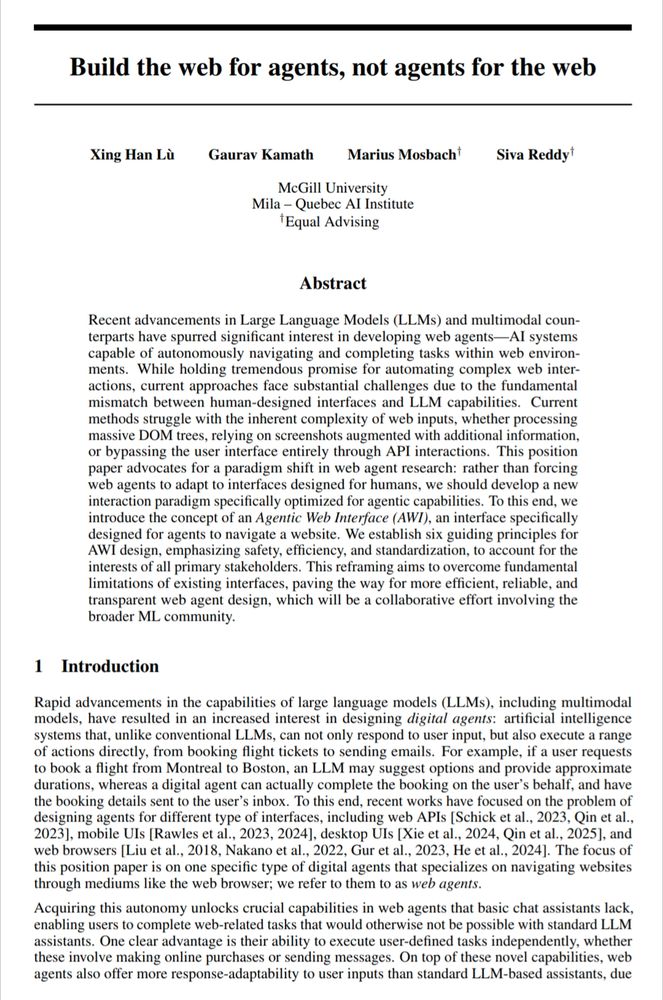

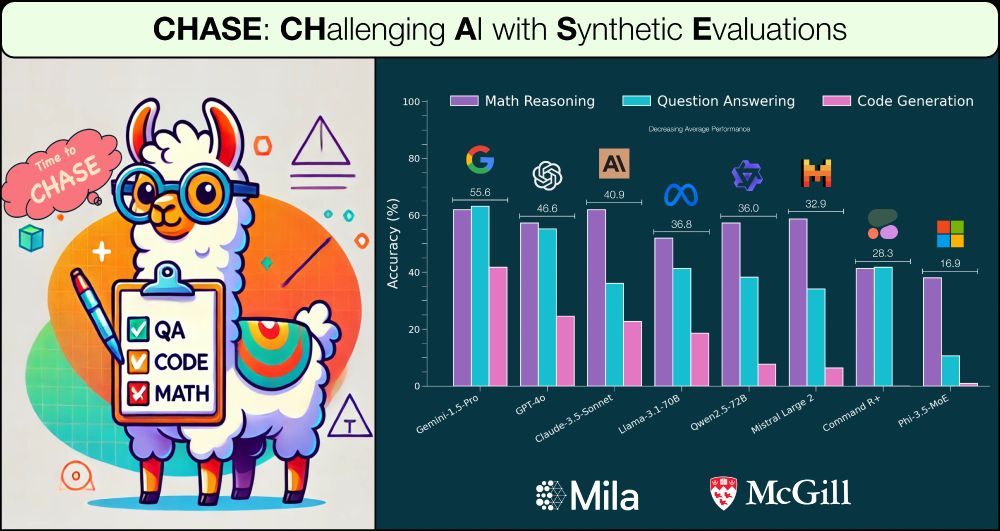

McGill NLP

@mcgill-nlp.bsky.social

· Nov 23