Co-Director @McGill-NLP.bsky.social

Researcher @ServiceNow.bsky.social

Alumni: @StanfordNLP.bsky.social, EdinburghNLP

Natural Language Processor #NLProc

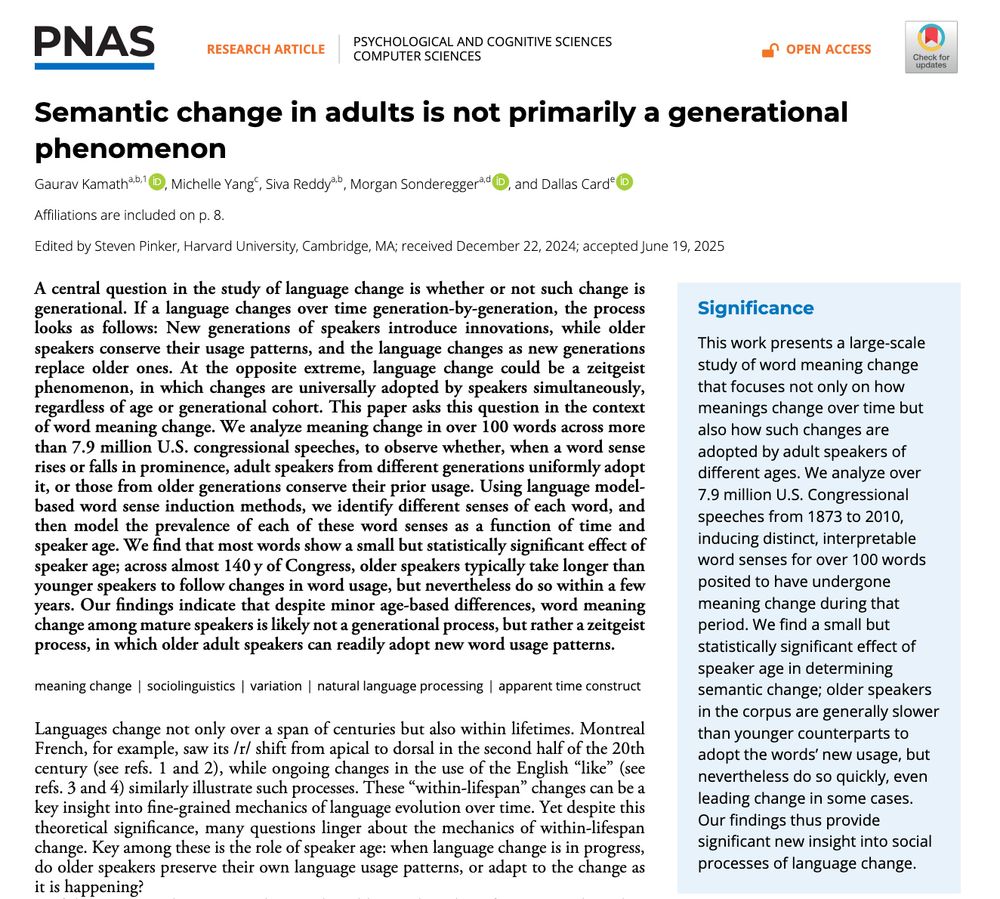

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

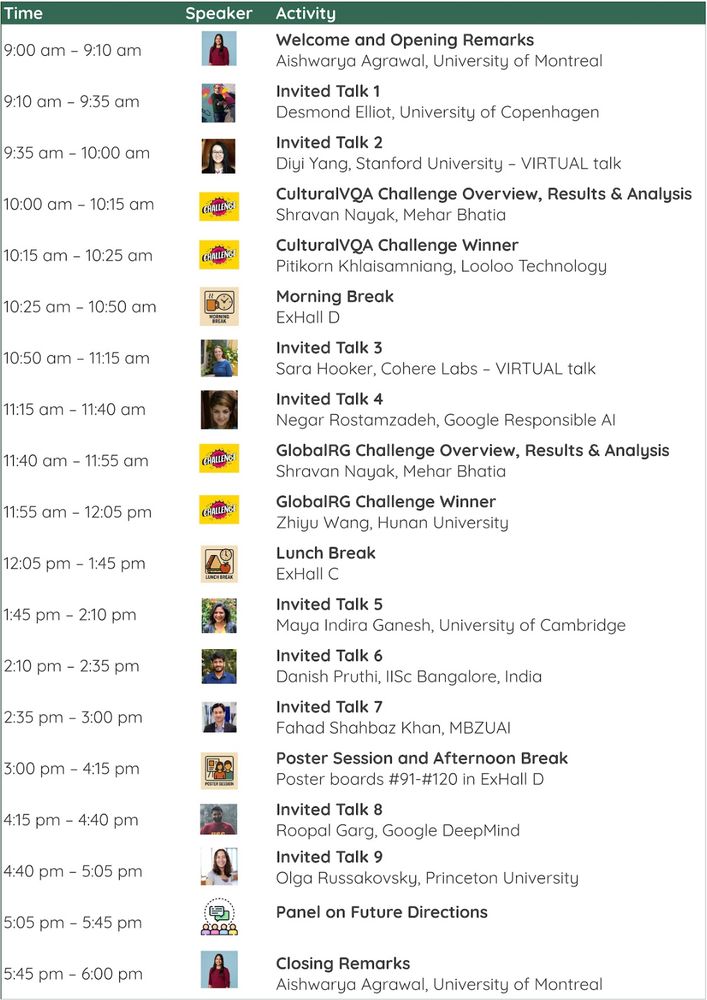

Get ready for a full day of speakers, posters, and a panel discussion on making VLMs more geo-diverse and culturally aware 🌐

Check out the schedule below!

simons.berkeley.edu/workshops/sa...

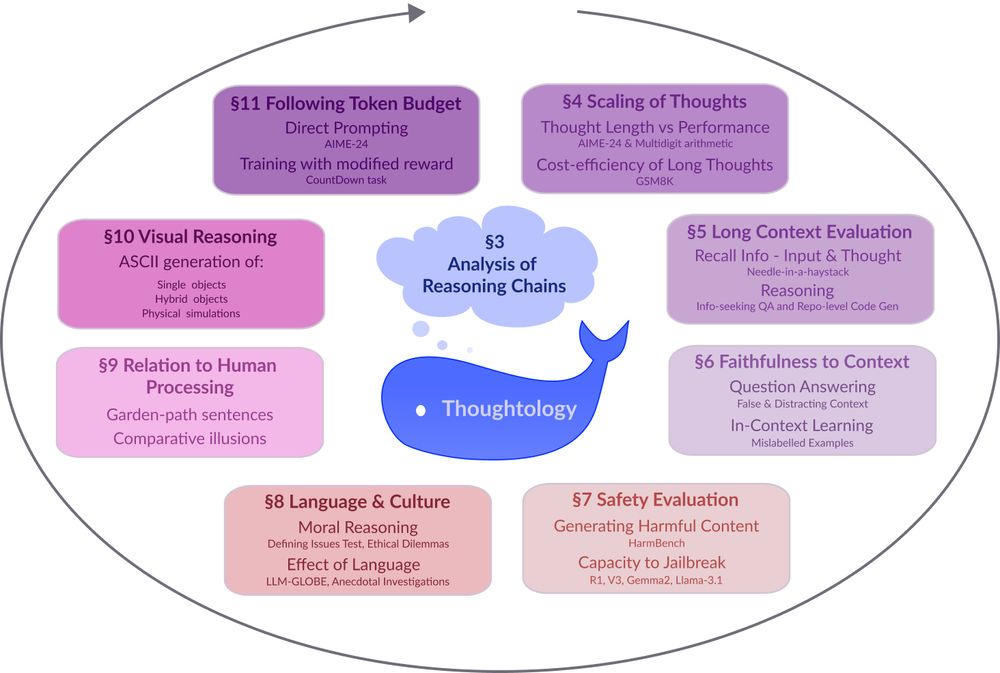

Everyone in the lab contributed different chapters and it was much more exploratory than your average phd project.

My chapter studied R1's reasoning on "image generation/editing" (via ASCII) 🧵👇

1/N

🔗: mcgill-nlp.github.io/thoughtology/

simons.berkeley.edu/workshops/fu...

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

Gemini's new editing capabilities are seriously impressive!

Playing around with it is quite fun...

Edit 1: "edit the image to contain 3 more people"

Incredible effort by @karstanczak.bsky.social in pulling together views from multiple disciplines and experts in these fields.

arxiv.org/abs/2503.00069

Human alignment balances social expectations, economic incentives, and legal frameworks. What if LLM alignment worked the same way?🤔

Our latest work explores how social, economic, and contractual alignment can address incomplete contracts in LLM alignment🧵

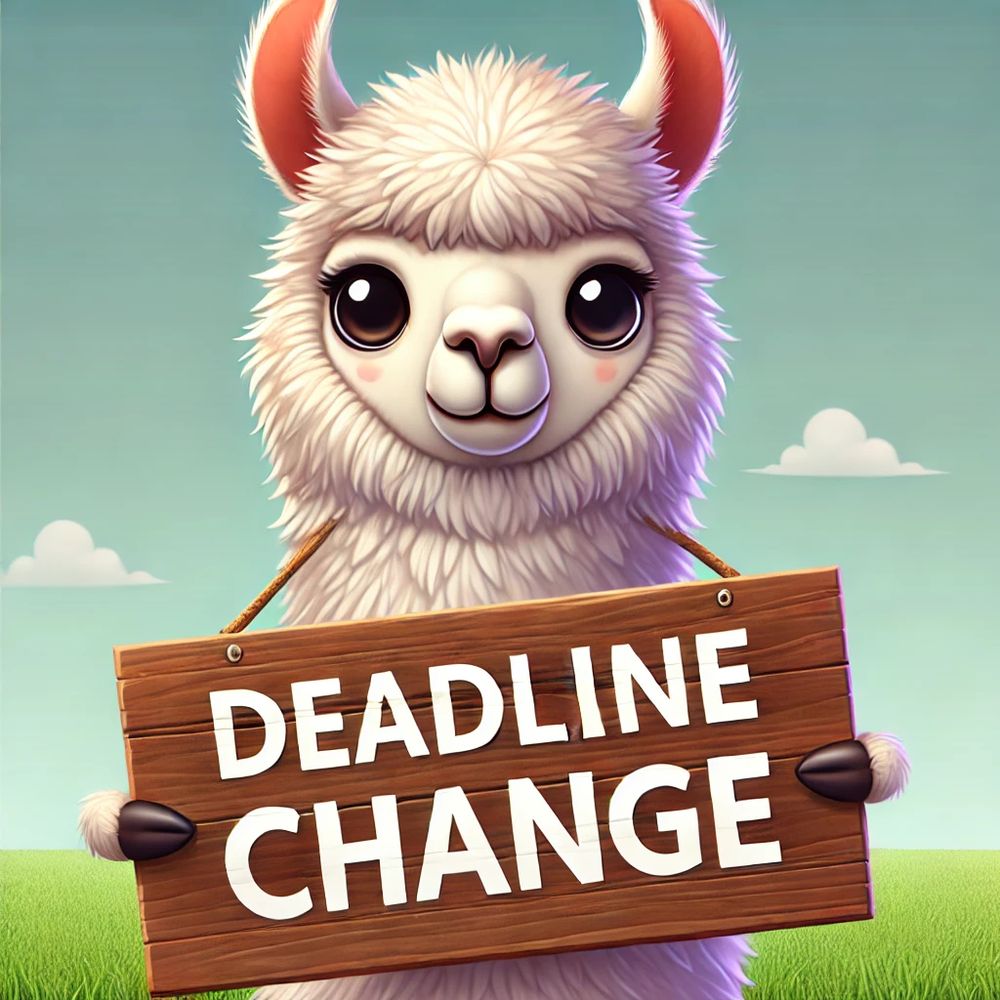

Problems for Evaluation? 🤔 Check out our CHASE recipe. A highly relevant problem given that most human-curated datasets are crushed within days.

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:

I'll be presenting AURORA at @neuripsconf.bsky.social on Wednesday!

Come by to discuss text-guided editing (and why imo it is more interesting than image generation), world modeling, evals and vision-and-language reasoning

We wondered if a model can do *controlled* video generation but in a *single* step?

So we built a dataset+model for “taking actions” on images via editing, or what you could call single-step controlled video gen

Super excited to share AURORA, a *general* image editing model + high-quality data that improves where prev work fails the most:

Performing *action or movement* edits, i.e. a kind of world model setup

Insights/Details ⬇️

@andreasmadsen.bsky.social

on successfully defending your PhD ⚔️ 🎉🎉 Grateful to you for stretching my interests into interpretability and engaging me with exciting ideas. Good luck with your mission of building faithfully interpretable models.

If you want the naive, training-free / model-agnostic approach: their related work section says it is most common to use the final token’s last hidden state.

Stage 1: 😍 I hope I learn something new

Stage 2: 🤗 I hope I am constructive enough while being critical. Submits review

Stage 3: 🤯 Receives 5 page response + revision with many new pages

Stage 4: 😱 Crap, how do I get out of this?

Stage 5: 😵‍💫 What year is it?

I can’t seem to find everyone though, help definitely appreciated to fill this out (DM or comment)!
