Ching Fang

@chingfang.bsky.social

320 followers

260 following

21 posts

Postdoc @Harvard interested in neuro-AI and neurotheory. Previously @columbia, @ucberkeley, and @apple. 🧠🧪🤖

Ching Fang

@chingfang.bsky.social

· Jun 26

From memories to maps: Mechanisms of in-context reinforcement learning in transformers

Humans and animals show remarkable learning efficiency, adapting to new environments with minimal experience. This capability is not well captured by standard reinforcement learning algorithms that re...

arxiv.org

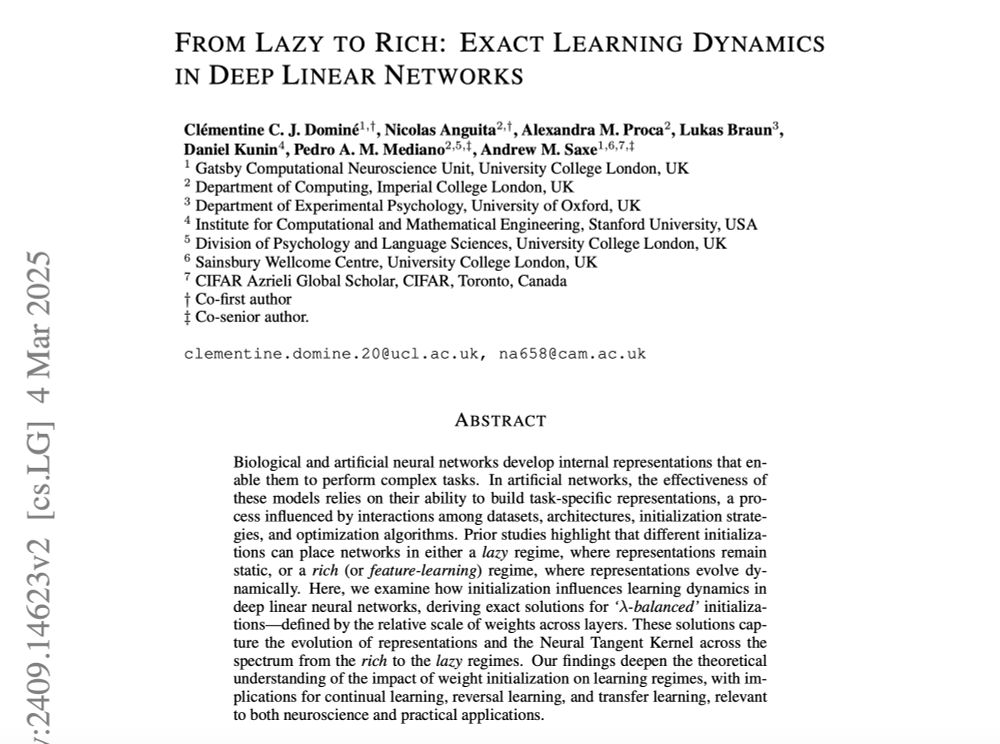

Reposted by Ching Fang

Jenelle Feather

@jfeather.bsky.social

· Apr 2

Reposted by Ching Fang

David G. Clark

@david-g-clark.bsky.social

· Mar 26

David G. Clark

@david-g-clark.bsky.social

· Jan 29

Symmetries and Continuous Attractors in Disordered Neural Circuits

A major challenge in neuroscience is reconciling idealized theoretical models with complex, heterogeneous experimental data. We address this challenge through the lens of continuous-attractor networks...

www.biorxiv.org

Reposted by Ching Fang