Clintin Davis-Stober

@clintin.bsky.social

2.4K followers

2.1K following

49 posts

Professor, quantitative psychology, decision theory, data science, mathematics, statistics, open science, modeling, weight lifting, photography, enjoyer of poetry

www.davis-stober.com

Reposted by Clintin Davis-Stober

Berna Devezer

@devezer.bsky.social

· Aug 14

John Kennedy

@micefearboggis.bsky.social

· Aug 13

How to science a science with science

The purest description of the scientific method I ever saw was in a novel by Kurt Vonnegut. A workman discovers that if he puts a bucket full of nuts and bolts on one of the many supporting struts …

diagrammonkey.wordpress.com

Reposted by Clintin Davis-Stober

Carl T. Bergstrom

@carlbergstrom.com

· Aug 12

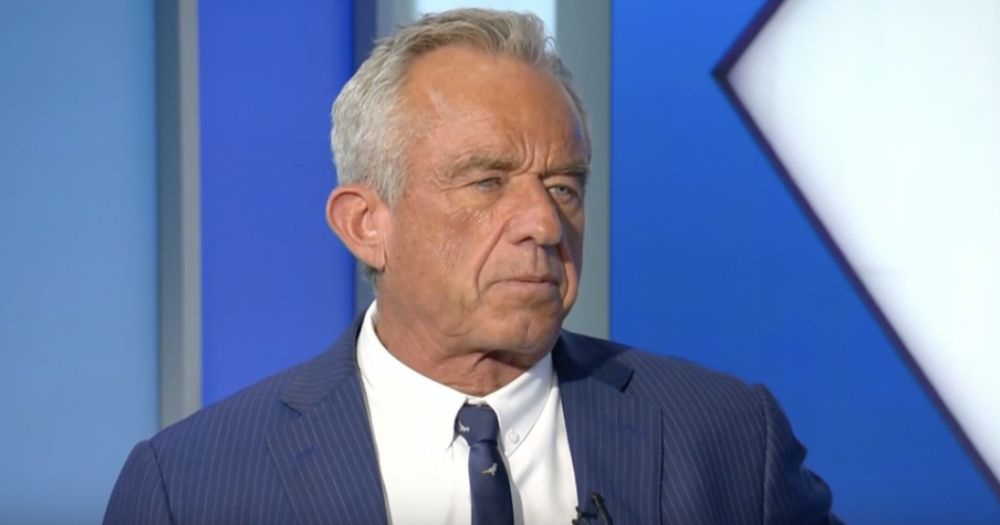

RFK Jr. in interview with Scripps News: ‘Trusting the experts is not science’

HHS Secretary RFK Jr. sat down with Scripps News for a wide-ranging interview, discussing mRNA vaccine funding policy changes and a recent shooting at the Centers for Disease Control and Prevention.

www.scrippsnews.com

Reposted by Clintin Davis-Stober

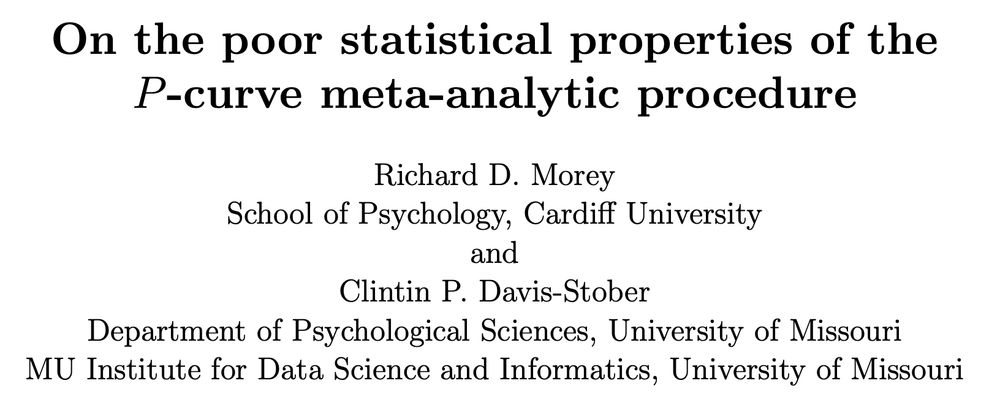

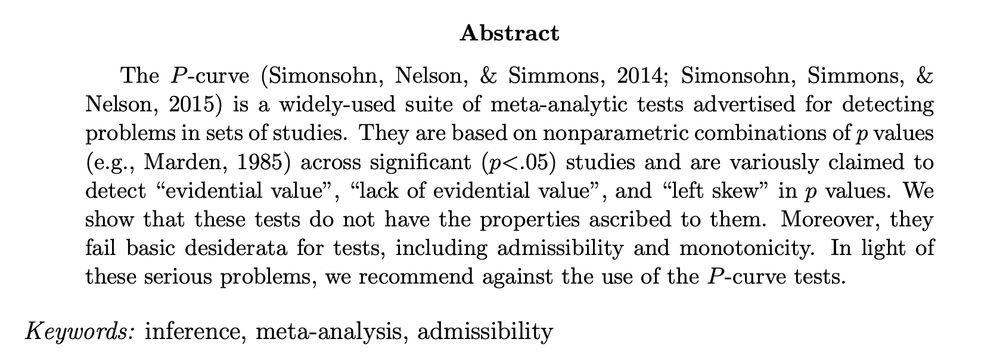

Clintin Davis-Stober

@clintin.bsky.social

· Aug 10

Reposted by Clintin Davis-Stober

Berna Devezer

@devezer.bsky.social

· Aug 10

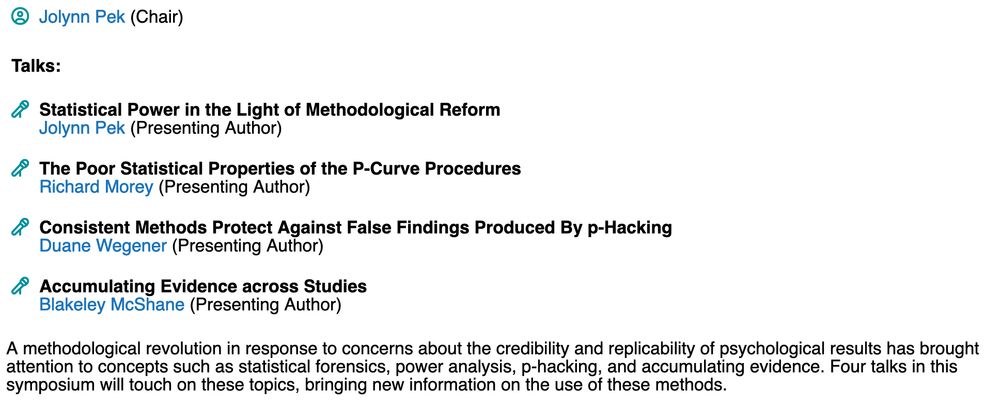

The case for formal methodology in scientific reform | Royal Society Open Science

Current attempts at methodological reform in sciences come in response to an overall lack of rigor in methodological and scientific practices in experimental sciences. However, most methodological ref...

share.google

Reposted by Clintin Davis-Stober

Berna Devezer

@devezer.bsky.social

· Aug 10

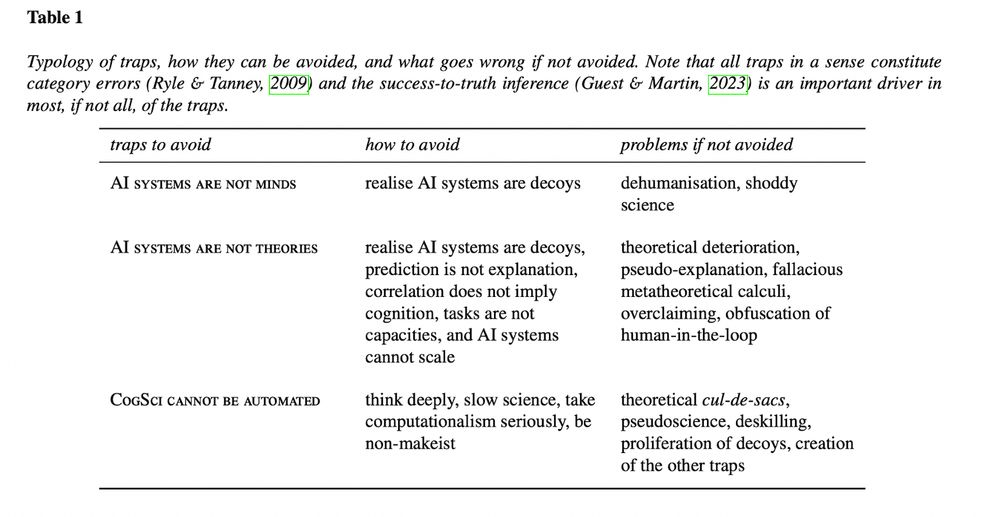

Clintin Davis-Stober

@clintin.bsky.social

· Aug 10

Reposted by Clintin Davis-Stober

Daniel Heck

@danielheck.bsky.social

· Jun 12

Clintin Davis-Stober

@clintin.bsky.social

· Jun 11

Reposted by Clintin Davis-Stober

Clintin Davis-Stober

@clintin.bsky.social

· May 20

Reposted by Clintin Davis-Stober