--The Diplomat, S2 E4

(I've been binge-watching this, and it is excellent.)

www.metacareers.com/jobs/1459691...

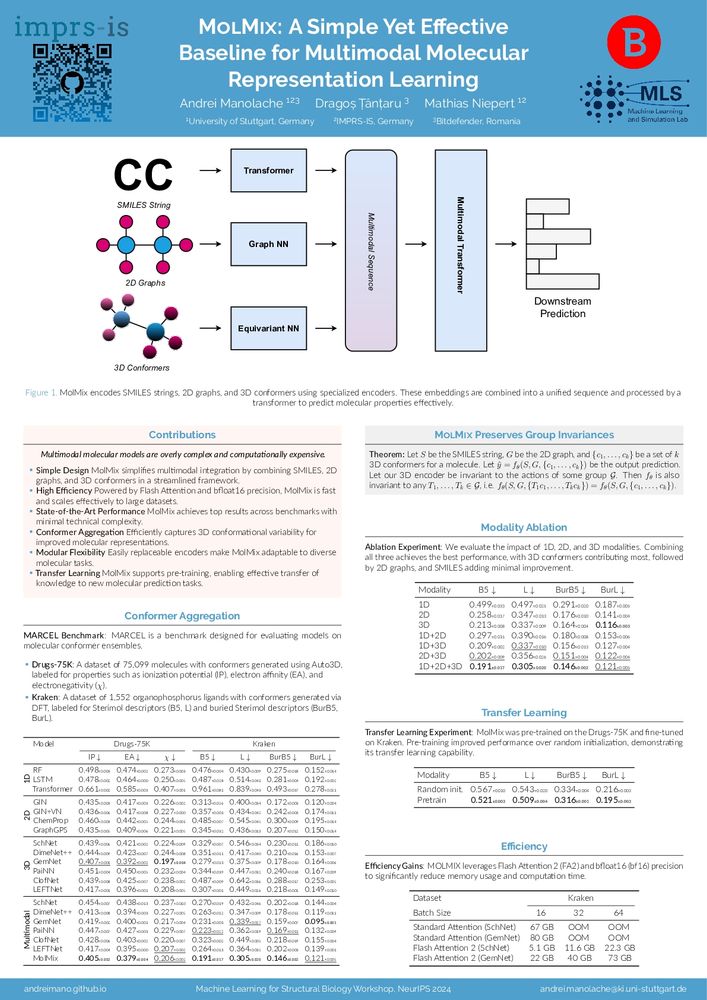

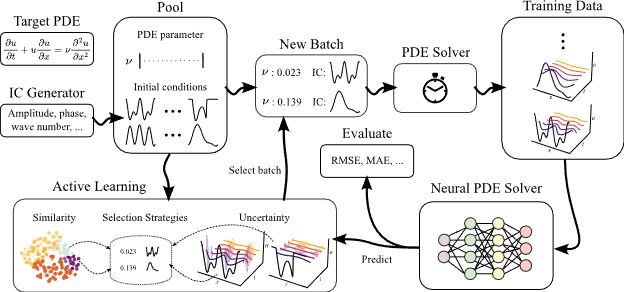

arxiv.org/abs/2405.06161

"We are trying to solve a problem that's too big and refuse to concretize it to make it actually tractable."

"I think RL's too elegant. … It draws us all in with its elegance, and then we get hit with all the other issues that you probably heard from everyone else."

Usual suspects: training brittleness (overreliance on hyperparameter tuning), bad & slow sims, overemphasis on generality, LLMs dominating discourse, tabula rasa RL is hard

What do RL researchers complain about after hours at the bar? In this "Hot takes" episode, we find out!

Recorded at The Pearl in downtown Vancouver, during the RL meetup after a day of NeurIPS 2024.

Lol

also the feds: *treat him like they’ve captured the joker*

Interested in molecular representation learning? Let’s chat 👋!

In "Learning on compressed molecular representations" Jan Weinreich and I looked into whether GZIP could outperform neural networks on chemical machine learning tasks. Yes, you've read that right.

TL;DR: Yes, GZIP can perform better than baseline GNNs and MLPs. It can ..
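
The post doesn't spell out the method, but a common way to turn gzip into a learner is normalized compression distance (NCD) plus k-nearest neighbors, the trick popularized for gzip-based text classification. Here's a minimal Python sketch under that assumption, not necessarily what the paper does. The toy training set, its coarse structural labels, `k=3`, and the helper names (`compressed_len`, `ncd`, `knn_classify`) are all hypothetical choices for illustration.

```python
import gzip
from collections import Counter


def compressed_len(s: str) -> int:
    """Length in bytes of the gzip-compressed UTF-8 encoding of s."""
    return len(gzip.compress(s.encode("utf-8")))


def ncd(a: str, b: str) -> float:
    """Normalized compression distance: smaller when a and b share substrings."""
    ca, cb = compressed_len(a), compressed_len(b)
    # Concatenate with a space separator, as in gzip+kNN text classifiers.
    cab = compressed_len(a + " " + b)
    return (cab - min(ca, cb)) / max(ca, cb)


def knn_classify(query: str, train: list[tuple[str, str]], k: int = 3) -> str:
    """Majority vote over the k training SMILES closest to query by NCD."""
    neighbors = sorted(train, key=lambda item: ncd(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical toy training set: SMILES strings with coarse structural labels.
TRAIN = [
    ("CCO", "aliphatic alcohol"),    # ethanol
    ("CCCO", "aliphatic alcohol"),   # 1-propanol
    ("CCCCO", "aliphatic alcohol"),  # 1-butanol
    ("c1ccccc1", "aromatic"),        # benzene
    ("Cc1ccccc1", "aromatic"),       # toluene
    ("Oc1ccccc1", "aromatic"),       # phenol
]

if __name__ == "__main__":
    print(knn_classify("CCCCCCO", TRAIN))     # 1-hexanol
    print(knn_classify("CCc1ccccc1", TRAIN))  # ethylbenzene
```

Replacing the majority vote with an average of the neighbors' values turns this into a regressor for continuous properties. For molecules this small, gzip's fixed 18-byte header and trailer make up most of each compressed length, so the distances are coarse.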

x.com/_jasonwei/st...

- pretraining with human (?) prefs, merging pre and post training

- online exploration for (synthetic) data collection

- Precalculus: a modern approach with a lot of calculus

- An introduction to probability with only a gentle amount of gaslighting

- Category Theory