Daniel Wurgaft

@danielwurgaft.bsky.social

120 followers

190 following

26 posts

PhD @Stanford working w Noah Goodman

Studying in-context learning and reasoning in humans and machines

Prev. @UofT CS & Psych

Posts

Media

Videos

Starter Packs

Reposted by Daniel Wurgaft

Reposted by Daniel Wurgaft

Reposted by Daniel Wurgaft

Reposted by Daniel Wurgaft

Reposted by Daniel Wurgaft

Reposted by Daniel Wurgaft

Sam Gershman

@gershbrain.bsky.social

· Jul 8

Reposted by Daniel Wurgaft

Andrew Lampinen

@lampinen.bsky.social

· Jun 28

Daniel Wurgaft

@danielwurgaft.bsky.social

· Jun 28

Daniel Wurgaft

@danielwurgaft.bsky.social

· Jun 28

Daniel Wurgaft

@danielwurgaft.bsky.social

· Jun 28

In-Context Learning Strategies Emerge Rationally

Recent work analyzing in-context learning (ICL) has identified a broad set of strategies that describe model behavior in different experimental conditions. We aim to unify these findings by asking why...

arxiv.org

Daniel Wurgaft

@danielwurgaft.bsky.social

· Jun 28

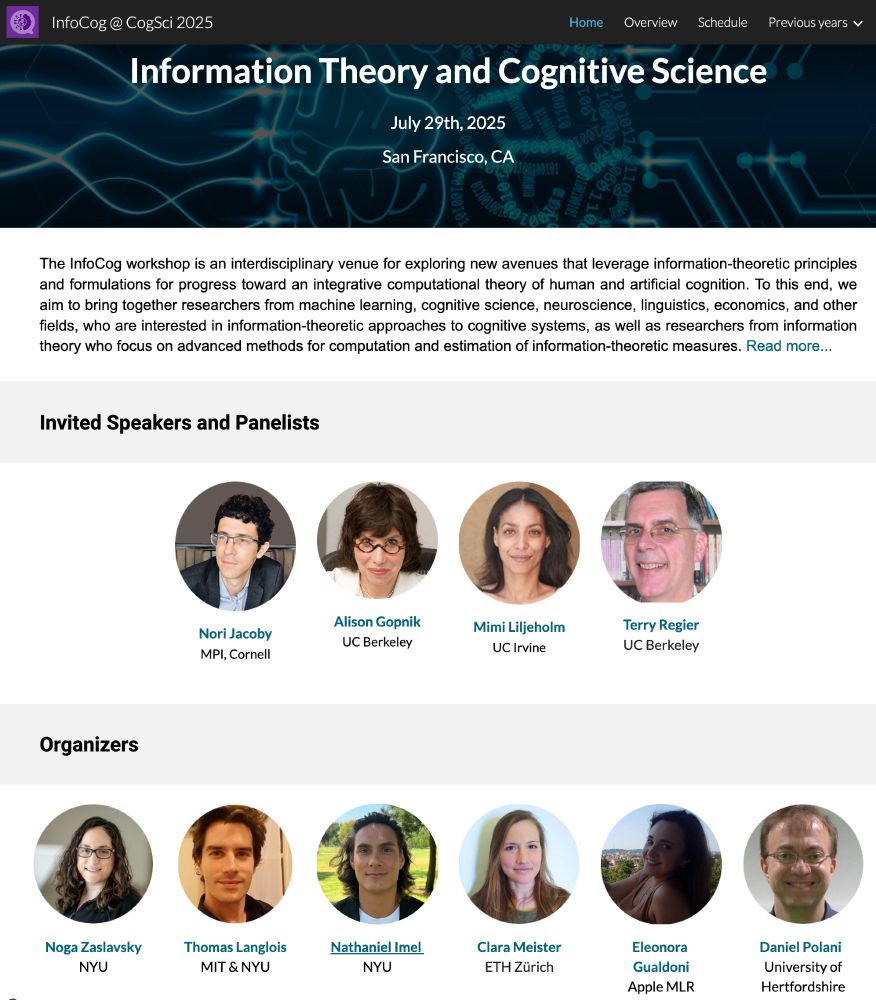

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)