Check out our paper and code below 🚀

📄 Paper: arxiv.org/abs/2505.24671

🤖 Dataset: github.com/dayeonki/cul...

Some exciting future directions:

1️⃣ Assigning specific roles to represent diverse cultural perspectives

2️⃣ Discovering optimal strategies for multi-LLM collaboration

3️⃣ Designing better adjudication methods to resolve disagreements fairly 🤝

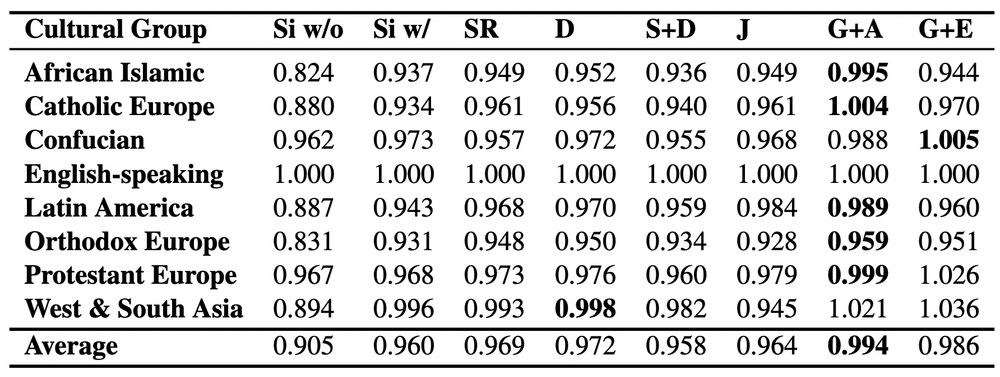

🫂 We measure cultural parity across diverse groups — and find that Multi-Agent Debate not only boosts average accuracy but also leads to more equitable cultural alignment 🌍

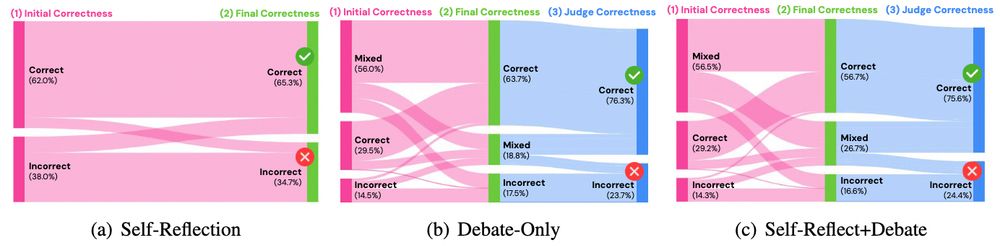

We track three phases of LLM behavior:

💗 Initial decision correctness

💚 Final decision correctness

💙 Judge’s decision correctness

✨ Multi-Agent Debate is most valuable when models initially disagree!
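To make the three phases concrete, here is a minimal sketch of how they could be logged for one question. It is not the paper's implementation: the `Agent` type, the prompt wording, and the single debate round are all assumptions for illustration, and the agents are plain callables standing in for real LLM calls.

```python
from typing import Callable, Dict, List

# Hypothetical agent type: takes a prompt, returns a short decision string.
Agent = Callable[[str], str]


def debate(question: str, agent_a: Agent, agent_b: Agent, judge: Agent,
           rounds: int = 1) -> Dict[str, object]:
    """Record initial, final, and judge decisions for one question."""
    # Phase 1: each agent answers independently.
    initial = [agent_a(question), agent_b(question)]
    final = list(initial)

    # Phase 2: each agent sees the other's previous decision and may revise.
    for _ in range(rounds):
        prev = list(final)
        final[0] = agent_a(f"{question}\nOther agent said: {prev[1]}")
        final[1] = agent_b(f"{question}\nOther agent said: {prev[0]}")

    # Phase 3: a judge reads both final decisions and returns one verdict.
    verdict = judge(f"{question}\nA: {final[0]}\nB: {final[1]}")

    return {
        "initial": initial,
        "final": final,
        "judge": verdict,
        "disagreed": initial[0] != initial[1],
    }


def accuracy(decisions: List[str], gold: List[str]) -> float:
    """Fraction of decisions that match the gold labels."""
    return sum(d == g for d, g in zip(decisions, gold)) / len(gold)
```

Keeping the `disagreed` flag in the log makes it easy to split accuracy by whether the agents initially agreed.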

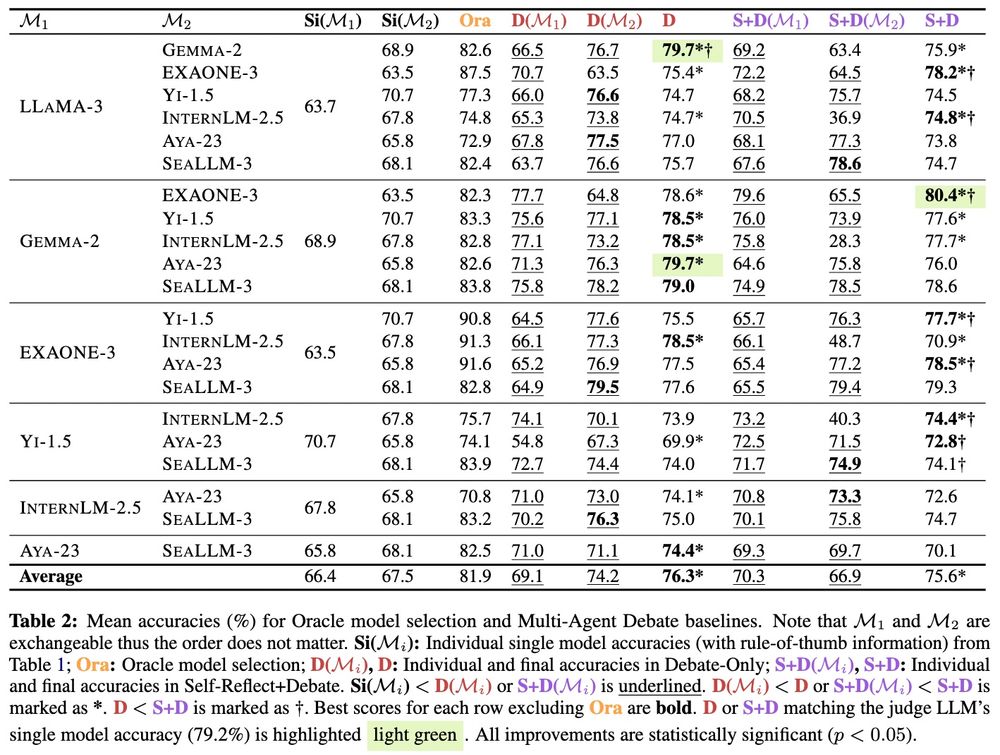

We find that:

🤯 Multi-Agent Debate lets smaller LLMs (7B) match the performance of much larger ones (27B)

🏆 Best combo? Gemma-2 9B + EXAONE-3 7B 💪

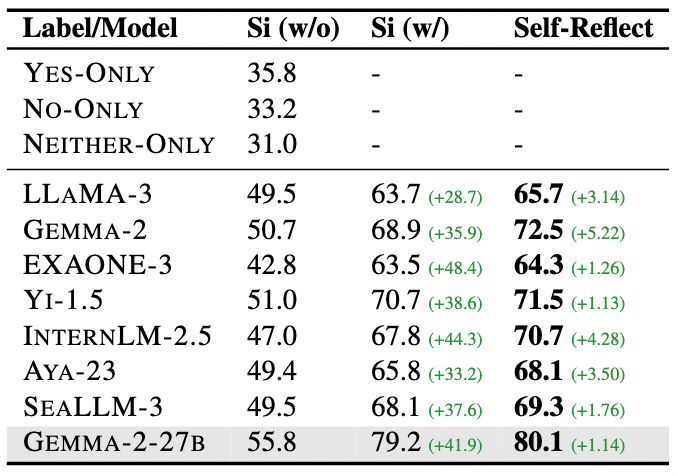

1️⃣ Cultural Contextualization: adding relevant rules-of-thumb for the target culture

2️⃣ Self-Reflection: evaluating and improving its own outputs

These serve as strong baselines before we introduce collaboration 🤝

Different LLMs bring complementary perspectives and reasoning paths, thanks to variations in:

💽 Training data

🧠 Alignment processes

🌐 Language and cultural coverage

We explore one common form of collaboration: debate.

We’d love for you to check it out 🚀

📄 Paper: arxiv.org/abs/2504.11582

🤖 Dataset: github.com/dayeonki/askqe

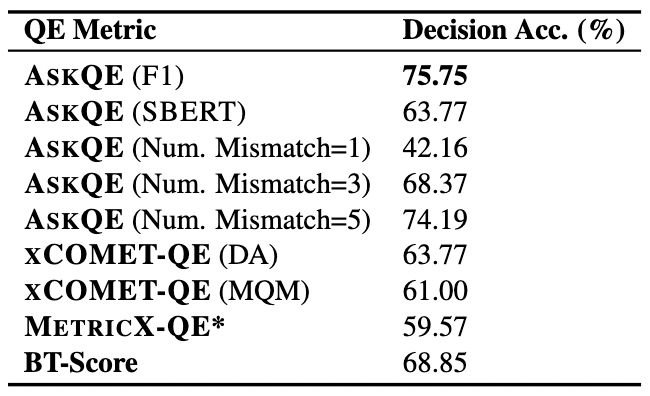

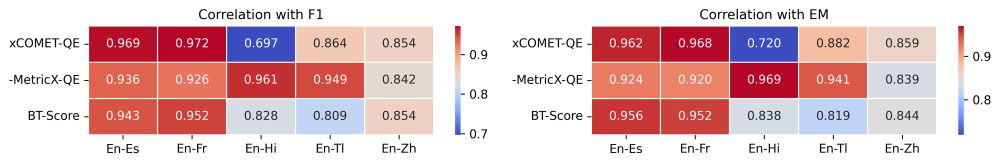

Yes! It shows:

💁‍♀️ Stronger correlation with human judgments

✅ Better decision-making accuracy than standard QE metrics

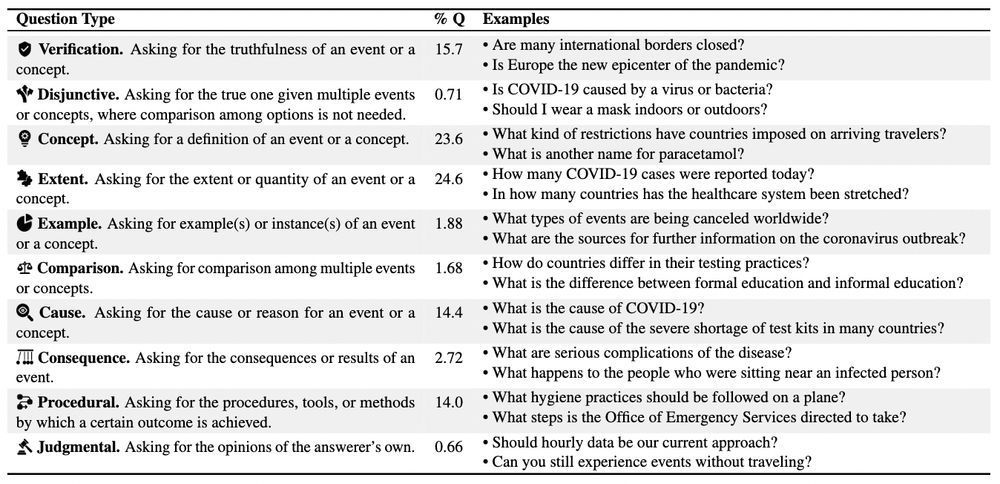

Most commonly:

📏 Extent — How many COVID-19 cases were reported today? (24.6%)

💡 Concept — What is another name for paracetamol? (23.6%)

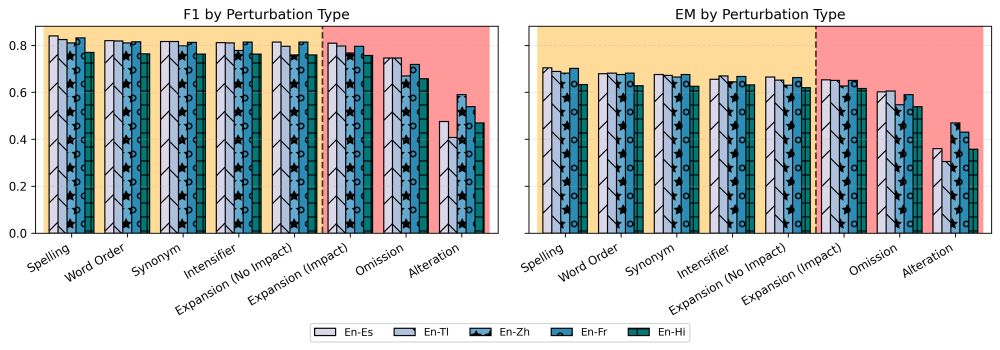

📉 It effectively distinguishes minor from critical translation errors

👭 It aligns closely with established quality estimation (QE) metrics

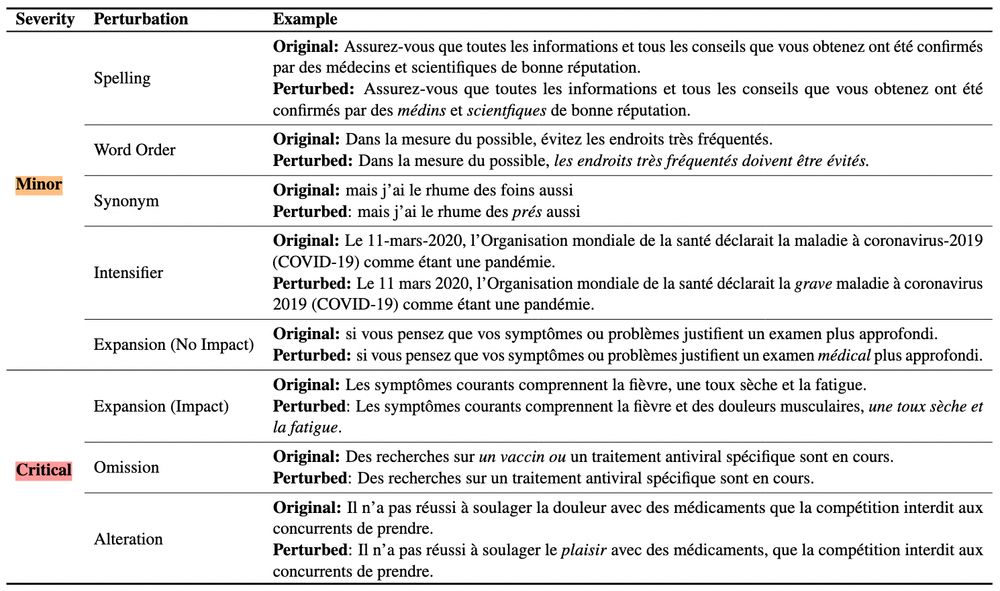

⚠️ Minor errors: spelling, word order, synonym, intensifier, expansion (no impact)

📛 Critical errors: expansion (impact), omission, alteration

❓ Question Generation (QG): conditioned on the source + its entailed facts

❕ Question Answering (QA): based on the source and backtranslated MT

If the answers don’t match... there's likely an error ⚠️
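The answer-matching step could look roughly like the sketch below. The comparison metric here is an assumption: token-level F1 is used as a simple stand-in for whatever answer-similarity measure is actually applied, and `flag_mismatches` and its `threshold` are hypothetical names chosen for illustration.

```python
from collections import Counter
from typing import List, Tuple


def token_f1(a: str, b: str) -> float:
    """Token-level F1 between two answers (case-insensitive, whitespace split)."""
    ta, tb = a.lower().split(), b.lower().split()
    if not ta or not tb:
        # Two empty answers count as a match; one empty answer does not.
        return float(ta == tb)
    overlap = sum((Counter(ta) & Counter(tb)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(tb), overlap / len(ta)
    return 2 * precision * recall / (precision + recall)


def flag_mismatches(src_answers: List[str], bt_answers: List[str],
                    threshold: float = 0.5) -> List[Tuple[int, float]]:
    """Return (question index, score) for answer pairs scoring below threshold.

    src_answers: answers obtained from the source text.
    bt_answers:  answers to the same questions from the backtranslated MT.
    """
    flagged = []
    for i, (src, bt) in enumerate(zip(src_answers, bt_answers)):
        score = token_f1(src, bt)
        if score < threshold:
            flagged.append((i, score))
    return flagged
```

Any question that ends up in the flagged list points to the part of the translation worth a second look.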

1️⃣ Provides functional explanations of MT quality

2️⃣ Users can weigh the evidence based on their own judgment

3️⃣ Aligns well with real-world cross-lingual communication strategies 🌐

Please check out our paper accepted to NAACL 2025 🚀

📄 Paper: arxiv.org/abs/2502.16682

🤖 Code: github.com/dayeonki/rew...

As LLM-based writing assistants grow, we encourage future work on interactive, rewriting-based approaches to MT 🫡

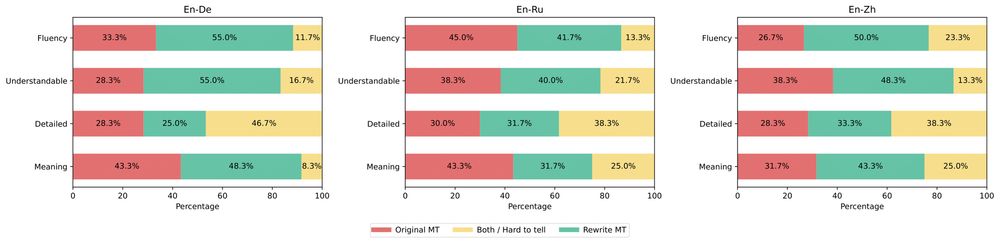

Yes! They rated these to be:

📝 More contextually appropriate

👁️ Easier to read

🤗 More comprehensible

compared to translations of original inputs!

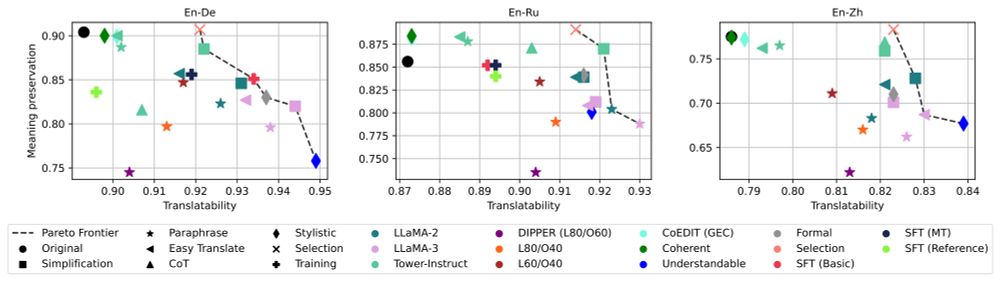

Here are 3 key findings:

1️⃣ Better translatability trades off against meaning preservation

2️⃣ Simplification boosts both input & output readability 📖

3️⃣ Input rewriting > Output post-editing 🤯

Yes! By selecting rewrites based on translatability scores at inference time, we outperform all other methods 🔥
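Selection at inference time could be sketched as below. This is an illustration under assumptions, not the released code: `score` is a hypothetical callable standing in for a translatability scorer, and keeping the original input as a fallback candidate is a design choice made here.

```python
from typing import Callable, Sequence


def select_rewrite(original: str, rewrites: Sequence[str],
                   score: Callable[[str], float]) -> str:
    """Return the highest-scoring candidate, keeping the original on ties.

    `score` is a placeholder for a translatability scorer (higher is better).
    """
    best, best_score = original, score(original)
    for candidate in rewrites:
        candidate_score = score(candidate)
        # Only switch when a rewrite is strictly better than the current best.
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best
```

Treating the original as a candidate means a rewrite is only used when it is predicted to translate better than the untouched input.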
