Emerson Harkin

@efharkin.bsky.social

130 followers

85 following

45 posts

Computational neuroscience post doc interested in serotonin | Dayan lab @mpicybernetics.bsky.social | 🇨🇦 in 🇩🇪

Pinned

Emerson Harkin

@efharkin.bsky.social

· Mar 27

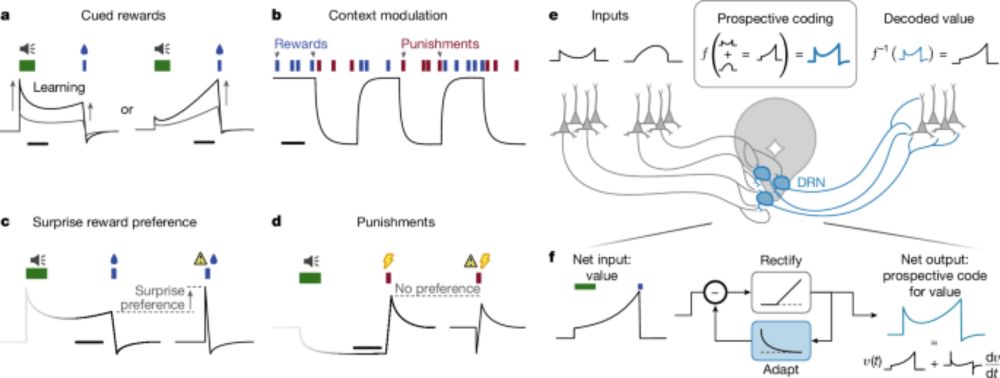

A prospective code for value in the serotonin system - Nature

Merging ideas from reinforcement learning theory with recent insights into the filtering properties of the dorsal raphe nucleus, a unifying perspective is found explaining why serotonin neurons are ac...

doi.org

Reposted by Emerson Harkin

Open Mind

@openmindjournal.bsky.social

· Aug 23

Merits of Curiosity: A Simulation Study

Abstract: ‘Why are we curious?’ has been among the central puzzles of neuroscience and psychology in the past decades. A popular hypothesis is that curiosity is driven by intrinsically generated reward signals, which have evolved to support survival in complex environments. To formalize and test this hypothesis, we need to understand the enigmatic relationship between (i) intrinsic rewards (as drives of curiosity), (ii) optimality conditions (as objectives of curiosity), and (iii) environment structures. Here, we demystify this relationship through a systematic simulation study. First, we propose an algorithm to generate environments that capture key abstract features of different real-world situations. Then, we simulate artificial agents that explore these environments by seeking one of six representative intrinsic rewards: novelty, surprise, information gain, empowerment, maximum occupancy principle, and successor-predecessor intrinsic exploration. We evaluate the exploration performance of these simulated agents regarding three potential objectives of curiosity: state discovery, model accuracy, and uniform state visitation. Our results show that the comparative performance of each intrinsic reward is highly dependent on the environmental features and the curiosity objective; this indicates that ‘optimality’ in top-down theories of curiosity needs a precise formulation of assumptions. Nevertheless, we found that agents seeking a combination of novelty and information gain always achieve a close-to-optimal performance on objectives of curiosity as well as in collecting extrinsic rewards. This suggests that novelty and information gain are two principal axes of curiosity-driven behavior. These results pave the way for the further development of computational models of curiosity and the design of theory-informed experimental paradigms.

dlvr.it

Emerson Harkin

@efharkin.bsky.social

· Aug 8

Reposted by Emerson Harkin

Guido Meijer

@guidomeijer.com

· Aug 5

Serotonin drives choice-independent reconfiguration of distributed neural activity

Serotonin (5-HT) is a central neuromodulator which is implicated in, amongst other functions, cognitive flexibility. 5-HT is released from the dorsal raphe nucleus (DRN) throughout nearly the entire f...

doi.org

Emerson Harkin

@efharkin.bsky.social

· Jul 23

Reposted by Emerson Harkin

Fernando Rosas

@frosas.bsky.social

· Jul 22

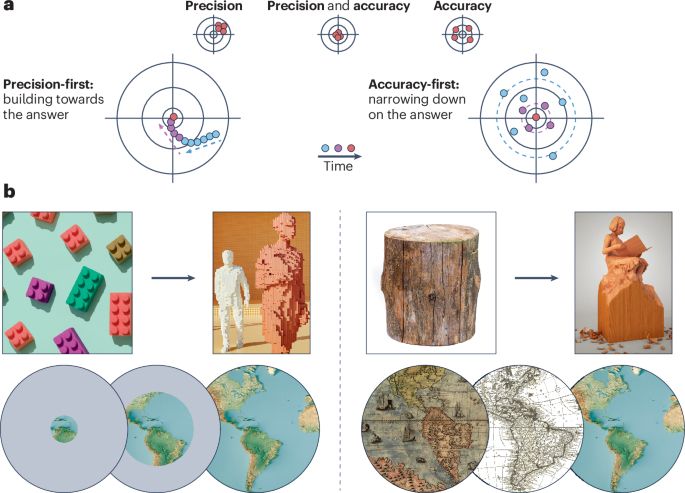

Top-down and bottom-up neuroscience: overcoming the clash of research cultures - Nature Reviews Neuroscience

As scientists, we want solid answers, but we also want to answer questions that matter. Yet, the brain’s complexity forces trade-offs between these desiderata, bringing about two distinct research app...

www.nature.com

Emerson Harkin

@efharkin.bsky.social

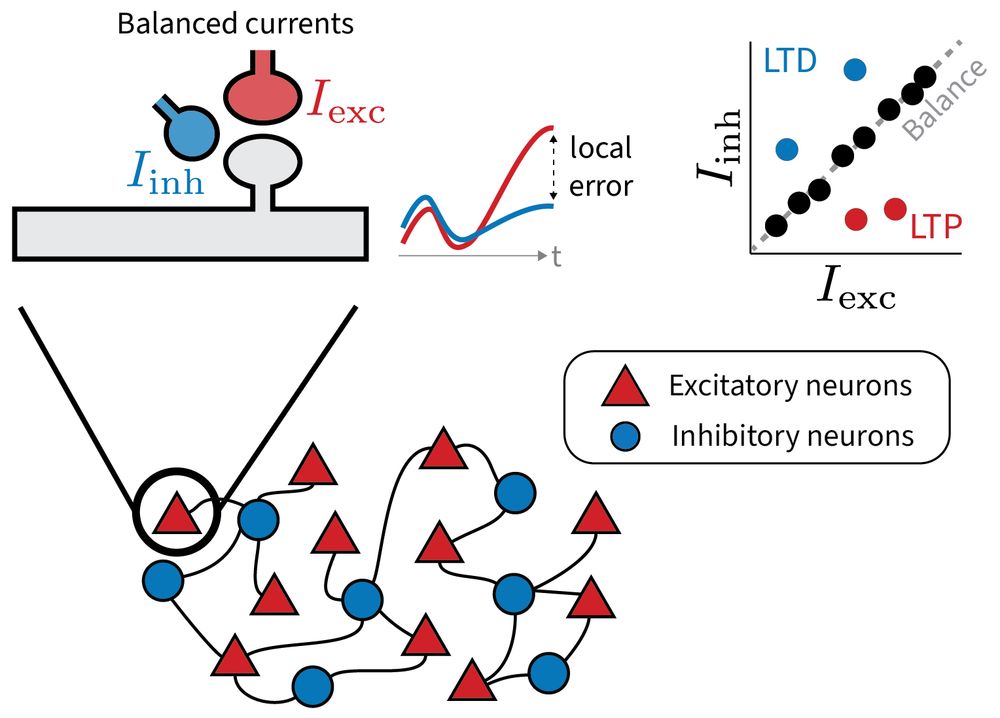

· Jun 6

Emerson Harkin

@efharkin.bsky.social

· Jun 5

Emerson Harkin

@efharkin.bsky.social

· Jun 5

A multidimensional distributional map of future reward in dopamine neurons

Nature - An algorithm called time–magnitude reinforcement learning (TMRL) extends distributional reinforcement learning to take account of reward time and magnitude, and behavioural and...

rdcu.be

Reposted by Emerson Harkin

Emerson Harkin

@efharkin.bsky.social

· May 25

Emerson Harkin

@efharkin.bsky.social

· May 22

Reposted by Emerson Harkin

Ida Momennejad

@neuroai.bsky.social

· May 9

Reposted by Emerson Harkin

Patrick Davidson

@pdav.bsky.social

· May 6

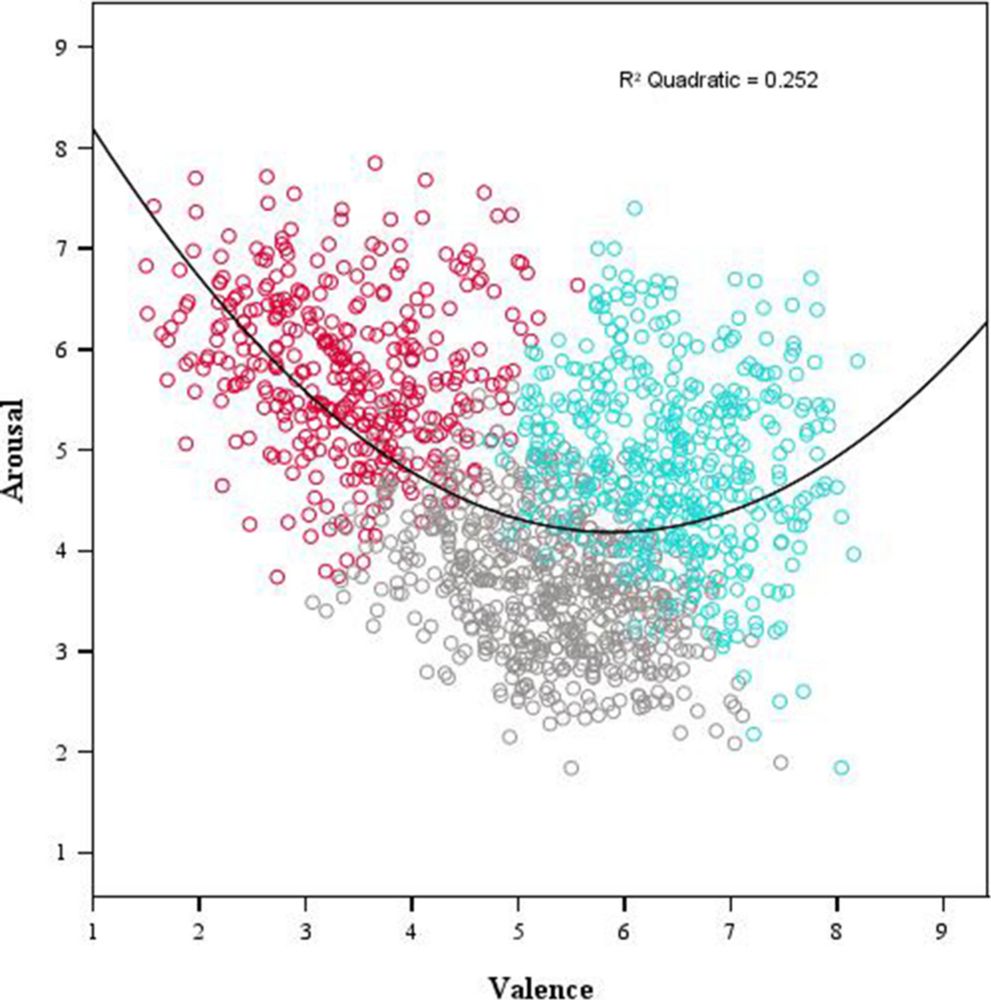

The Second Database of Emotional Videos from Ottawa (DEVO-2): Over 1300 brief video clips rated on valence, arousal, impact, and familiarity - Behavior Research Methods

We introduce an updated set of video clips for research on emotion and its relations with perception, cognition, and behavior. These 1380 brief video clips each portray realistic episodes. They were s...

link.springer.com

Emerson Harkin

@efharkin.bsky.social

· May 6