Friedemann Zenke

@fzenke.bsky.social

650 followers · 310 following · 13 posts

Computational neuroscientist at the FMI.

www.zenkelab.org

Posts

Reposted by Friedemann Zenke

Alexandra Bendel

@alexbendel.bsky.social

· Aug 25

The genetic architecture of the human bZIP family

Generative biology holds the promise to transform our ability to design and understand living systems by creating novel proteins, pathways, and organisms with tailored functions that address challenge...

www.biorxiv.org

Reposted by Friedemann Zenke

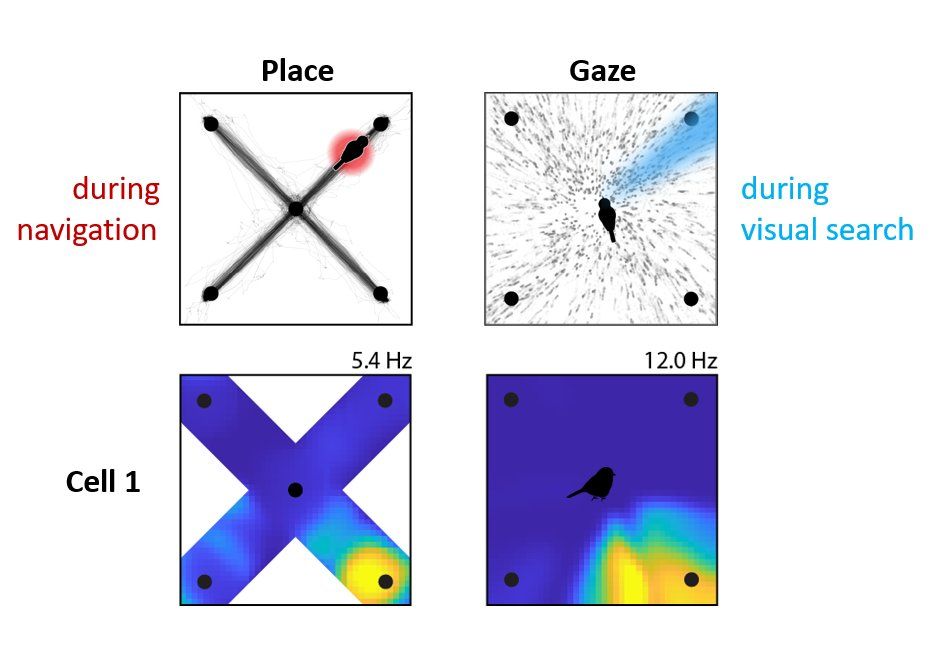

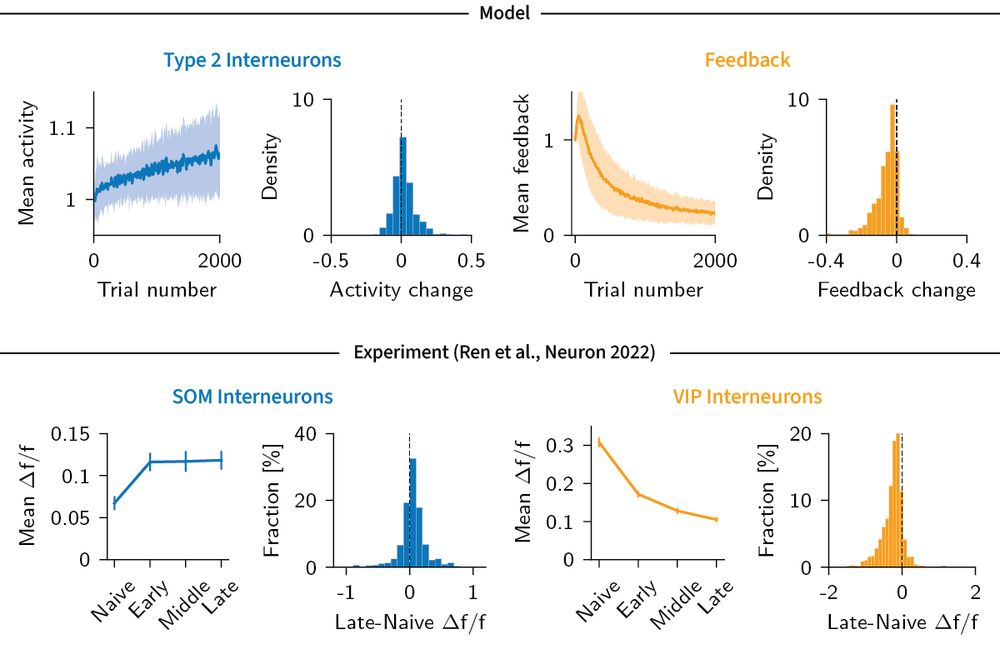

Georg Keller

@georgkeller.bsky.social

· Jul 14

Reposted by Friedemann Zenke

Tim Vogels

@tpvogels.bsky.social

· Jun 2

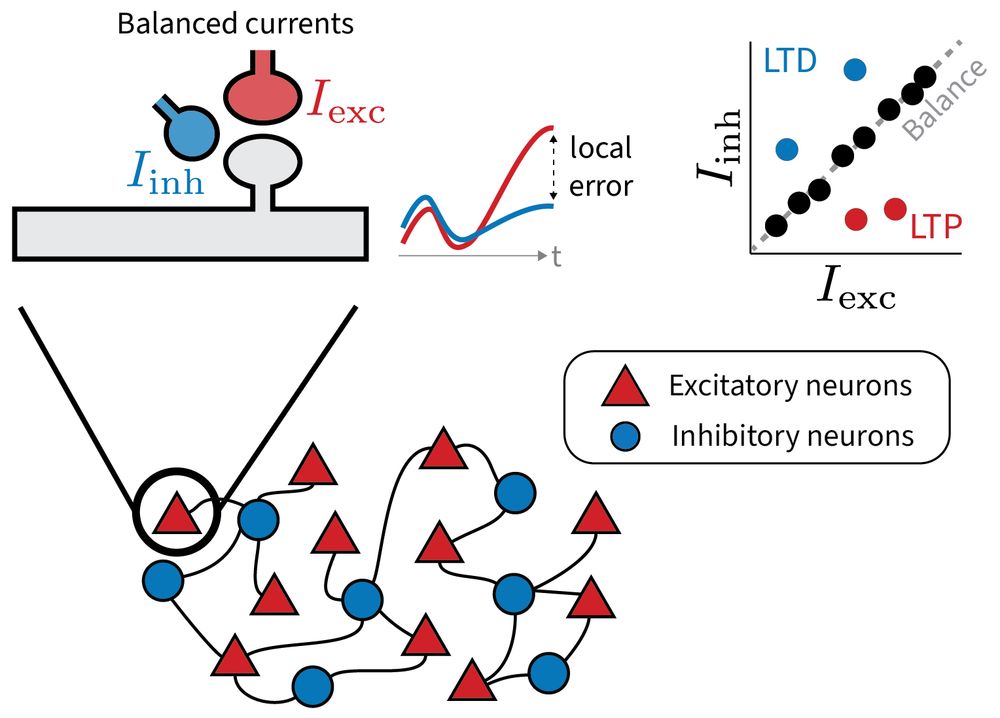

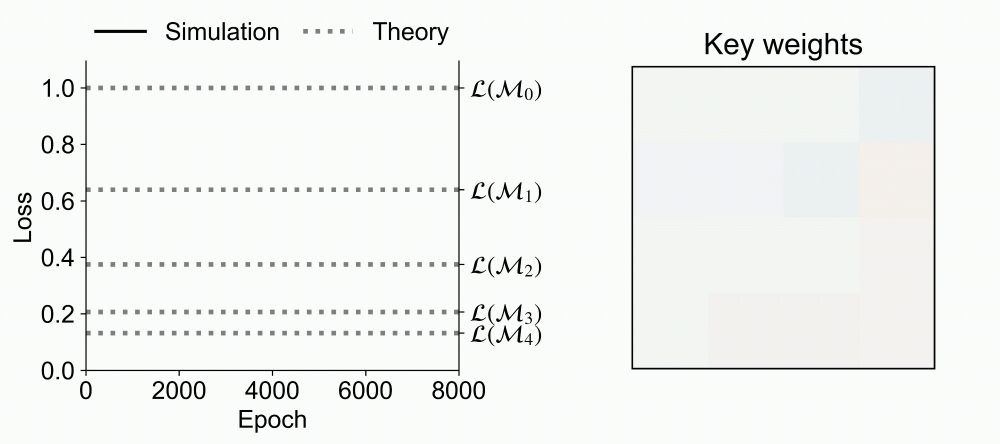

Memory by a thousand rules: Automated discovery of functional multi-type plasticity rules reveals variety & degeneracy at the heart of learning

Synaptic plasticity is the basis of learning and memory, but the link between synaptic changes and neural function remains elusive. Here, we used automated search algorithms to obtain thousands of str...

www.biorxiv.org

Reposted by Friedemann Zenke

FMI science

@fmiscience.bsky.social

· May 28

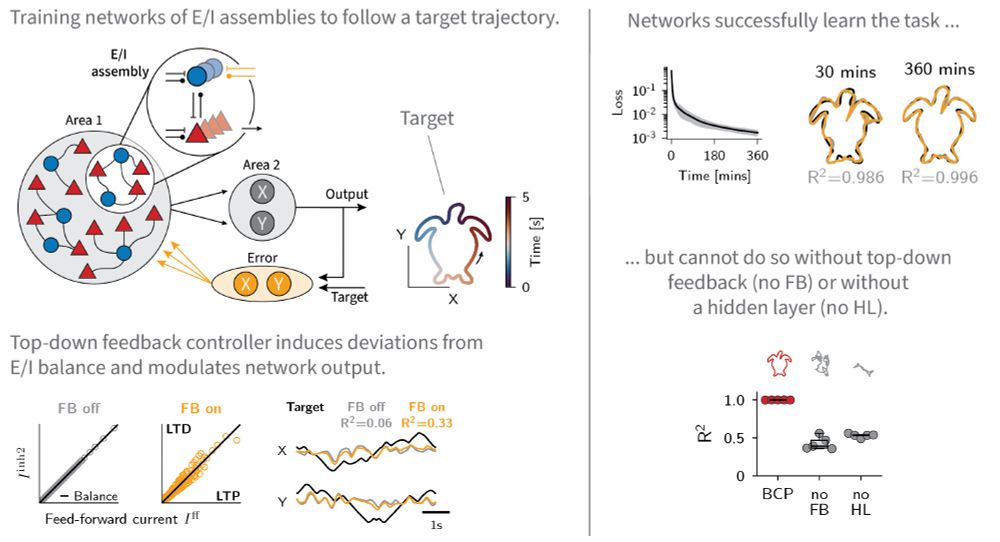

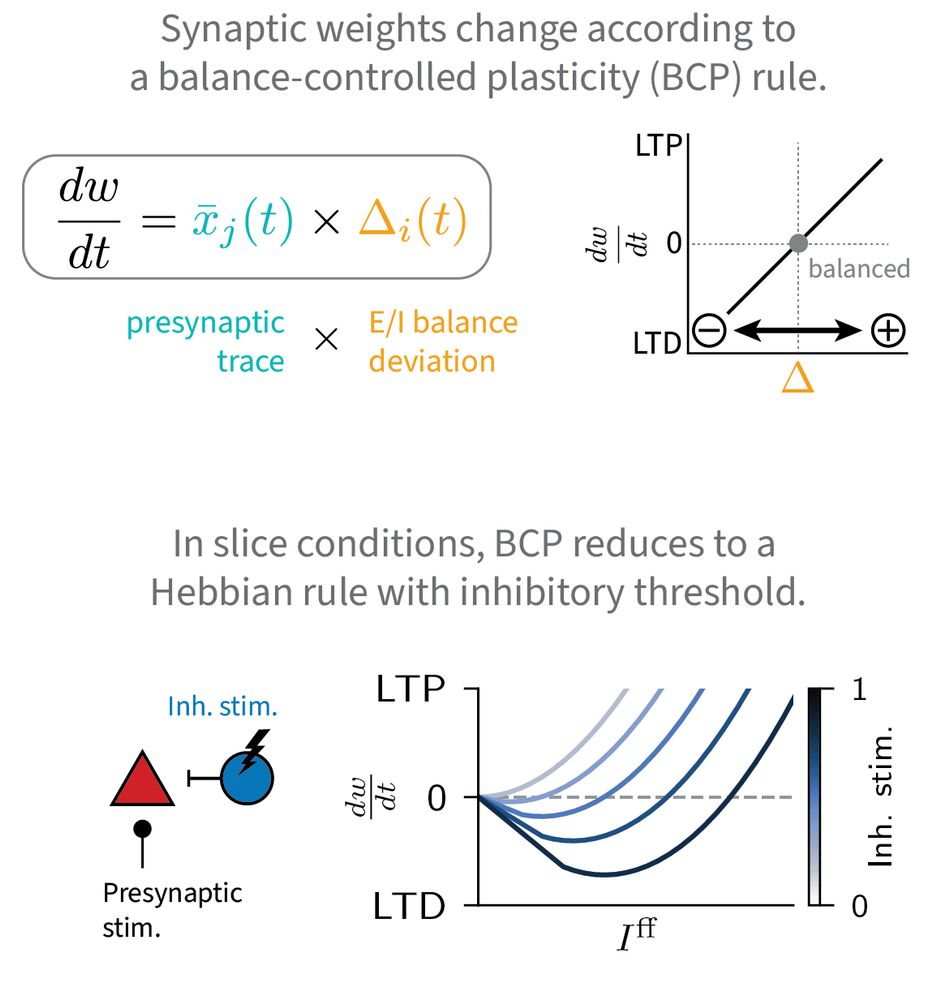

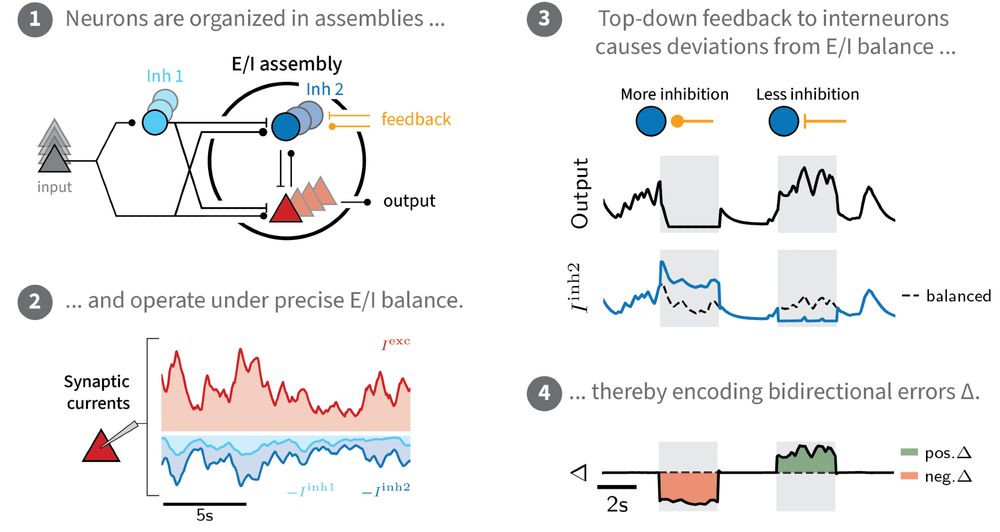

How brain networks balance learning and memory

FMI researchers have provided new insights into how the brain organizes and processes memories, thanks to a study that looks at the balance between excitatory and inhibitory neurons. Memory networks h...

www.fmi.ch

Friedemann Zenke

@fzenke.bsky.social

· May 28

Friedemann Zenke

@fzenke.bsky.social

· May 27