Ev Fedorenko

@evfedorenko.bsky.social

6.2K followers

570 following

80 posts

I study language using tools from cognitive science and neuroscience. I also like snuggles.

Reposted by Ev Fedorenko

Adele Goldberg

@adelegoldberg.bsky.social

· Aug 15

Ev Fedorenko

@evfedorenko.bsky.social

· Aug 12

Ev Fedorenko

@evfedorenko.bsky.social

· Aug 12

Carl Zimmer

@carlzimmer.com

· Aug 12

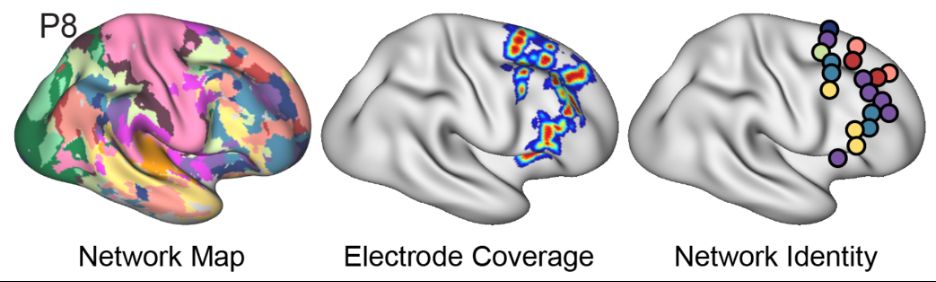

The extended language network: Language selective brain areas whose contributions to language remain to be discovered

Although language neuroscience has largely focused on ‘core’ left frontal and temporal brain areas and their right-hemisphere homotopes, numerous other areas—cortical, subcortical, and cerebellar—have...

www.biorxiv.org

Ev Fedorenko

@evfedorenko.bsky.social

· Aug 12

Reposted by Ev Fedorenko

Hope Kean

@hopekean.bsky.social

· Aug 3

Evidence from Formal Logical Reasoning Reveals that the Language of Thought is not Natural Language

Humans are endowed with a powerful capacity for both inductive and deductive logical thought: we easily form generalizations based on a few examples and draw conclusions from known premises. Humans al...

tinyurl.com