Hannah Small

@hsmall.bsky.social

83 followers

110 following

9 posts

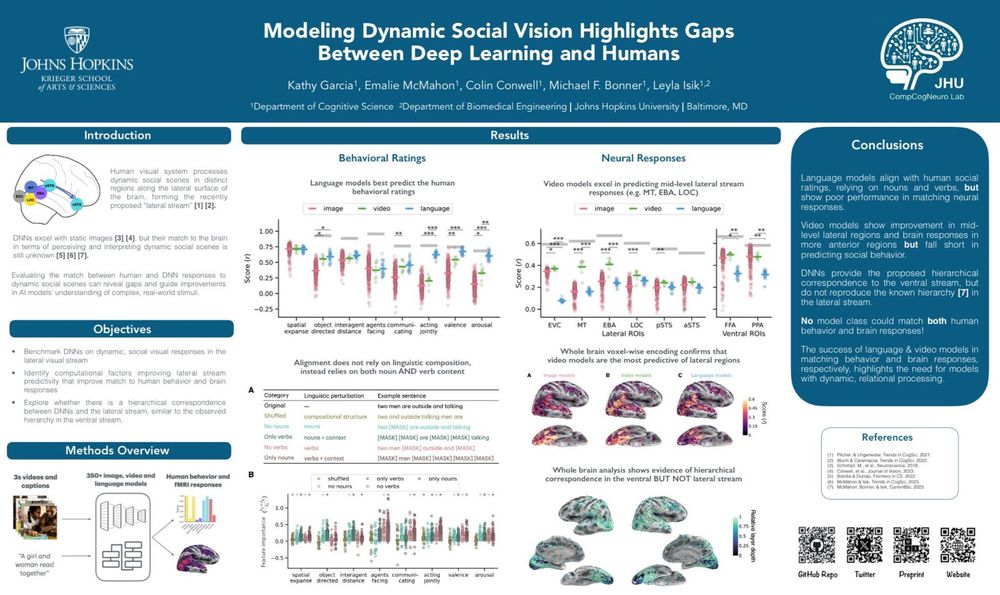

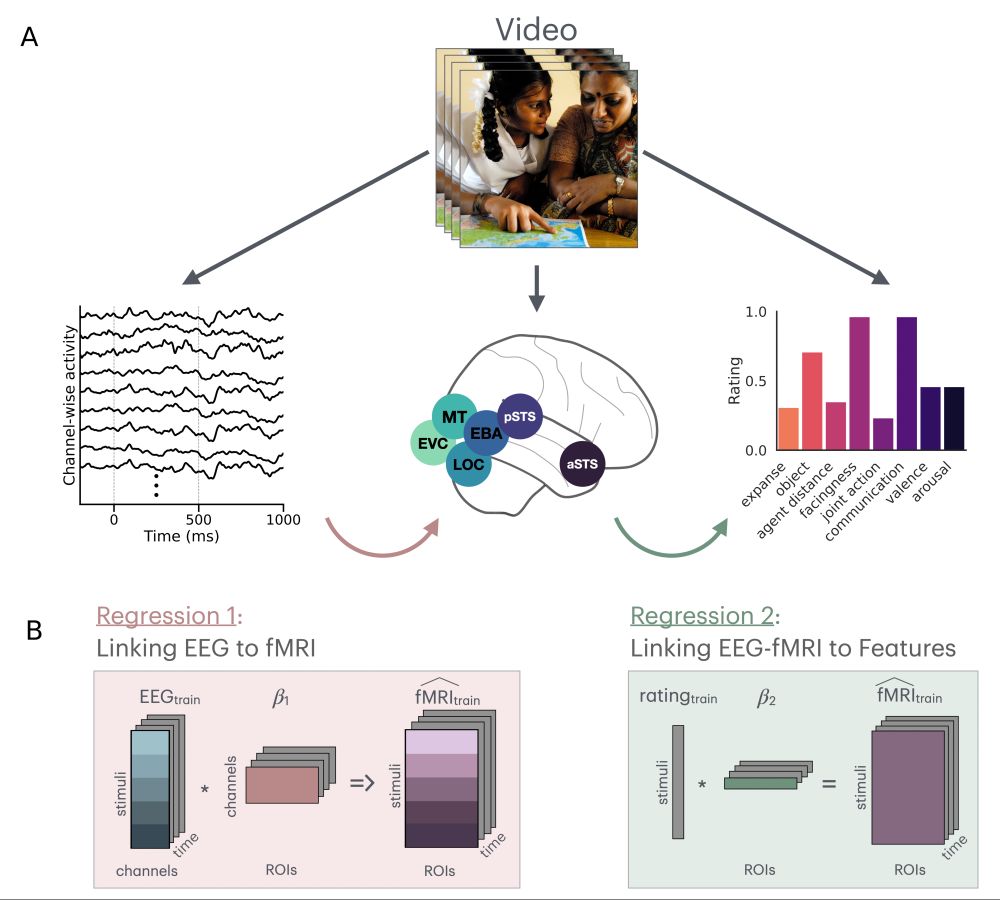

5th year PhD student in Cognitive Science at Johns Hopkins, working with Leyla Isik

https://www.hannah-small.com/

Reposted by Hannah Small

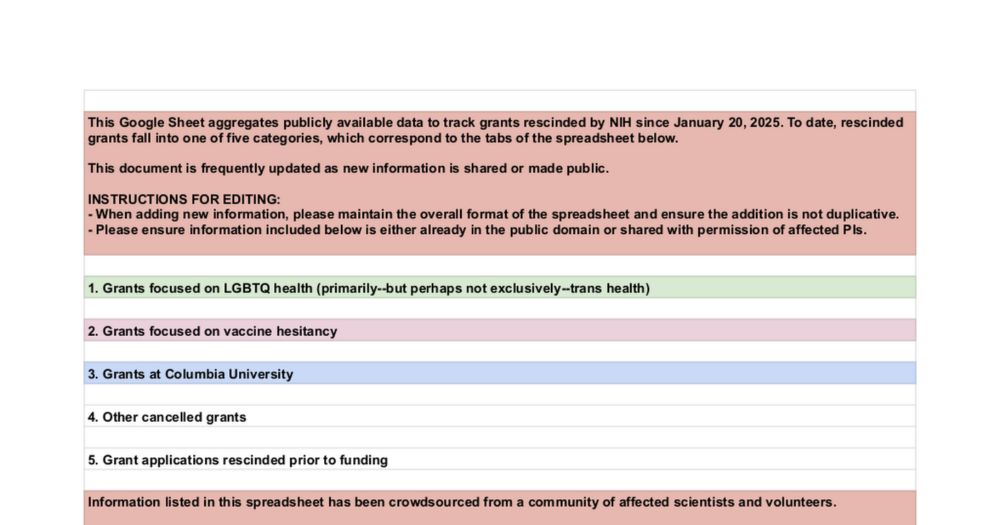

Emily Finn

@esfinn.bsky.social

· May 9

Reposted by Hannah Small

Colton Casto

@coltoncasto.bsky.social

· Apr 21

The cerebellar components of the human language network

The cerebellum's capacity for neural computation is arguably unmatched. Yet despite evidence of cerebellar contributions to cognition, including language, its precise role remains debated. Here, we sy...

www.biorxiv.org
