Giorgos Kordopatis-Zilos

@gkordo.bsky.social

110 followers

270 following

24 posts

Postdoct Researcher at Visual Recognition Group, CTU in Prague - gkordo.github.io/

Posts

Media

Videos

Starter Packs

Reposted by Giorgos Kordopatis-Zilos

Reposted by Giorgos Kordopatis-Zilos

Reposted by Giorgos Kordopatis-Zilos

Reposted by Giorgos Kordopatis-Zilos

Spyros Gidaris

@spyrosgidaris.bsky.social

· Jun 13

Reposted by Giorgos Kordopatis-Zilos

Reposted by Giorgos Kordopatis-Zilos

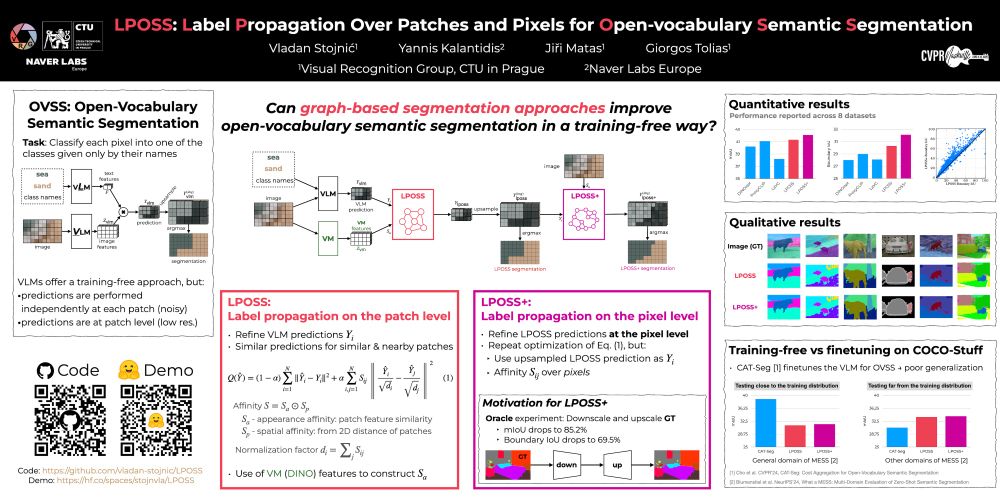

Giorgos Tolias

@gtolias.bsky.social

· Jun 12

Reposted by Giorgos Kordopatis-Zilos

Reposted by Giorgos Kordopatis-Zilos