Graham Flick

@grahamflick.bsky.social

350 followers

390 following

14 posts

NSERC Postdoc @ the Rotman Research Institute, Toronto

Studying language, memory, and neural oscillations

https://sites.google.com/view/grahamflick

Posts

Reposted by Graham Flick

Joel Voss

@vosstacular.bsky.social

· May 2

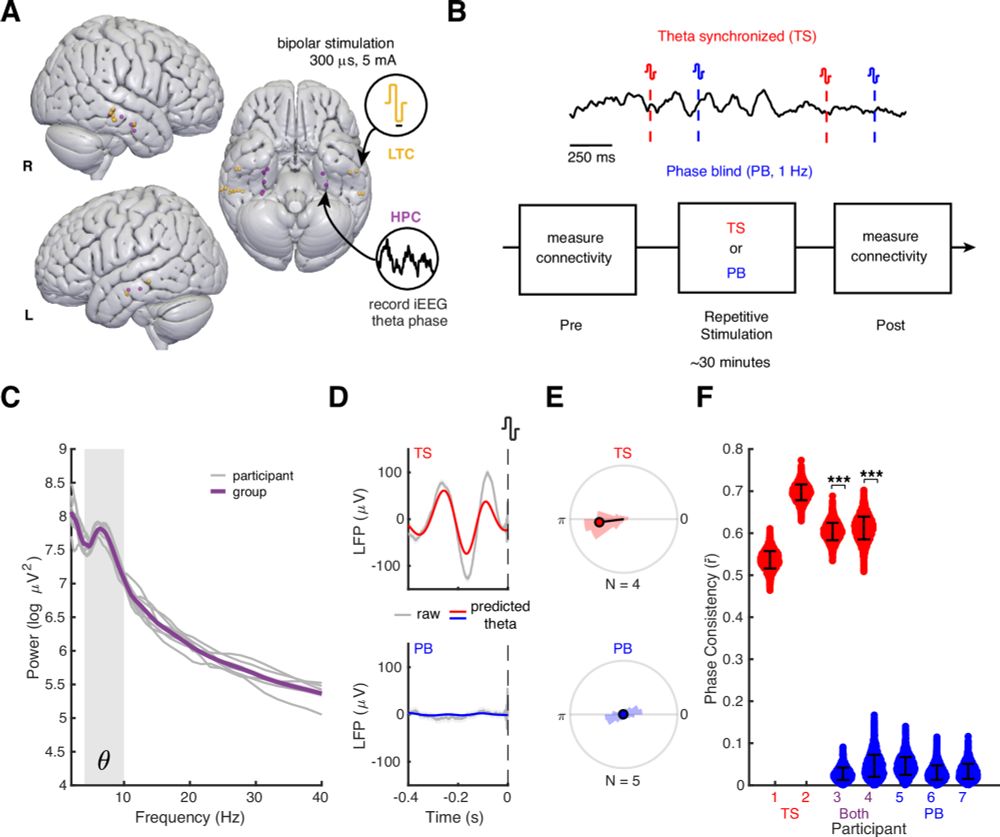

Closed-loop control of theta oscillations enhances human hippocampal network connectivity

Nature Communications - Closed-loop brain stimulation of the human hippocampal theta rhythm produces lasting enhancement of network communication. This implicates theta rhythms in human hippocampal...

rdcu.be

Reposted by Graham Flick

Liina Pylkkänen

@liinapy.bsky.social

· Apr 28

Graham Flick

@grahamflick.bsky.social

· Apr 28

Reading ahead: Localized neural signatures of parafoveal word processing and skipping decisions

Visual reading proceeds fixation-by-fixation, with individual words recognized and integrated into evolving conceptual representations within only hundreds of milliseconds. This relies, in part, on in...

www.biorxiv.org

Graham Flick

@grahamflick.bsky.social

· Apr 28

Reading ahead: Localized neural signatures of parafoveal word processing and skipping decisions

Visual reading proceeds fixation-by-fixation, with individual words recognized and integrated into evolving conceptual representations within only hundreds of milliseconds. This relies, in part, on in...

www.biorxiv.org

Reposted by Graham Flick

Alex White

@alexlw.bsky.social

· Apr 8

Barnard Early-Career Faculty Fellow, Neuroscience & Behavior

If you are a current Barnard College employee, please use the internal career site to apply for this position. Job: Barnard Early-Career Faculty Fellow, Neuroscience & Behavior Job Summary: With B...

barnard.wd1.myworkdayjobs.com

Graham Flick

@grahamflick.bsky.social

· Mar 30

Reposted by Graham Flick

Graham Flick

@grahamflick.bsky.social

· Jan 7

Dan Lametti

@daniellametti.com

· Jan 4

Memories of hand movements are tied to speech through learning - Psychonomic Bulletin & Review

Hand movements frequently occur with speech. The extent to which the memories that guide co-speech hand movements are tied to the speech they occur with is unclear. Here, we paired the acquisition of ...

link.springer.com

Reposted by Graham Flick

Graham Flick

@grahamflick.bsky.social

· Nov 15