The CLaRa-7B-Instruct model is Apple's instruction-tuned unified RAG model with built-in semantic document compression (16x and 128x). It supports instruction-following QA directly from compressed document representations.

With 128x compression, English Wikipedia fits in 0.2 GB.
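A quick back-of-the-envelope check of that figure, as a sketch only: if 128x compression yields 0.2 GB, the implied pre-compression size is 0.2 × 128 = 25.6 GB, and the same corpus at the 16x setting would take about 1.6 GB. This assumes the compression ratio applies directly to storage size, which the post does not state; the helper names below are hypothetical.

```python
# Hypothetical helpers relating compressed size, compression ratio, and baseline size.
# Assumption (not stated in the post): the ratio applies directly to storage size.

COMPRESSED_GB_AT_128X = 0.2  # figure quoted in the post


def baseline_size_gb(compressed_gb: float, ratio: float) -> float:
    """Size before compression implied by a compressed size and a ratio."""
    return compressed_gb * ratio


def compressed_size_gb(baseline_gb: float, ratio: float) -> float:
    """Size after compressing a baseline by the given ratio."""
    return baseline_gb / ratio


baseline = baseline_size_gb(COMPRESSED_GB_AT_128X, 128)  # 25.6 GB
at_16x = compressed_size_gb(baseline, 16)                 # 1.6 GB

print(f"implied baseline: {baseline:.1f} GB")
print(f"same corpus at 16x: {at_16x:.1f} GB")
```

The point of the 16x line is simply that the milder setting costs 8x more storage than the 128x one for the same corpus.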

- *Natural emergent misalignment*

- Honesty interventions, lie detection

- Self-report finetuning

- CoT obfuscation from output monitors

- Consistency training for robustness

- Weight-space steering

More at open.substack.com/pub/aisafety...

“It gives me no pleasure to say what I’m about to say because I worked with Pete Hegseth for seven or eight years at Fox News. This is an act of a war crime.... There’s absolutely no legal basis for it.”

- Newsmax's Judge Napolitano

They’ve even lost Newsmax on this one.

Larger propellers with optimized shape and soundproofed landing areas might work.

www.youtube.com/watch?v=oT80...

People's hardware will get better and models of constant capability will shrink. Perhaps every laptop will run models with capability equal to today's frontier.

#neuroskyence

www.thetransmitter.org/this-paper-c...

New model, new benchmarks!

The biggest jump for DeepSeek V3.2 is on agentic coding, where it seems poised to push many models off the Pareto frontier, including Sonnet 4.5, MiniMax M2, and K2 Thinking.

arxiv.org/abs/2510.15745

It appears to be a more rigorous look at RL-as-a-Service, among other things.

www.tensoreconomics.com/p/ai-infrast...

bsky.app/profile/hars...

If this scales, there will be no technical barriers to flying on your daily commute.

www.youtube.com/watch?v=P4rZ...

overreacted.io/open-social/

I'd add that cryptography and LLMs complement these ideas well.

That changes something fundamental about how we use the internet ...

It's insane how we've been held back by feudal, technocratic architectures.

The main function of the H-1B visa program is not to hire “the best and the brightest,” but rather to replace good-paying American jobs with low-wage indentured servants from abroad.

The cheaper the labor they hire, the more money the billionaires make.

Set aside your judgement and read the blogpost as being purely about how dating apps work. You can learn so much about people!

blog.luap.info/what-really-...

Eventually the semiconductor industry will reach the end of history and that will have implications for ~everything.

pubs.acs.org/doi/10.1021/...

EB-induced deposition can achieve similar resolution, but it's 1000x slower than direct EB lithography.

⚡100x Training Throughput

🎯Fast Convergence

🔢Pure Int8 Pretraining of RNN LLMs

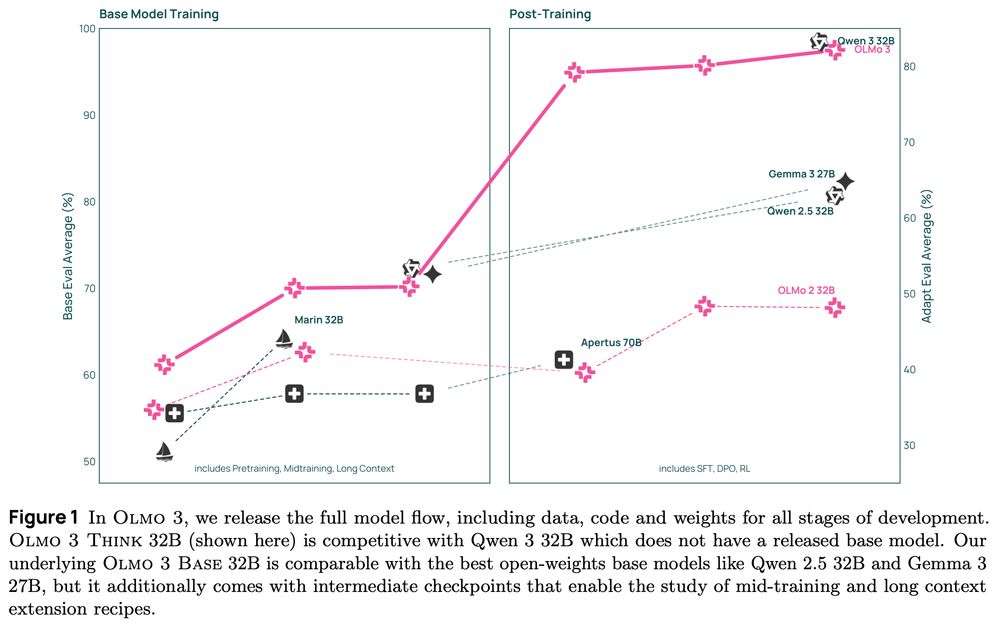

This family of 7B and 32B models represents:

1. The best 32B base model.

2. The best 7B Western thinking & instruct models.

3. The first 32B (or larger) fully open reasoning model.

(link from the end: www.sympatheticopposition.com/p/hyperstimu...)
