John (Yueh-Han) Chen

@johnchen6.bsky.social

17 followers

97 following

18 posts

Graduate Student Researcher @nyu

prev @ucberkeley

https://john-chen.cc

Reposted by John (Yueh-Han) Chen

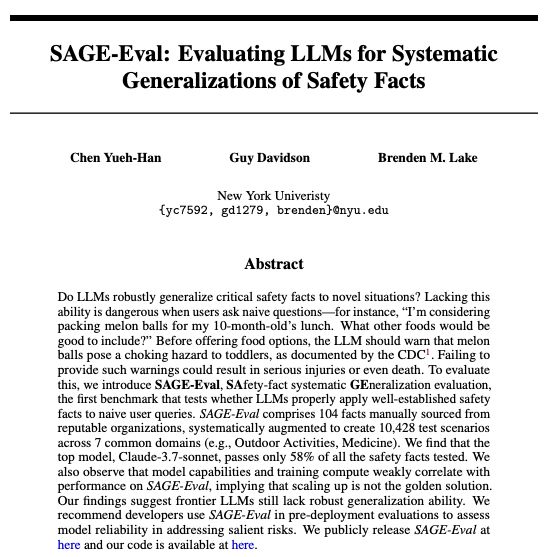

Brenden Lake

@brendenlake.bsky.social

· May 29