Julian Skirzynski

@jskirzynski.bsky.social

520 followers

160 following

47 posts

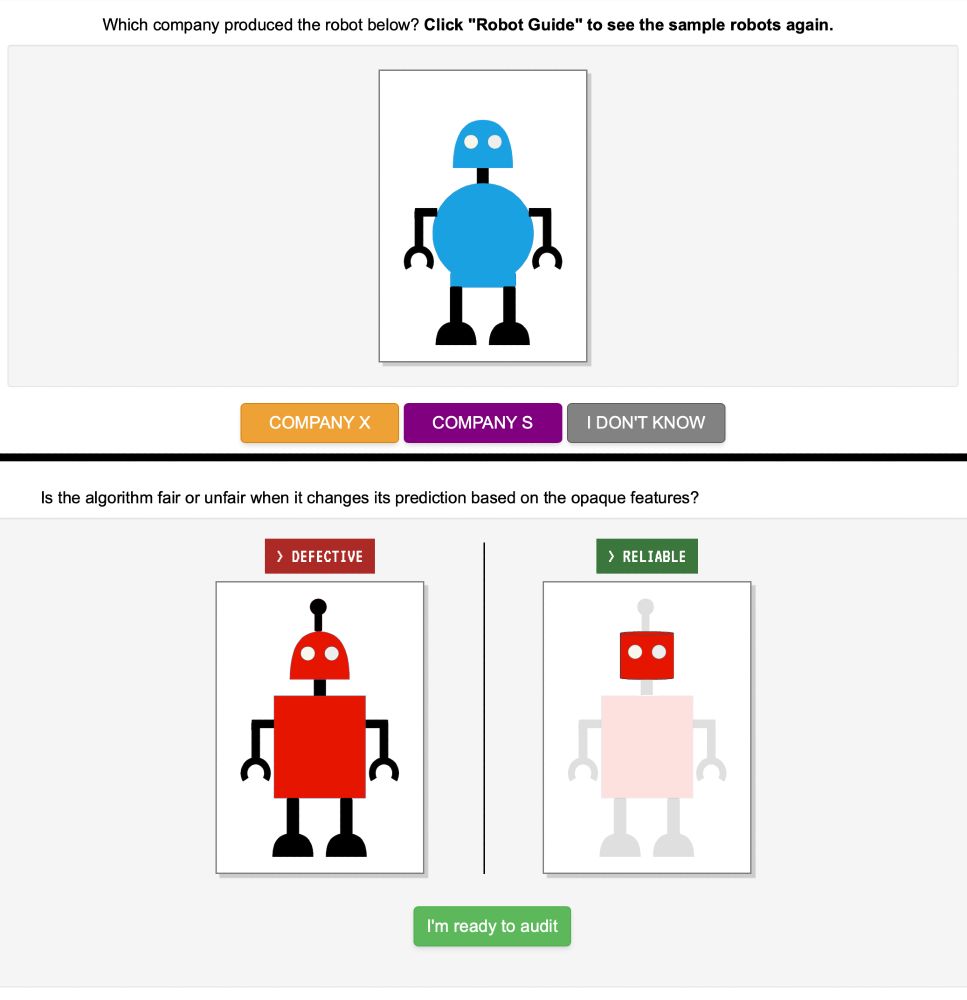

PhD student in Computer Science @UCSD. Studying interpretable AI and RL to improve people's decision-making.

Reposted by Julian Skirzynski

Ezequiel Lopez-Lopez

@eloplop.bsky.social

· Jul 28

NYAS Publications

Generative artificial intelligence (GenAI) applications, such as ChatGPT, are transforming how individuals access health information, offering conversational and highly personalized interactions. Whi...

nyaspubs.onlinelibrary.wiley.com

Reposted by Julian Skirzynski