Karen Levy

@karenlevy.bsky.social

3.5K followers

290 following

12 posts

Law/tech, surveillance, work, truckers. Faculty Cornell Information Science, Cornell Law / Fellow @NewAmerica / Data Driven: http://tinyurl.com/57v559mv / www.karen-levy.net

Karen Levy

@karenlevy.bsky.social

· Jun 3

Pegah Moradi

@pegahmoradi.bsky.social

· Jun 3

The Human in the Loop: Pegah Moradi on Automation, Discretion, and the Future of Frontline Work - Siegel Family Endowment

Pegah Moradi is a PhD candidate in Information Science at Cornell University, where she studies the social and organizational dimensions of digital automation, with a focus on its impacts on work and ...

www.siegelendowment.org

Karen Levy

@karenlevy.bsky.social

· Mar 12

Reposted by Karen Levy

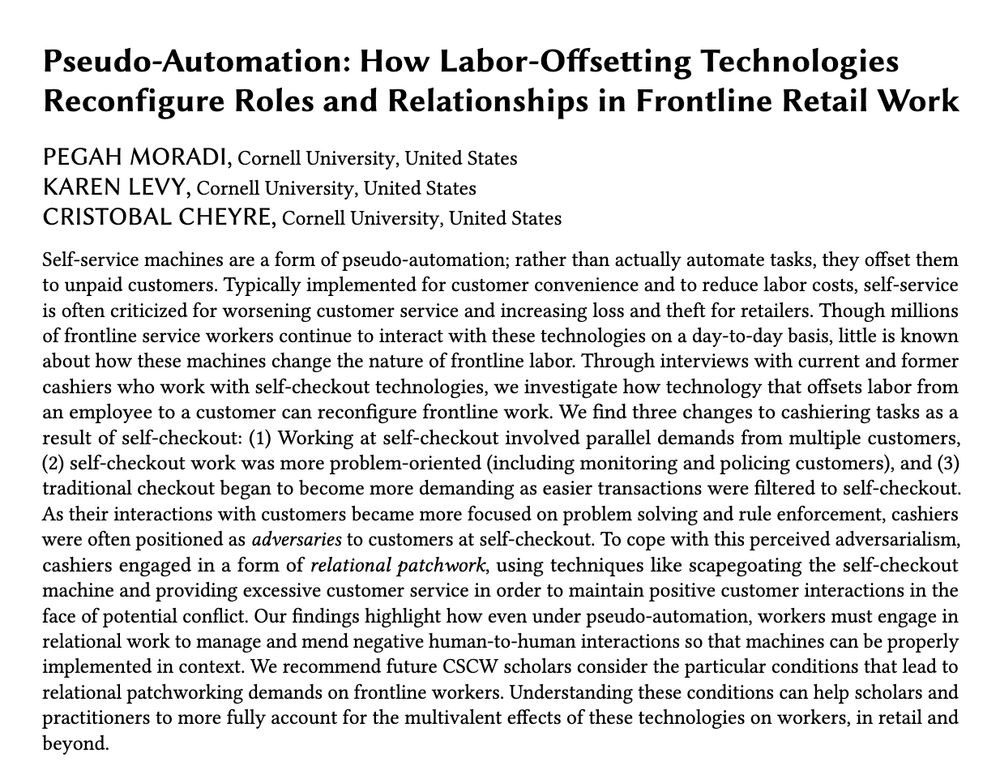

Pegah Moradi

@pegahmoradi.bsky.social

· Mar 12

Sociotechnical Change: Tracing Flows, Languages, and Stakes Across Diverse Cases| “A Fountain Pen Come to Life”: The Anxieties of the Autopen | Moradi | International Journal of Communication

ijoc.org

Reposted by Karen Levy

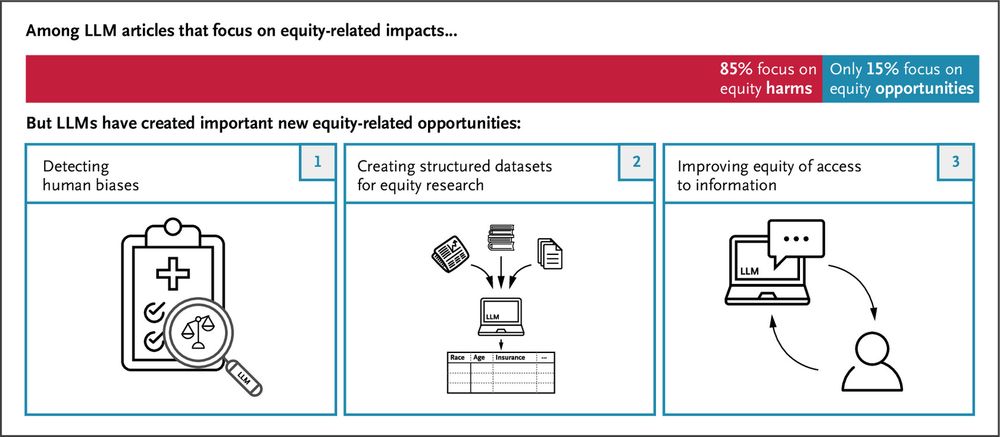

Nikhil Garg

@nkgarg.bsky.social

· Mar 10

Reposted by Karen Levy

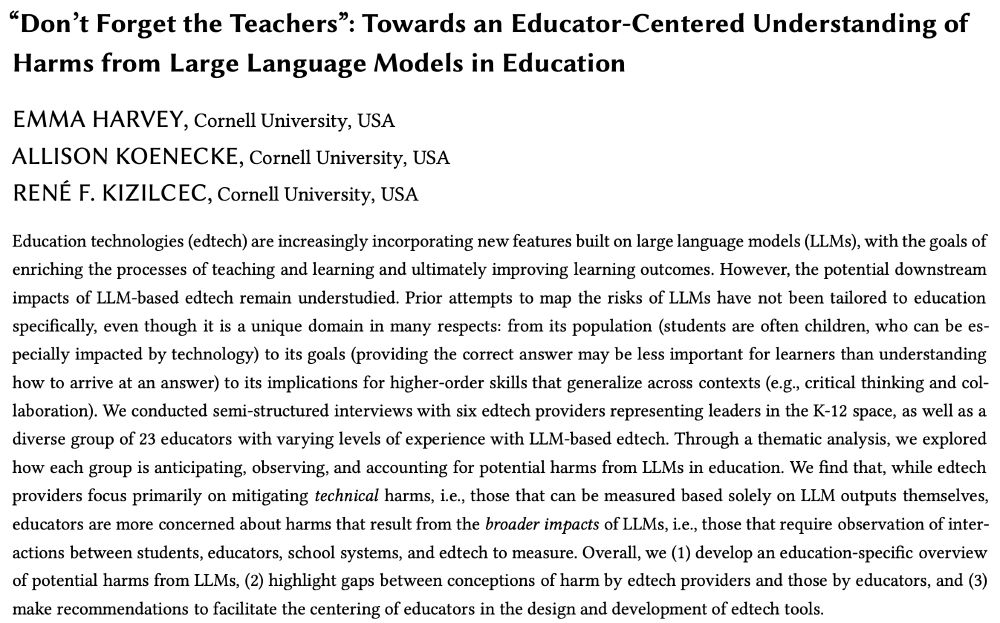

Sophie Greenwood

@sjgreenwood.bsky.social

· Mar 10

Karen Levy

@karenlevy.bsky.social

· Nov 25

Your Boss is Probably Spying on You: New Data on Workplace Surveillance

New research reveals that more than two-thirds of U.S. workers are subject to electronic monitoring, and that more intensive productivity monitoring is associated with higher levels of anxiety…

lpeproject.org

Karen Levy

@karenlevy.bsky.social

· Nov 17

![Text from article: Automation may also have unintended social consequences. Pegah Moradi, a PhD candidate and researcher in Information Science at Cornell University, studies automation in retail. “When [an electronic shelf label] breaks down in the store,” she explained, “the worker has to repair the system even if they don’t have the technical expertise. The customer becomes frustrated: There is no price available, or why has the price changed? The burden falls on the employee to alleviate the tension of that situation.” The technology may put employees in the position of performing what Moradi calls “relationship management,” adding social pressure to service jobs but without additional pay or training.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:iuapdbkkbx2nvfqoqksek4re/bafkreihfqzgnucd7rmexljtf674zig7ahjqkkadhdbvdtbwqmgzptin6vy@jpeg)