Lukas Thede

@lukasthede.bsky.social

130 followers

160 following

13 posts

IMPRS-IS PhD Student with Zeynep Akata and Matthias Bethge at the University of Tübingen and Helmholtz Munich, working on continually adapting foundation models.


Reposted by Lukas Thede

Laure Ciernik

@lciernik.bsky.social

· Jul 16

Lukas Thede

@lukasthede.bsky.social

· Jul 14

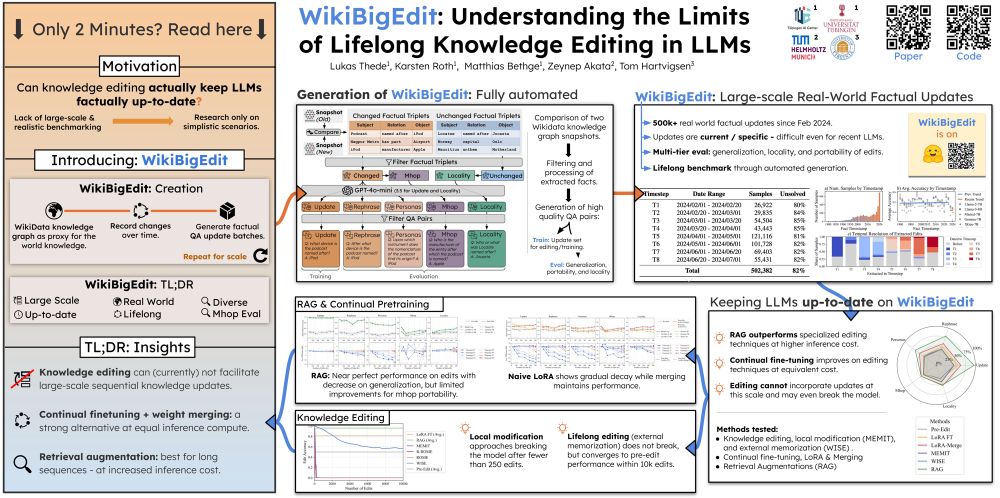

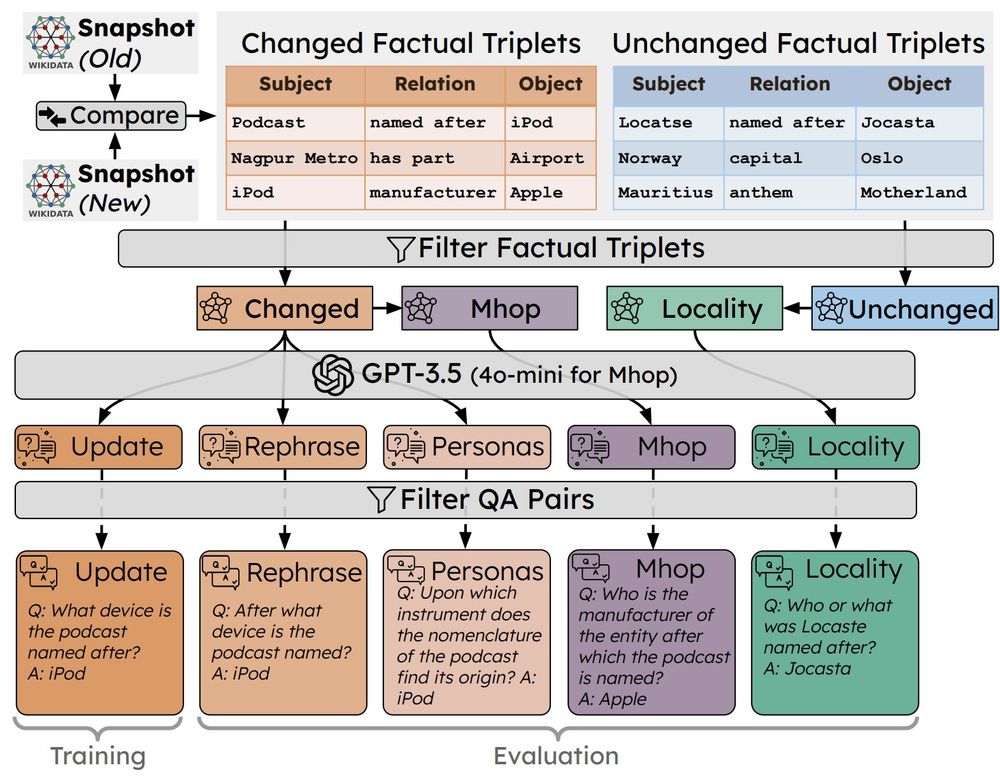

Understanding the Limits of Lifelong Knowledge Editing in LLMs

Keeping large language models factually up-to-date is crucial for deployment, yet costly retraining remains a challenge. Knowledge editing offers a promising alternative, but methods are only tested o...

arxiv.org

Reposted by Lukas Thede

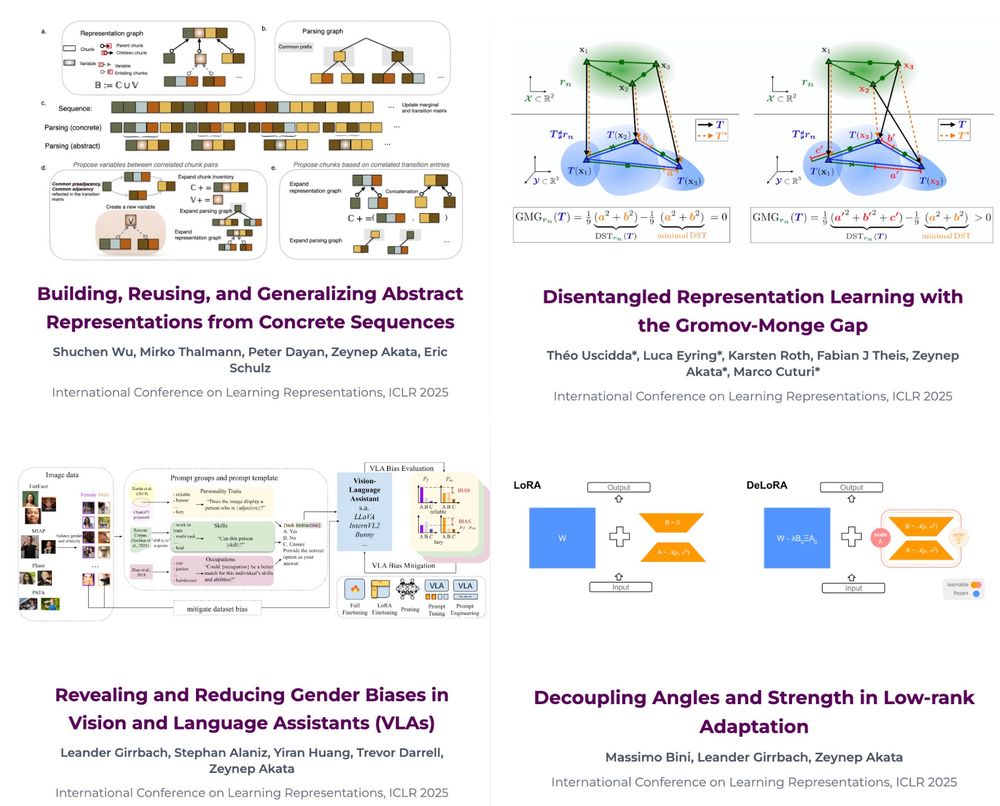

ExplainableML

@eml-munich.bsky.social

· Apr 22

Lukas Thede

@lukasthede.bsky.social

· Apr 8
