Mahdi Haghifam

@mahdihaghifam.bsky.social

100 followers

130 following

8 posts

Researcher in ML and Privacy.

PhD @UofT & @VectorInst. Previously Research Intern @Google and @ServiceNowRSRCH.

https://mhaghifam.github.io/mahdihaghifam/

Reposted by Mahdi Haghifam

Gautam Kamath

@gautamkamath.com

· May 1

Tips on How to Connect at Academic Conferences

I was a kinda awkward teenager. If you are a CS researcher reading this post, then chances are, you were too. How to navigate social situations and make friends is not always intuitive, and has to …

kamathematics.wordpress.com

Reposted by Mahdi Haghifam

Thomas Steinke

@stein.ke

· Mar 11

What my privacy papers (don't) have to say about copyright and generative AI

My work on privacy-preserving machine learning is often cited by lawyers arguing for or against how generative AI models violate copyright. This maybe isn't the right work to be citing.

nicholas.carlini.com

Mahdi Haghifam

@mahdihaghifam.bsky.social

· Nov 28

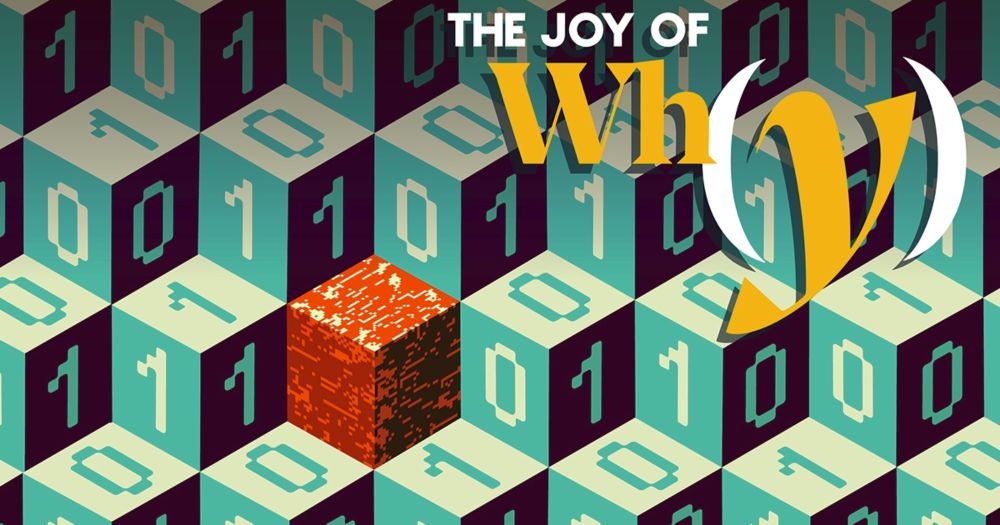

![Full LaTeX source: https://pastebin.com/mA6KjUJs

\begin{proposition}[Triangle-like inequality for KL divergence]\label{prop:kl-triangle}

Let $P$, $R$, and $Q$ be probability distributions with $P$ being absolutely continuous with respect to $R$ and $R$ being absolutely continuous with respect to $Q$.

Let $\kappa \in (1,\infty)$.

Then

\[

\dr{\text{KL}}{P}{Q} \le \frac{\kappa}{\kappa-1} \dr{\text{KL}}{P}{R} + \dr{\kappa}{R}{Q},

\]

where $\dr{\text{KL}}{P}{Q} := \ex{X \gets P}{\log(P(X)/Q(X))}$ denotes the KL divergence and\\$\dr{\kappa}{R}{Q} = \frac{1}{\kappa-1} \log \ex{X \gets R}{(R(X)/Q(X))^{\kappa-1}}$ denotes the R\'enyi divergence of order $\kappa$.

\end{proposition}](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:thfnaqgktdwqbm42radc322i/bafkreibz3gfiqvyvbld7jgfnb6kzac2j6qrxirofpwrxiv5mpz6j7r6md4@jpeg)
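
The proposition in the image can be sanity-checked numerically on small discrete distributions. The sketch below is an illustration only, not part of the original post: the function names, the support size, the sampling scheme, and the range of $\kappa$ are all assumptions chosen for the example. It draws random strictly positive distributions (so the absolute continuity conditions hold automatically) and reports the smallest observed value of the right-hand side minus the left-hand side.

```python
import math
import random


def random_distribution(n, rng):
    """Draw a strictly positive probability vector of length n."""
    weights = [rng.random() + 1e-3 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


def kl_divergence(p, q):
    """KL(P || Q) = E_{X ~ P}[log(P(X)/Q(X))] for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def renyi_divergence(r, q, kappa):
    """Renyi divergence of order kappa > 1, as defined in the proposition."""
    moment = sum(ri * (ri / qi) ** (kappa - 1) for ri, qi in zip(r, q))
    return math.log(moment) / (kappa - 1)


def triangle_gap(p, r, q, kappa):
    """Right-hand side minus left-hand side; nonnegative if the bound holds."""
    lhs = kl_divergence(p, q)
    rhs = kappa / (kappa - 1) * kl_divergence(p, r) + renyi_divergence(r, q, kappa)
    return rhs - lhs


def smallest_gap(trials=2000, support=5, seed=0):
    """Smallest gap seen over random (P, R, Q, kappa) draws (hypothetical helper)."""
    rng = random.Random(seed)
    worst = math.inf
    for _ in range(trials):
        p, r, q = (random_distribution(support, rng) for _ in range(3))
        kappa = 1.0 + rng.uniform(0.05, 10.0)  # any kappa in (1, infinity) is allowed
        worst = min(worst, triangle_gap(p, r, q, kappa))
    return worst
```

A nonnegative result from `smallest_gap()` is consistent with the inequality on the sampled instances; it is a spot check under these assumptions, not a proof.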