andrehuang.github.io/loftup-site/

jcken95.github.io/projects/cod...

arxiv.org/abs/2503.08306

Work by @steevenj7.bsky.social et al.

We're excited to share MVSAnywhere, which we will present at #CVPR2025. MVSAnywhere produces sharp depths, generalizes to and is robust across all kinds of scenes, and is scale-agnostic.

More info:

nianticlabs.github.io/mvsanywhere/

Zador Pataki, @pesarlin.bsky.social Johannes L. Schonberger, @marcpollefeys.bsky.social

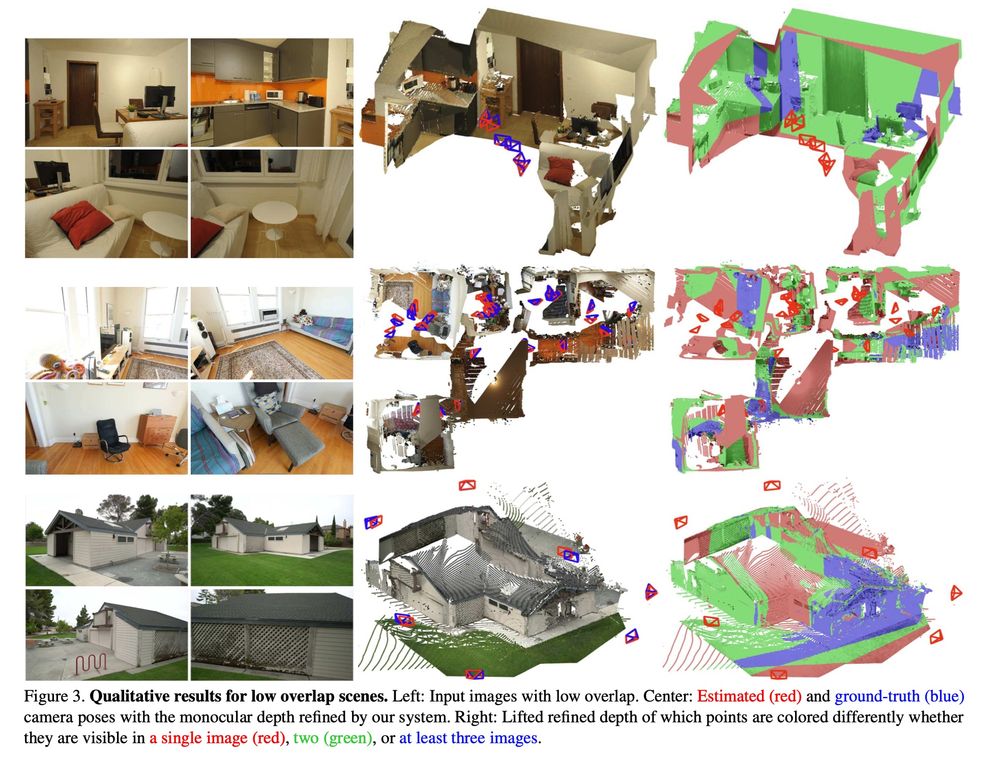

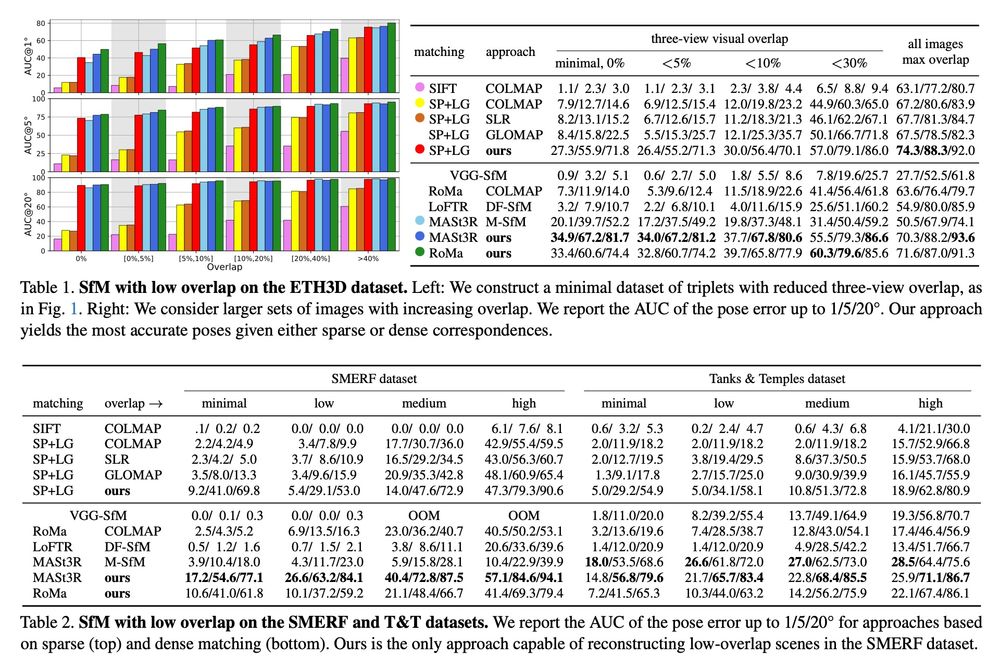

tl;dr: using monodepth to reconstruct w/o co-visible triplets. Many ablations and details. M3Dv2 FTW

demuc.de/papers/patak...

Jerred Chen, Ronald Clark

tl;dr: predict flow from a blurred image -> solve for velocity, use it as IMU information.

arxiv.org/abs/2503.17358
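
A minimal sketch of the "solve for velocity" step. Several assumptions here are mine and not the paper's: camera motion is rotation-only, points use normalized image coordinates, the rotational motion field follows one common sign convention, and the flow recovered from blur is treated as velocity × exposure time. Under these assumptions each flow vector is linear in the angular velocity ω, so ω comes out of a 3×3 least-squares solve. The helper names (`rotational_flow`, `estimate_angular_velocity`) are hypothetical.

```python
def rotational_flow(x, y, w):
    """Image velocity at normalized point (x, y) for camera angular velocity w = (wx, wy, wz).

    Assumption: one common sign convention for the rotational motion field.
    """
    wx, wy, wz = w
    u = x * y * wx - (1.0 + x * x) * wy + y * wz
    v = (1.0 + y * y) * wx - x * y * wy - x * wz
    return u, v


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a @ x = b for a 3x3 system using Cramer's rule."""
    d = det3(a)
    sol = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        sol.append(det3(m) / d)
    return sol


def estimate_angular_velocity(points, flows, exposure):
    """Least-squares angular velocity from per-pixel flow accumulated during the exposure."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for (x, y), (du, dv) in zip(points, flows):
        # Assumption: flow over the exposure is approximately image velocity * exposure.
        rows = [((x * y, -(1.0 + x * x), y), du / exposure),
                ((1.0 + y * y, -x * y, -x), dv / exposure)]
        for coeffs, rhs in rows:
            for i in range(3):
                atb[i] += coeffs[i] * rhs
                for j in range(3):
                    ata[i][j] += coeffs[i] * coeffs[j]
    return solve3(ata, atb)  # normal equations (A^T A) w = A^T b


# Synthetic check: generate flow from a known rotation and recover it.
true_w = (0.1, -0.2, 0.05)
exposure = 0.01
points = [(x, y) for x in (-0.5, 0.0, 0.5) for y in (-0.5, 0.0, 0.5)]
flows = []
for x, y in points:
    u, v = rotational_flow(x, y, true_w)
    flows.append((u * exposure, v * exposure))
est = estimate_angular_velocity(points, flows, exposure)
print([round(c, 6) for c in est])
```

Handling translation as well would additionally require per-pixel depth, because translational flow scales with inverse depth; this toy leaves that out.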

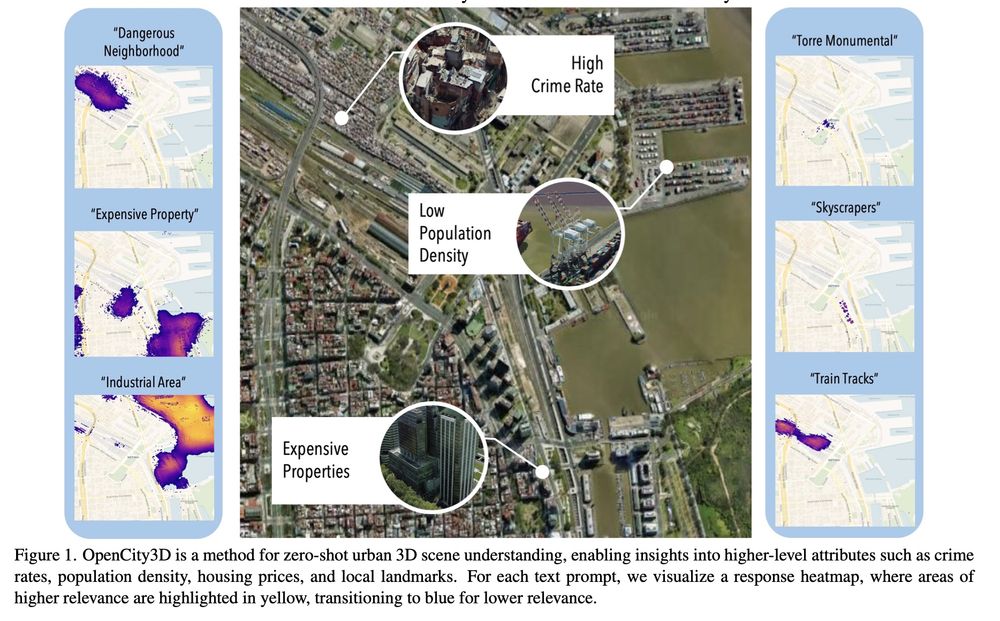

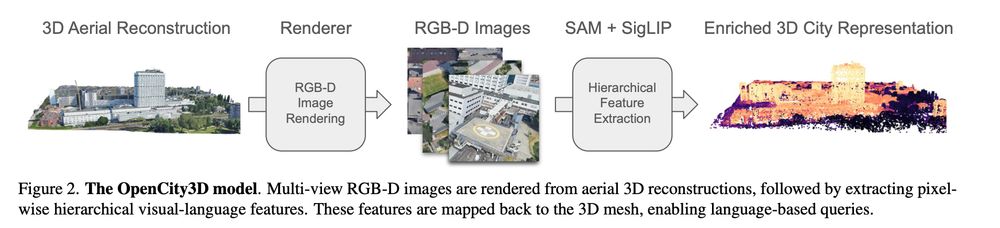

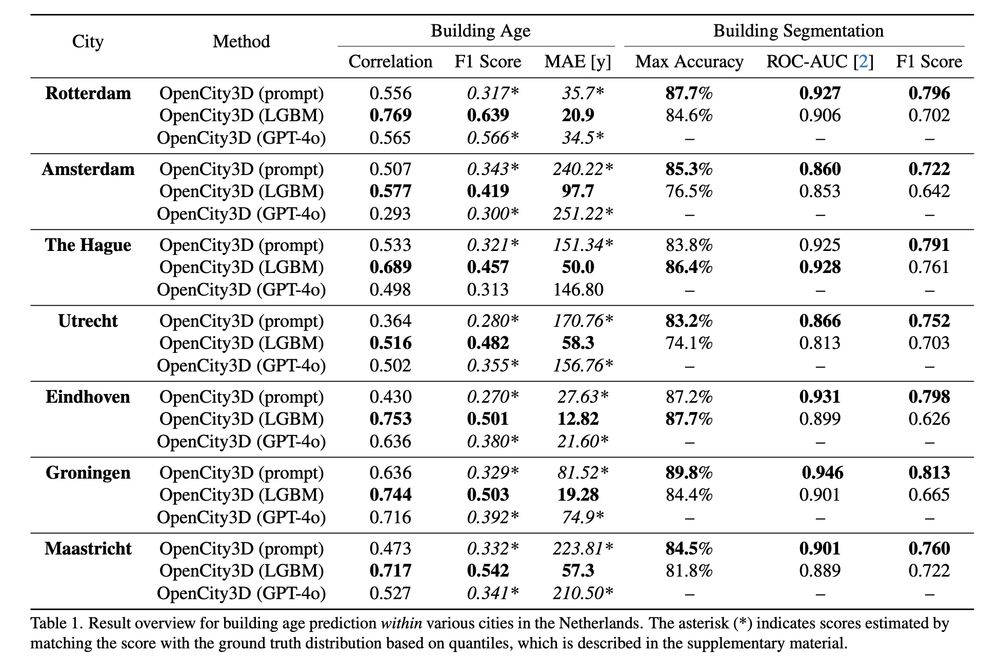

Valentin Bieri, Marco Zamboni, Nicolas S. Blumer, Qingxuan Chen, Francis Engelmann

tl;dr: if you have aerial 3D reconstruction, use SigLIP to be happy.

arxiv.org/abs/2503.16776

www.canarymedia.com/articles/foo...

Haoyu Guo, He Zhu, Sida Peng, Haotong Lin, Yunzhi Yan, Tao Xie, Wenguan Wang, Xiaowei Zhou, Hujun Bao

arxiv.org/abs/2503.14483

Stanislaw Szymanowicz, Jason Y. Zhang, Pratul Srinivasan, Ruiqi Gao, Arthur Brussee, @holynski.bsky.social, Ricardo Martin-Brualla, @jonbarron.bsky.social, Philipp Henzler

arxiv.org/abs/2503.14445

A simple alternative to normalization layers: the scaled tanh function, which they call Dynamic Tanh, or DyT.
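
A minimal sketch of DyT(x) = γ · tanh(αx) + β, the form given in the paper. Here α is a single learnable scalar, and γ and β are per-channel affine parameters like LayerNorm's weight and bias. Plain Python lists stand in for tensors, and `alpha_init=0.5` is an illustrative default rather than a value taken from the post.

```python
import math


class DyT:
    """Dynamic Tanh: y = gamma * tanh(alpha * x) + beta, applied element-wise.

    Unlike LayerNorm, it computes no mean or variance over the features:
    tanh squashes extreme activations, and gamma/beta restore a per-channel scale and shift.
    """

    def __init__(self, num_channels, alpha_init=0.5):
        self.alpha = alpha_init               # one learnable scalar (illustrative init)
        self.gamma = [1.0] * num_channels     # per-channel scale
        self.beta = [0.0] * num_channels      # per-channel shift

    def __call__(self, x):
        # x: one token's feature vector of length num_channels
        return [g * math.tanh(self.alpha * v) + b
                for v, g, b in zip(x, self.gamma, self.beta)]


layer = DyT(num_channels=4)
out = layer([0.0, 1.0, -2.0, 100.0])  # the large input saturates near 1 instead of growing
print(out)
```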

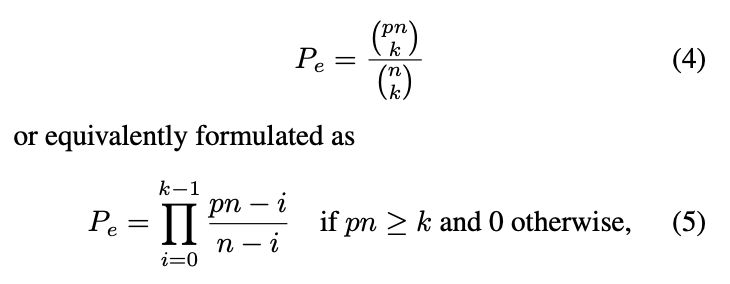

Johannes Schönberger, Viktor Larsson, @marcpollefeys.bsky.social

tl;dr: the original RANSAC formula for the number of iterations underestimates it for hard cases and overestimates it for easy ones. Here is a corrected one -> better results

arxiv.org/abs/2503.07829
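
For reference, this is the textbook criterion that the post calls the "original" formula. Given inlier ratio w, minimal sample size s and desired confidence p, the number of iterations needed to draw at least one all-inlier sample is N = ⌈log(1 − p) / log(1 − w^s)⌉. Only this classic formula is sketched below. The paper's corrected criterion is not reproduced, and the function name is hypothetical.

```python
import math


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Classic RANSAC stopping criterion N = ceil(log(1 - p) / log(1 - w^s)).

    Treats minimal samples as independent draws and assumes a single all-inlier
    sample is enough to find the right model.
    """
    p_clean = inlier_ratio ** sample_size  # chance that one minimal sample is all inliers
    if p_clean >= 1.0:
        return 1
    if p_clean <= 0.0:
        return math.inf  # no inliers: no finite number of iterations suffices
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_clean))


# e.g. a homography (4-point minimal sample) at different inlier ratios
for w in (0.9, 0.5, 0.2):
    print(w, ransac_iterations(w, sample_size=4))
```

The exponent s is what makes low inlier ratios expensive: N grows roughly like 1 / w^s.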

Interviewing Eugene Vinitsky (@eugenevinitsky.bsky.social) on self-play for self-driving and what else people do with RL

#13. Reinforcement learning fundamentals and scaling.

Post: buff.ly/8fLBJA6

YouTube: buff.ly/eJ6heSI

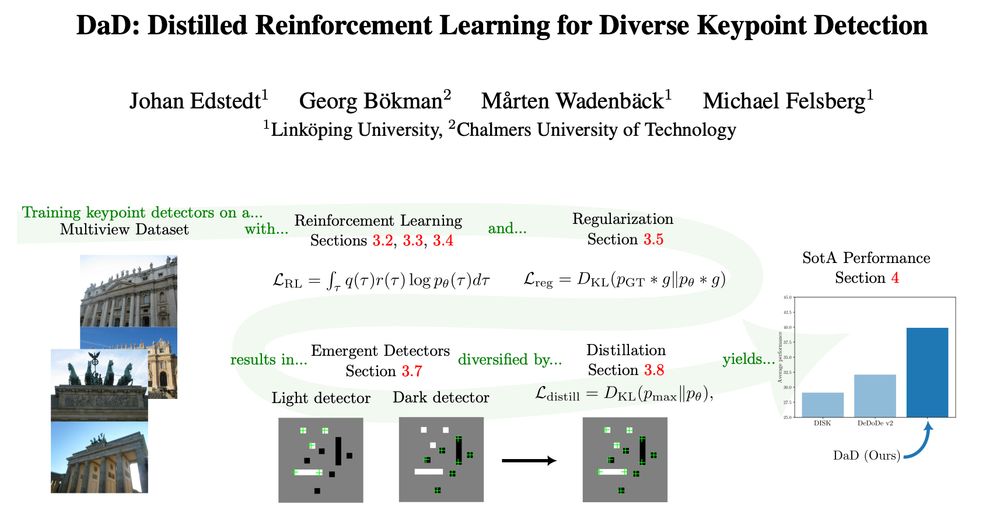

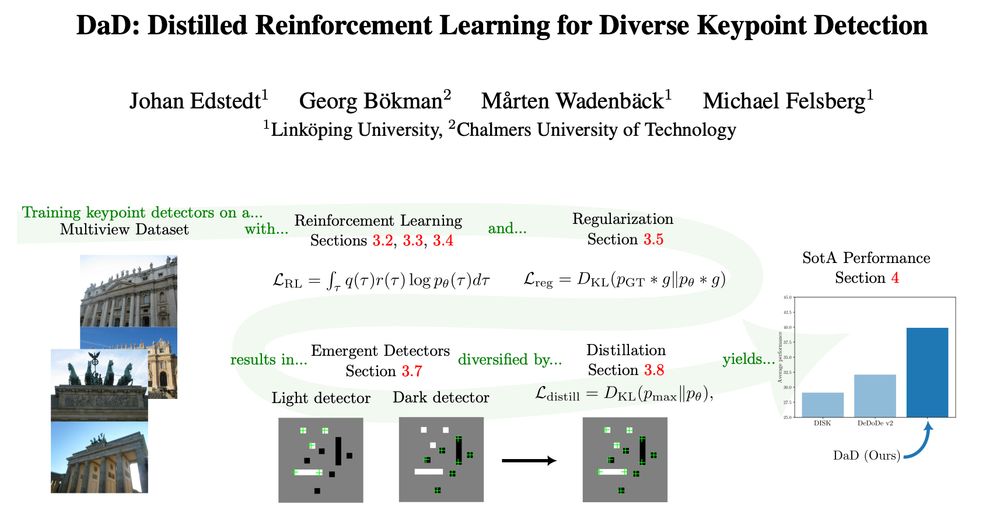

I wish to read more papers like this! Envying the reviewers

As this will get pretty long, this will be two threads.

The first will go into the RL part, and the second on the emergence and distillation.

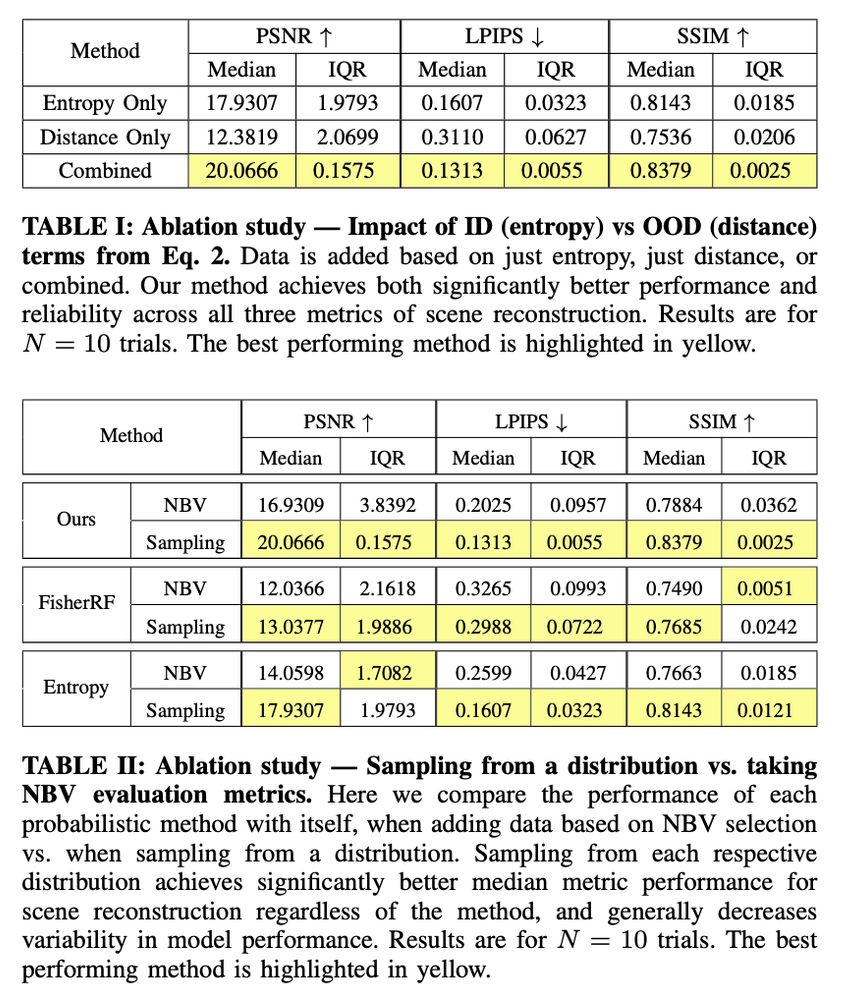

Ayush Gaggar, Todd D. Murphey

tl;dr: any uncertainty-based view sampling is better than next-best-view sampling.

I didn't get where the "augmentation" comes from though

arxiv.org/abs/2503.02092

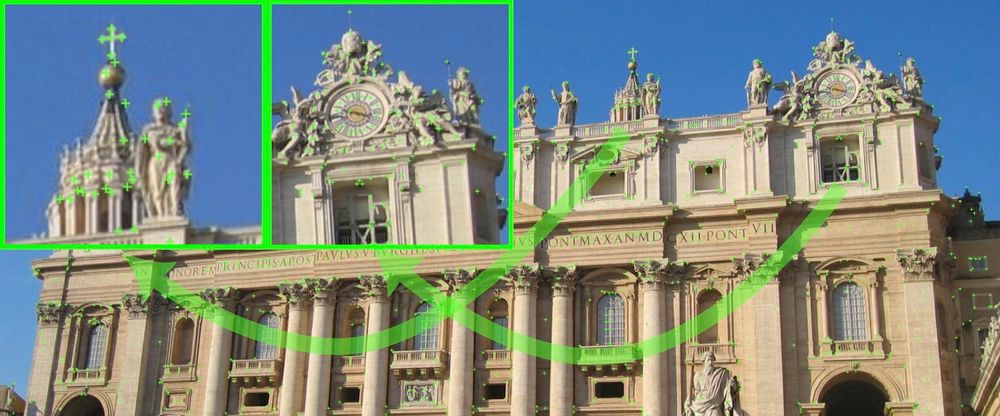

Beverley Gorry, Tobias Fischer, Michael Milford, Alejandro Fontan

tl;dr: SuperPoint + LightGlue can breathe underwater.

arxiv.org/abs/2503.04096

github.com/Parskatt/dad

Xiaoyong Lu, Songlin Du

tl;dr: replace Transformer in LoFTR with Mamba

Mamba takes the torch in local feature matching

no eval on IMC

github.com/leoluxxx/JamMa

arxiv.org/abs/2503.03437
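
Below is a toy, single-channel sketch of the operation that Mamba uses in place of attention: a selective state-space scan, where the step size Δ and the projections B and C depend on the current input. JamMa's actual scan patterns over the two feature maps, its multi-channel states and its learned projections are not reproduced. The scalar weights and the simple "image A, then image B" concatenation order are assumptions made for illustration.

```python
import math


def softplus(v):
    """Numerically stable log(1 + e^v)."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))


def selective_scan(xs, A=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Scalar toy of a selective state-space scan.

    h_t = exp(delta_t * A) * h_{t-1} + delta_t * B_t * x_t,   y_t = C_t * h_t
    with delta_t, B_t, C_t computed from the current input x_t (the "selective" part).
    One left-to-right pass: linear in sequence length, unlike pairwise attention.
    """
    h = 0.0
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)   # input-dependent step size, always > 0
        a_bar = math.exp(delta * A)     # zero-order-hold discretization; A < 0 keeps 0 < a_bar < 1
        b_t = w_b * x                   # input-dependent input projection
        c_t = w_c * x                   # input-dependent output projection
        h = a_bar * h + delta * b_t * x
        ys.append(c_t * h)
    return ys


# Hypothetical flattened 1-channel features of two images, scanned as one sequence
# so that tokens of image B can see (through the state h) tokens of image A.
feats_a = [0.5, -0.2, 0.1]
feats_b = [0.3, 0.0, -0.4]
out = selective_scan(feats_a + feats_b)
print([round(v, 4) for v in out])
```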

Timothy D Barfoot

tl;dr: minimal polynomial->Lie algebra->compact analytic results

transfer back and forth between series form and integral form

arxiv.org/abs/2503.02820
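
The textbook instance of "minimal polynomial -> compact analytic result" is the SO(3) exponential map. For a unit axis a, the skew matrix K = a^∧ satisfies K³ = −K, so the series exp(θK) = Σₙ (θK)ⁿ/n! collapses to Rodrigues' formula, I + sin θ K + (1 − cos θ) K². The sketch below checks this numerically against a truncated series. It only illustrates the general idea and is not code from the paper.

```python
import math

IDENTITY = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]


def skew(a):
    """3x3 skew-symmetric matrix a^ such that a^ @ v equals the cross product a x v."""
    x, y, z = a
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def add(A, B, s=1.0):
    """Return A + s * B."""
    return [[A[i][j] + s * B[i][j] for j in range(3)] for i in range(3)]


def expm_series(M, terms=30):
    """Series form: truncated sum over n of M^n / n!."""
    result = [row[:] for row in IDENTITY]
    term = [row[:] for row in IDENTITY]
    for n in range(1, terms):
        term = matmul(term, M)                       # term is now M^n
        result = add(result, term, 1.0 / math.factorial(n))
    return result


def exp_so3_closed_form(axis, theta):
    """Closed form that follows from the minimal polynomial K^3 = -K (unit axis)."""
    K = skew(axis)
    K2 = matmul(K, K)
    return add(add(IDENTITY, K, math.sin(theta)), K2, 1.0 - math.cos(theta))


axis = (1 / 3, 2 / 3, 2 / 3)   # unit vector
theta = 0.7
K = skew(axis)
series = expm_series([[theta * v for v in row] for row in K])
closed = exp_so3_closed_form(axis, theta)
max_err = max(abs(series[i][j] - closed[i][j]) for i in range(3) for j in range(3))
print(f"max |series - closed form| = {max_err:.2e}")
```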

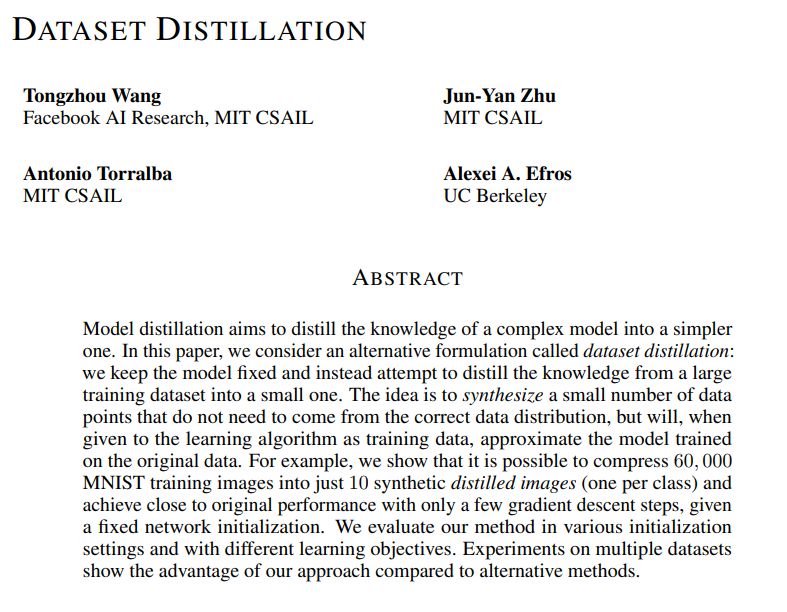

They show that it is possible to compress 60,000 MNIST training images into just 10 synthetic distilled images (one per class) and achieve close to the original performance with only a few gradient descent steps, given a fixed network initialization.
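
Here is a toy version of the idea, shrunk to a single parameter. The model is y = w · x, the "distilled dataset" is one synthetic pair (x_syn, y_syn), and the weight always starts from the same fixed w0. Each outer step takes one gradient step on the synthetic pair, measures the loss on the real data, and backpropagates through that inner step to update the synthetic pair itself. The data, learning rates and step counts are made up for illustration. The MNIST experiment uses a real network, images and a few inner steps.

```python
def real_loss(w, xs, ys):
    """Mean squared error of the 1-parameter model y = w * x on the real data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def inner_step(w0, x_syn, y_syn, lr):
    """One gradient step on the single synthetic example, loss (w * x_syn - y_syn)^2."""
    return w0 - lr * 2.0 * x_syn * (w0 * x_syn - y_syn)


def distill(xs, ys, w0=0.0, inner_lr=0.1, outer_lr=0.01, steps=500):
    """Learn one synthetic (x, y) pair so that a single step from the fixed w0 fits the real data."""
    x_syn, y_syn = 1.0, 1.0
    n = len(xs)
    for _ in range(steps):
        w1 = inner_step(w0, x_syn, y_syn, inner_lr)
        # derivative of the real-data loss with respect to the trained weight
        dloss_dw1 = 2.0 * sum(x * (w1 * x - y) for x, y in zip(xs, ys)) / n
        # chain rule back through the inner update to the synthetic example
        dw1_dx = -inner_lr * 2.0 * (2.0 * w0 * x_syn - y_syn)
        dw1_dy = inner_lr * 2.0 * x_syn
        x_syn -= outer_lr * dloss_dw1 * dw1_dx
        y_syn -= outer_lr * dloss_dw1 * dw1_dy
    return x_syn, y_syn


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x for x in xs]          # real data follows y = 3x
x_syn, y_syn = distill(xs, ys)
w1 = inner_step(0.0, x_syn, y_syn, 0.1)
print(f"synthetic pair ({x_syn:.3f}, {y_syn:.3f}) -> w after one step = {w1:.4f}")
```

The fixed initialization matters: the synthetic pair is optimized for one specific w0, just as the distilled MNIST images are tied to a fixed network initialization.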

A lightweight library for training diffusion models and sampling from them. Its core training and sampling code is implemented in fewer than 100 lines of very readable PyTorch.

github.com/yuanchenyang...
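
As a rough illustration of why such a core can stay short, here is the forward (noising) step used to build DDPM-style training pairs. With a noise schedule β_t and ᾱ_t = ∏(1 − β_s), a clean sample x0 is corrupted as x_t = √ᾱ_t · x0 + √(1 − ᾱ_t) · ε, and a network is trained to predict ε from (x_t, t). The linear schedule from 1e-4 to 0.02 over 1000 steps is the common DDPM default and is assumed here. None of this is the linked library's API, and the function names are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_t spaced linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def make_training_pair(x0, t, abar, rng):
    """Noise a clean sample x0 (list of floats) to timestep t and return (x_t, eps).

    A denoiser would then be trained on the squared error between its
    prediction eps_hat(x_t, t) and eps.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(abar[t])
    noise_scale = math.sqrt(1.0 - abar[t])
    xt = [signal_scale * a + noise_scale * e for a, e in zip(x0, eps)]
    return xt, eps


betas = linear_beta_schedule()
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]
xt, eps = make_training_pair(x0, t=500, abar=abar, rng=rng)
print(f"alpha_bar at t=0: {abar[0]:.4f}, at t=999: {abar[-1]:.6f}")
```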
