Natasha Jaques

@natashajaques.bsky.social

4.2K followers

280 following

52 posts

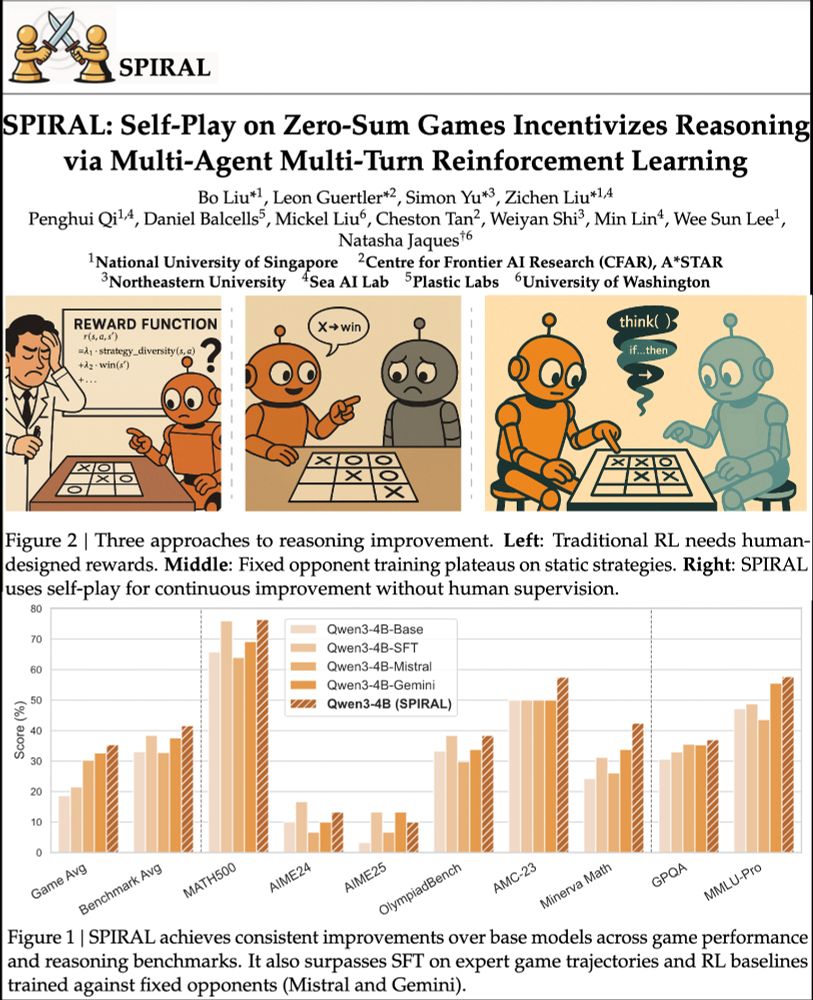

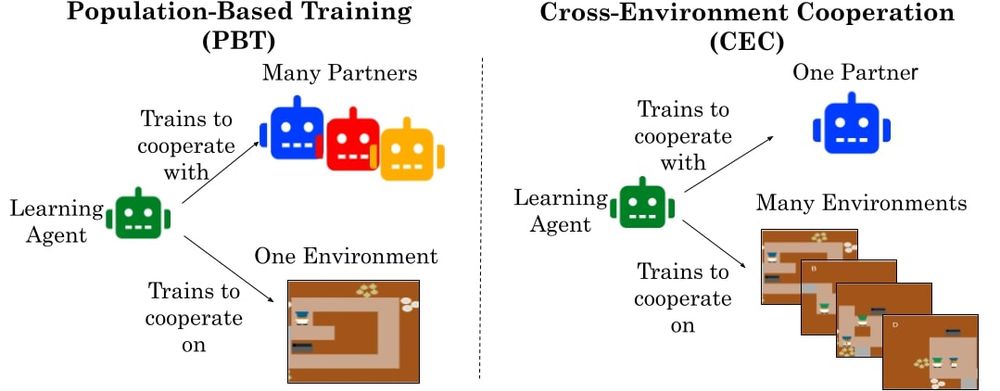

Assistant Professor at UW and Staff Research Scientist at Google DeepMind. Social Reinforcement Learning in multi-agent and human-AI interactions. PhD from MIT. Check out https://socialrl.cs.washington.edu/ and https://natashajaques.ai/.

Posts

Media

Videos

Starter Packs

Natasha Jaques

@natashajaques.bsky.social

· Jun 12

Reposted by Natasha Jaques

Natasha Jaques

@natashajaques.bsky.social

· Jun 12

Natasha Jaques

@natashajaques.bsky.social

· Jun 12

Reposted by Natasha Jaques

Natasha Jaques

@natashajaques.bsky.social

· Apr 19

Reposted by Natasha Jaques

Natasha Jaques

@natashajaques.bsky.social

· Mar 28

Reposted by Natasha Jaques

Sharon

@sharonk.bsky.social

· Mar 12

Natasha Jaques

@natashajaques.bsky.social

· Feb 16

Reposted by Natasha Jaques

Natasha Jaques

@natashajaques.bsky.social

· Feb 13

Natasha Jaques

@natashajaques.bsky.social

· Feb 13

Natasha Jaques

@natashajaques.bsky.social

· Feb 13