Patrick Cooper

@neurocoops.bsky.social

290 followers

410 following

31 posts

Cognitive neuroscientist at CSIRO

Current: Human-AI collaboration 🤖🤝😀

Previous: cognitive control and theta oscillations, non-instrumental information, curiosity, EEG

Other: Mind controlled video games 🧠👾🕹

He/Him

Patrick Cooper

@neurocoops.bsky.social

· Jul 21

Towards a Criteria‐Based Approach to Selecting Human‐AI Interaction Mode

Artificial intelligence (AI) tools are now prevalent in many knowledge work industries. As AI becomes more capable and interactive, there is a growing need for guidance on how to employ AI most effec...

dx.doi.org

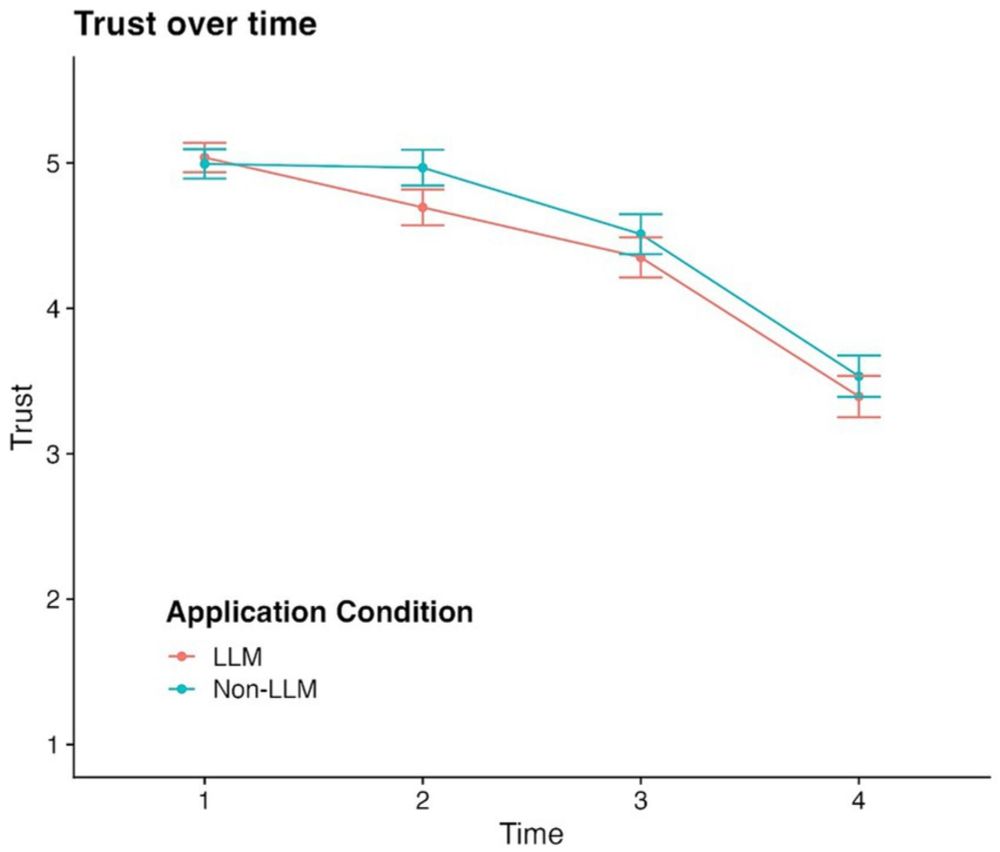

Patrick Cooper

@neurocoops.bsky.social

· Jun 24

Patrick Cooper

@neurocoops.bsky.social

· Mar 25

Reposted by Patrick Cooper

Patrick Cooper

@neurocoops.bsky.social

· Mar 20

Patrick Cooper

@neurocoops.bsky.social

· Mar 20

Reposted by Patrick Cooper

Genevieve Quek

@drquekles.bsky.social

· Feb 19

Reposted by Patrick Cooper

Jaan Aru

@jaanaru.bsky.social

· Nov 20

Does brain activity cause consciousness? A thought experiment

The authors of this Essay examine whether action potentials cause consciousness in a three-step thought experiment that assumes technology is advanced enough to fully manipulate our brains.

journals.plos.org

Reposted by Patrick Cooper

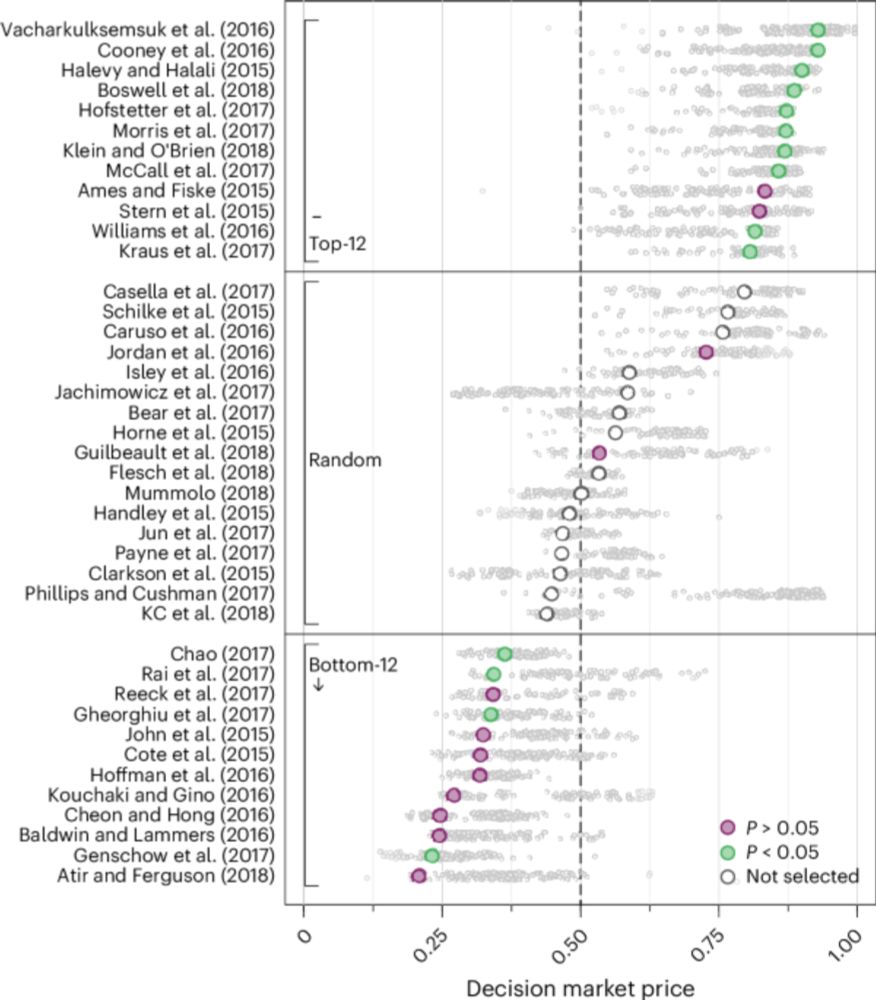

Brian Nosek

@briannosek.bsky.social

· Nov 19

Examining the replicability of online experiments selected by a decision market - Nature Human Behaviour

This study finds that decision markets can be a useful tool for selecting studies for replication. For a sample of 26 online experiments published in PNAS selected by a decision market, the authors fi...

www.nature.com

Reposted by Patrick Cooper

Patrick Cooper

@neurocoops.bsky.social

· Nov 19

Patrick Cooper

@neurocoops.bsky.social

· Nov 19