Will Turner

@renrutmailliw.bsky.social

110 followers

160 following

5 posts

cognitive neuroscience postdoc at Stanford

https://bootstrapbill.github.io/

he/him

Reposted by Will Turner

Benjamin Lowe

@brainboyben.bsky.social

· Aug 19

The Latency of a Domain-General Visual Surprise Signal is Attribute Dependent

Predictions concerning upcoming visual input play a key role in resolving percepts. Sometimes input is surprising, under which circumstances the brain must calibrate erroneous predictions so that perc...

doi.org

Reposted by Will Turner

Benjamin Lowe

@brainboyben.bsky.social

· Jul 15

Blake Richards

@tyrellturing.bsky.social

· Jul 11

Sensory responses of visual cortical neurons are not prediction errors

Predictive coding is theorized to be a ubiquitous cortical process to explain sensory responses. It asserts that the brain continuously predicts sensory information and imposes those predictions on lo...

www.biorxiv.org

Reposted by Will Turner

Will Turner

@renrutmailliw.bsky.social

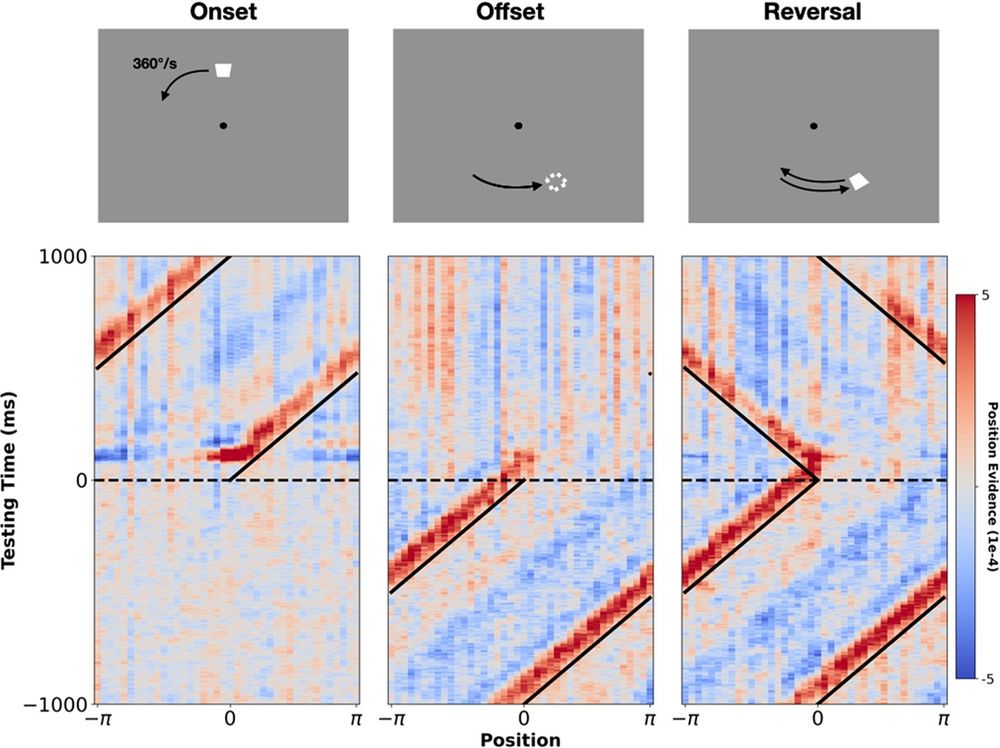

· May 23

Reposted by Will Turner

Will Turner

@renrutmailliw.bsky.social

· May 23

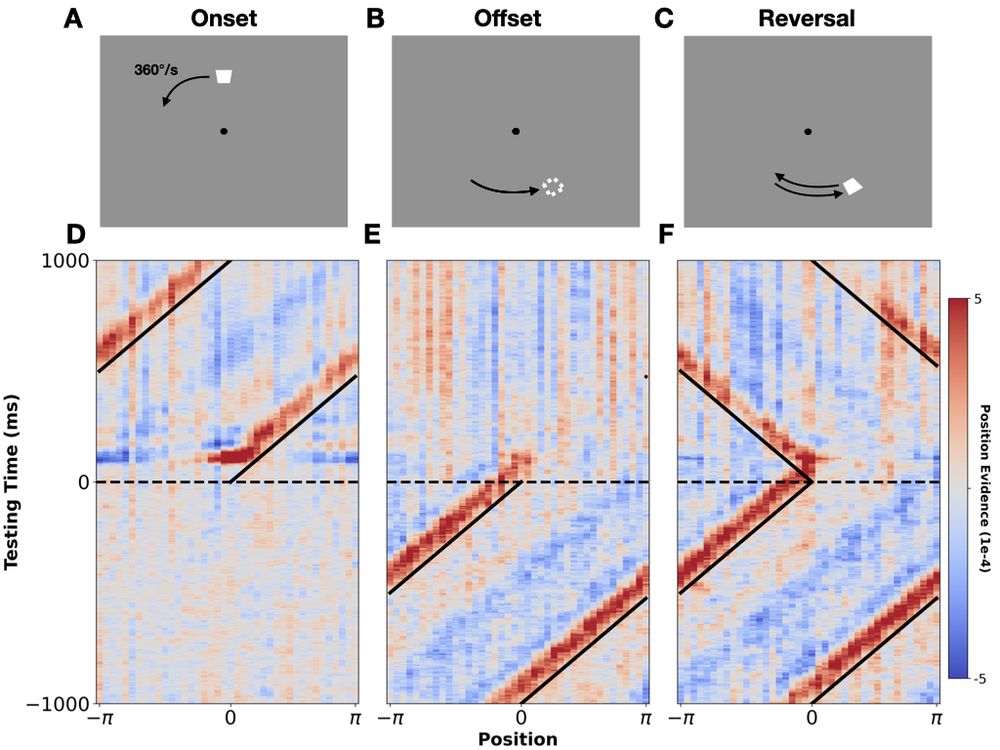

GitHub - bootstrapbill/neural-location-decoding: Contains scripts for decoding location of a moving object from EEG data. Preprint: https://www.biorxiv.org/content/10.1101/2024.04.22.590502v2

github.com
