RJ Antonello

@rjantonello.bsky.social

49 followers

69 following

7 posts

Postdoc in the Mesgarani Lab. Studying how we can use AI to understand language processing in the brain.

Reposted by RJ Antonello

RJ Antonello

@rjantonello.bsky.social

· Aug 18

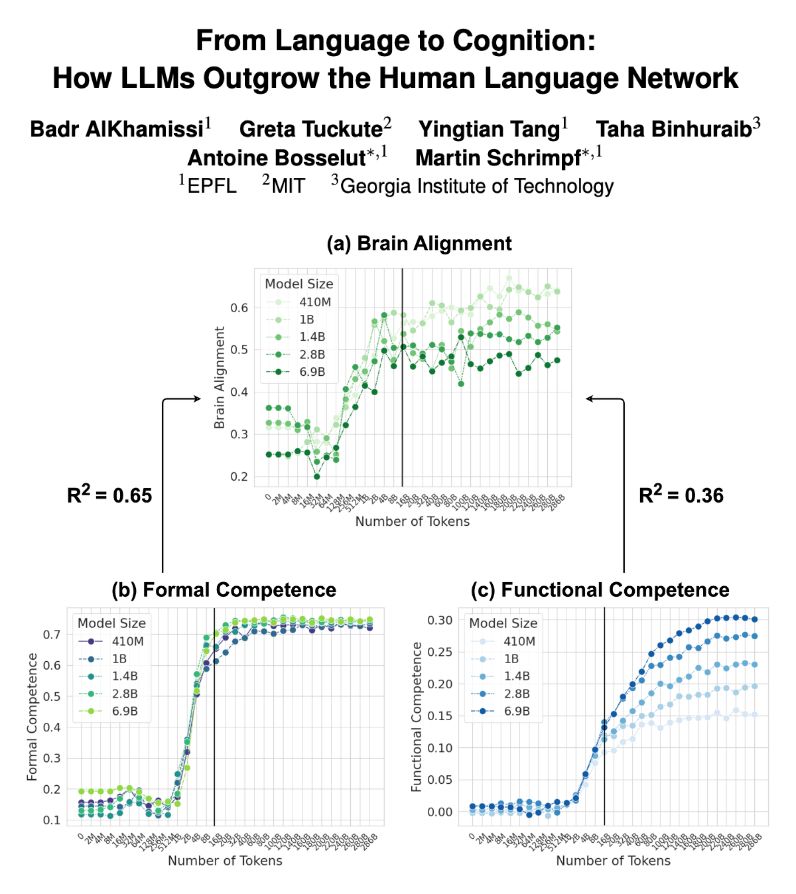

Evaluating scientific theories as predictive models in language neuroscience

Modern data-driven encoding models are highly effective at predicting brain responses to language stimuli. However, these models struggle to explain the underlying phenomena, i.e. what features of the...

www.biorxiv.org

Reposted by RJ Antonello

Ev Fedorenko

@evfedorenko.bsky.social

· Dec 27

RJ Antonello

@rjantonello.bsky.social

· Dec 8