Sami Beaumont

@samibeaumont.bsky.social

27 followers

72 following

27 posts

Psychiatrist @ GHU Paris psychiatry and neurosciences

Research in computational cognitive science @computationalbrain.bsky.social

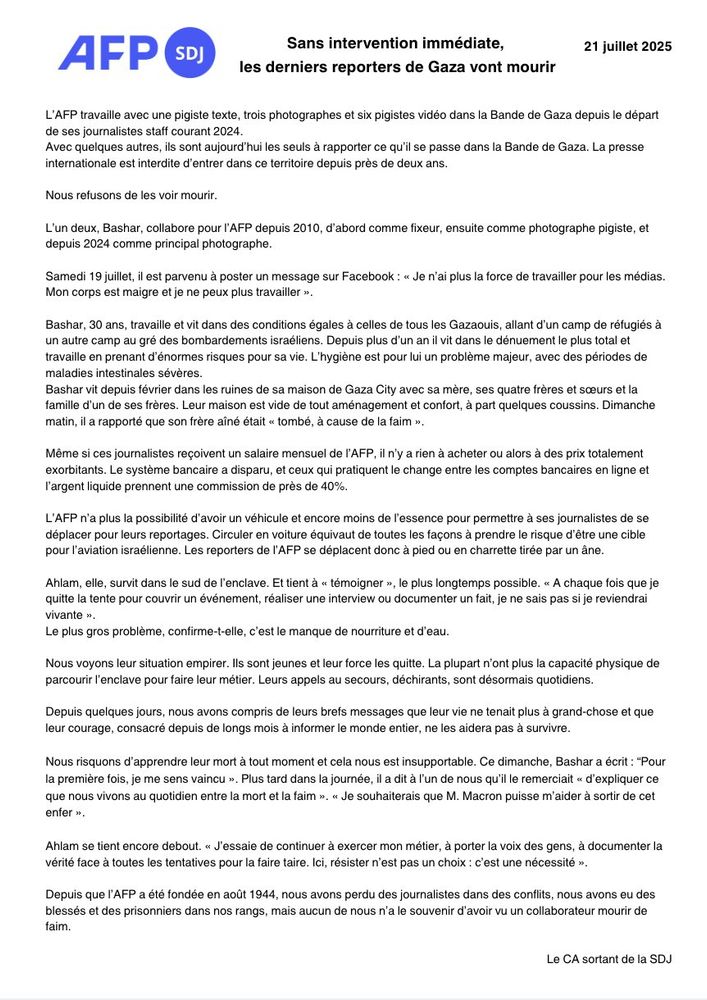

Reposted by Sami Beaumont

Esther Mondragón

@emp1.bsky.social

· Sep 6

Reposted by Sami Beaumont

Mel Andrews

@bayesianboy.bsky.social

· Jul 17

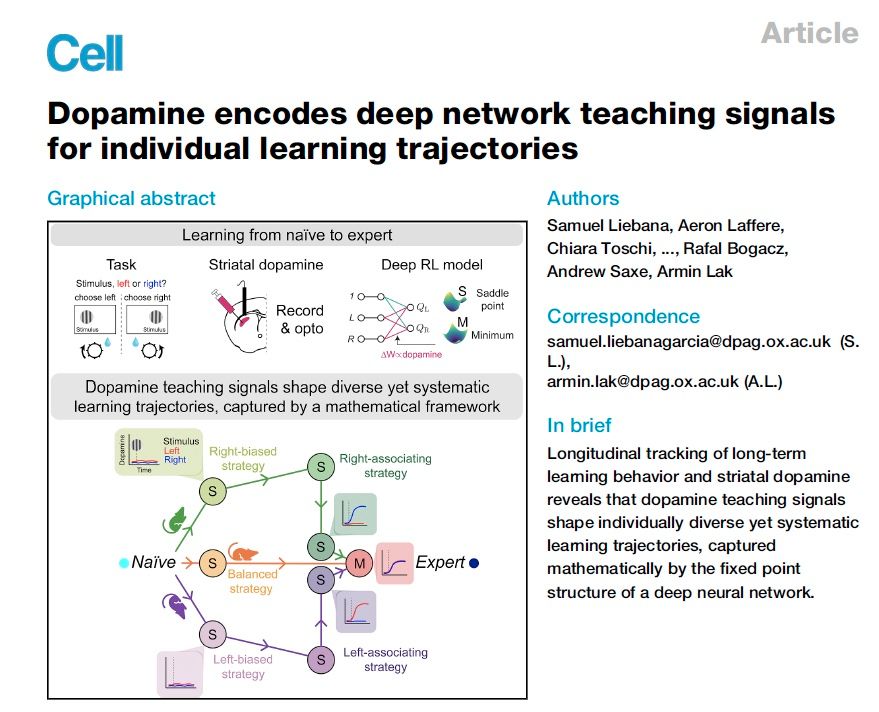

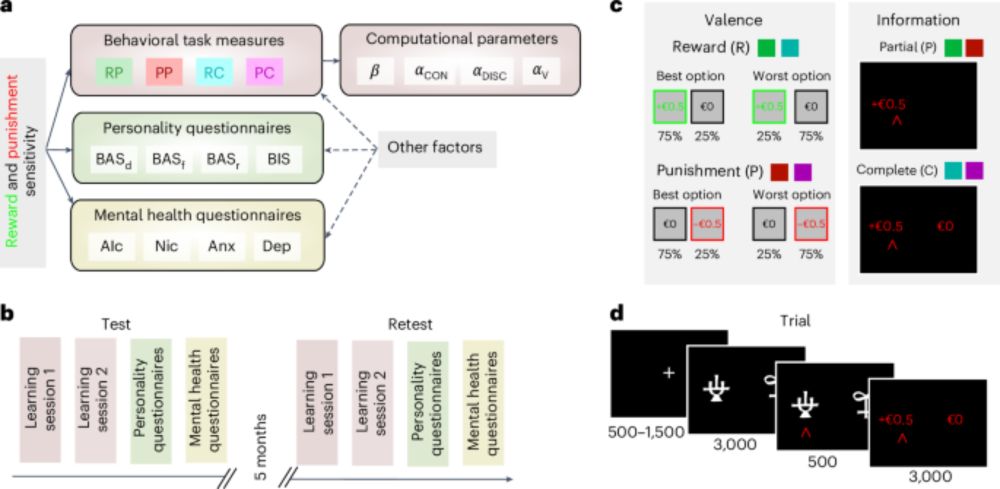

Sami Beaumont

@samibeaumont.bsky.social

· May 28

Sami Beaumont

@samibeaumont.bsky.social

· May 28

Reposted by Sami Beaumont

Tobias Hauser

@tobiasuhauser.bsky.social

· May 14

Brain Explorer - Test your brain power

The brain explorer app for Apple and Android tests your brain functions and helps researchers to understand the brain. Explore your brain power and how brain functions are important for mental health.

www.brainexplorer.net

Sami Beaumont

@samibeaumont.bsky.social

· Apr 16

Reposted by Sami Beaumont

Javier Abalos

@abalosaurus.bsky.social

· Nov 15

Behavioral threat and appeasement signals take precedence over static colors in lizard contests

Behavioral signals outweigh static color patches in determining the winner of territorial disputes. To understand what limits aggression in wall lizards, w

academic.oup.com
