Vaidehi Patil

@vaidehipatil.bsky.social

860 followers

150 following

27 posts

Ph.D. Student at UNC NLP | Prev: Apple, Amazon, Adobe (Intern) | vaidehi99.github.io | Undergrad @IITBombay

Reposted by Vaidehi Patil

Elias Stengel-Eskin

@esteng.bsky.social

· Feb 25

Vaidehi Patil

@vaidehipatil.bsky.social

· Feb 25

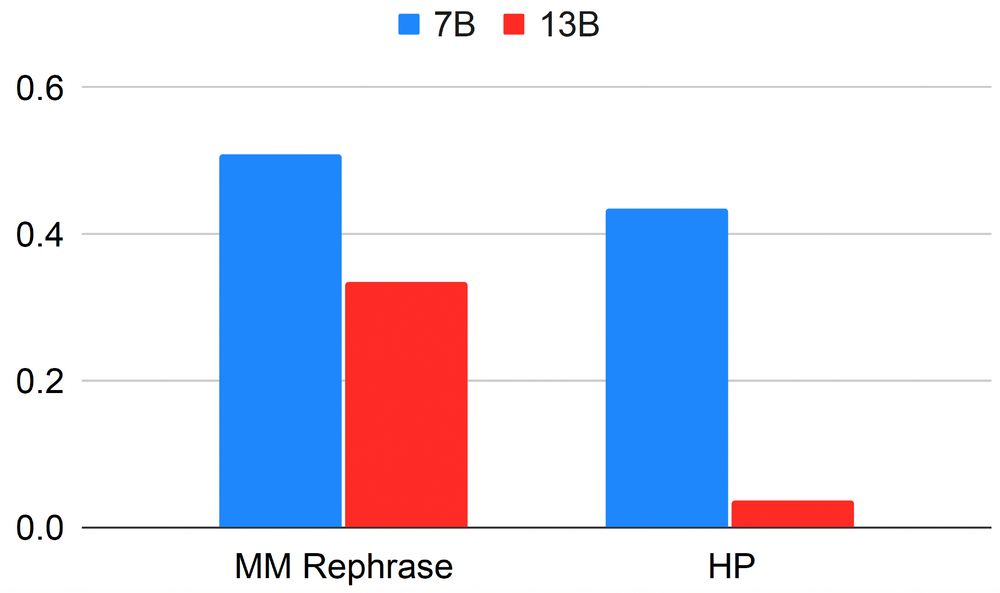

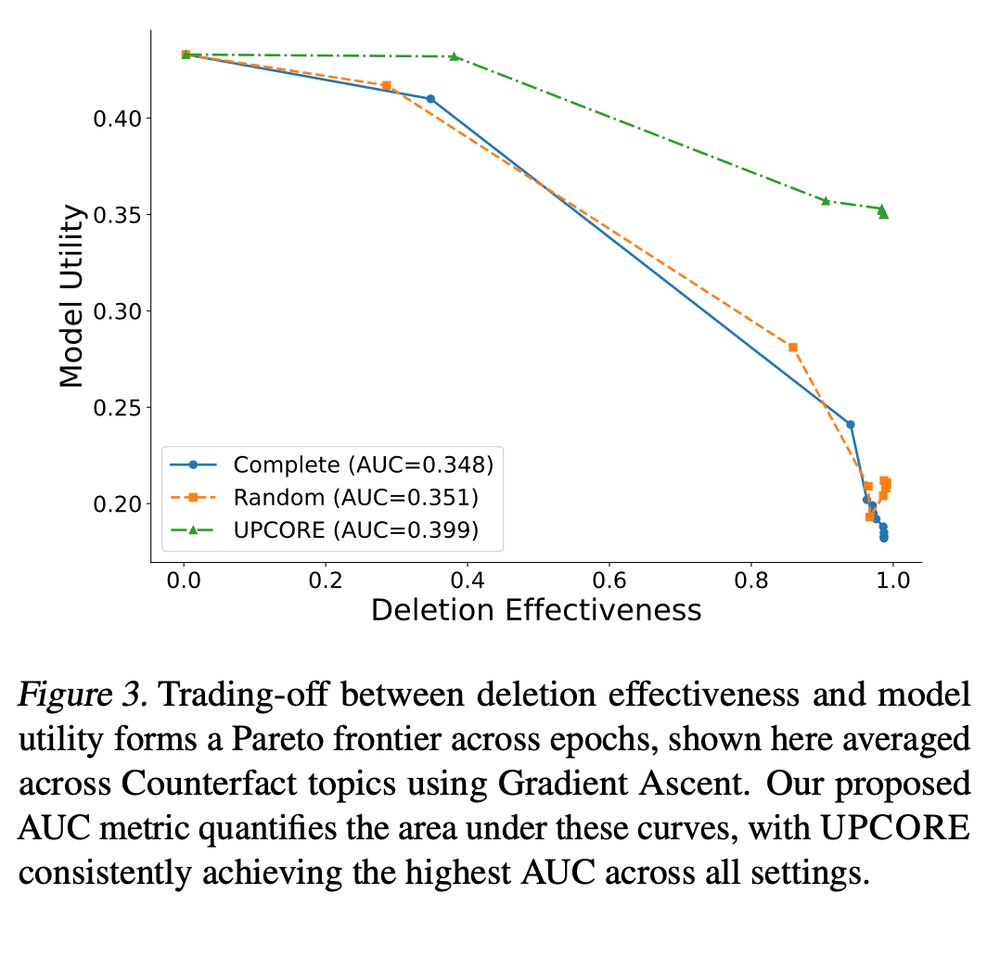

UPCORE: Utility-Preserving Coreset Selection for Balanced Unlearning

User specifications or legal frameworks often require information to be removed from pretrained models, including large language models (LLMs). This requires deleting or "forgetting" a set of data poi...

arxiv.org

Vaidehi Patil

@vaidehipatil.bsky.social

· Feb 25