Valentina Pyatkin

@valentinapy.bsky.social

5.7K followers

570 following

74 posts

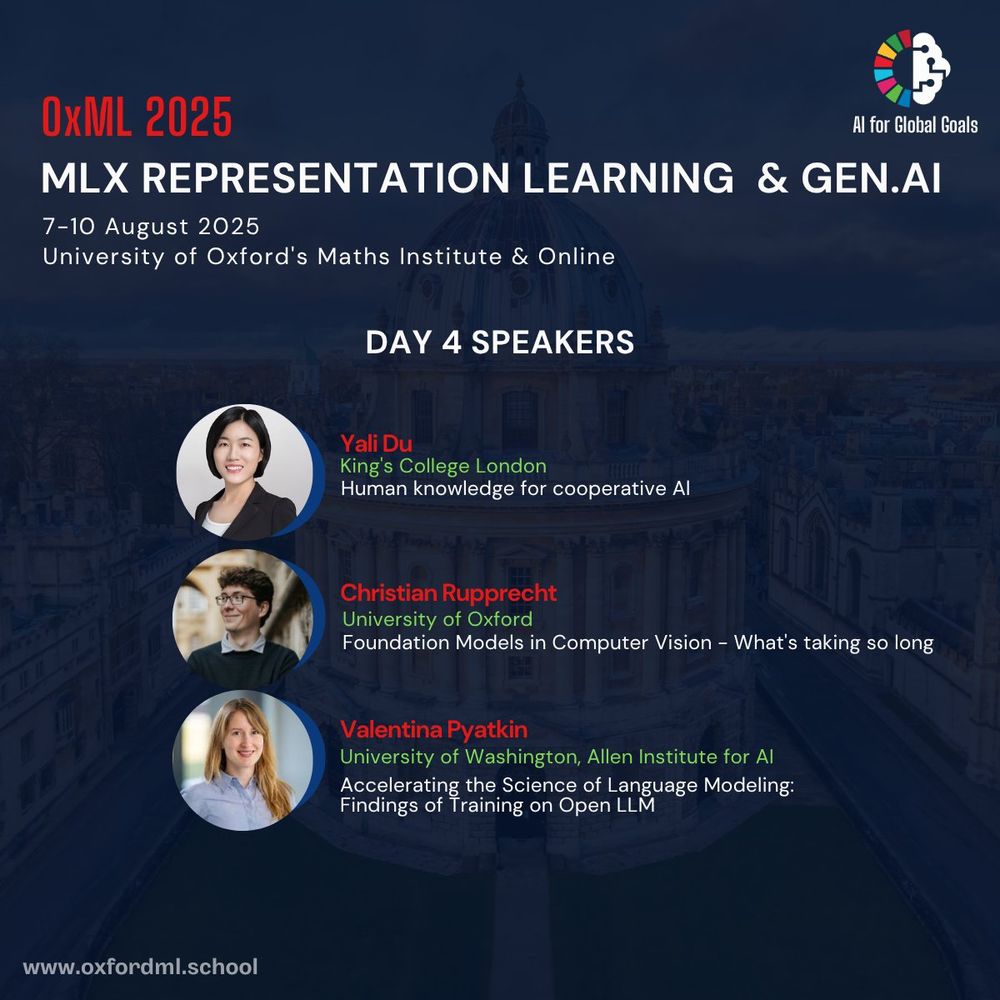

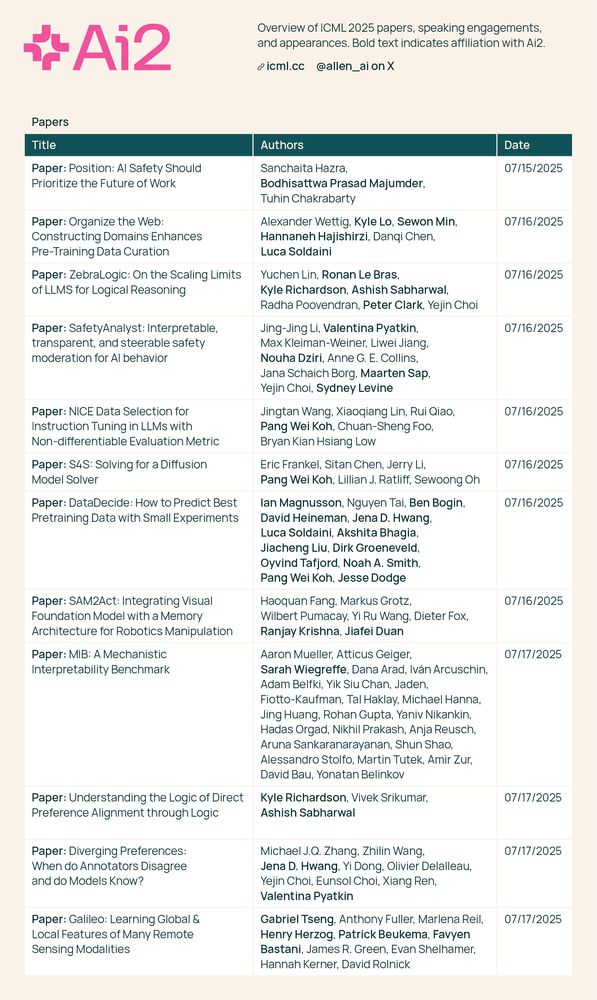

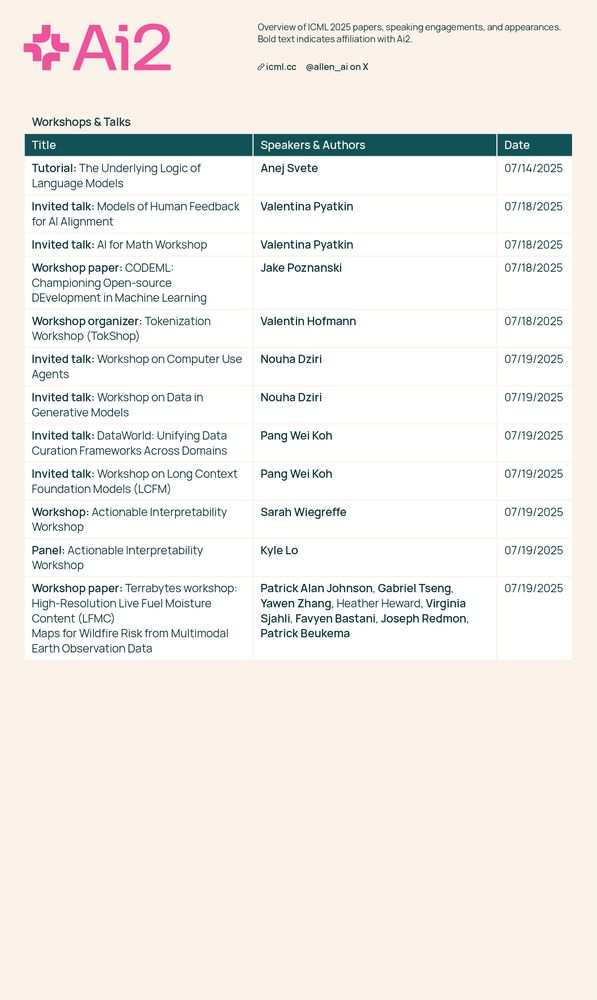

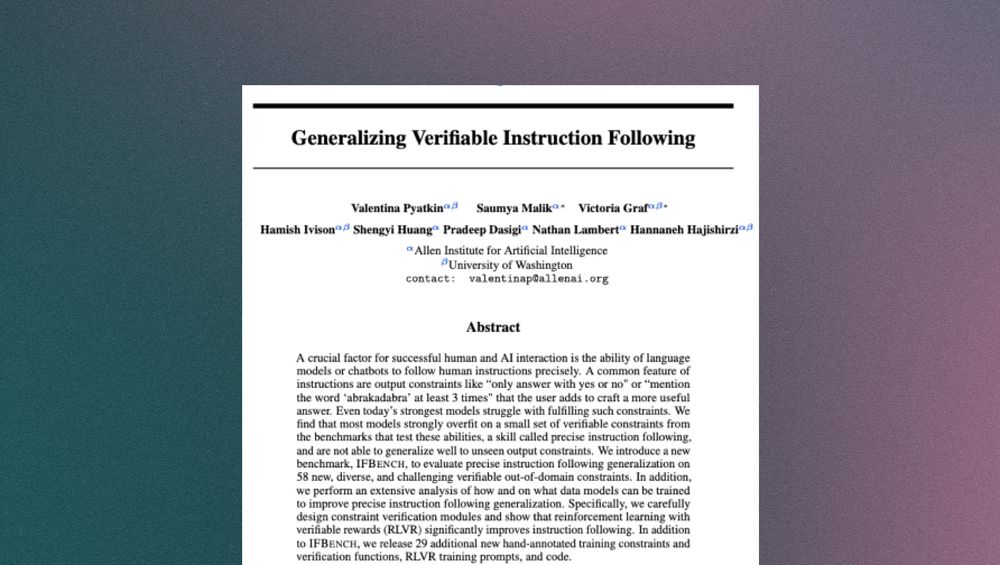

Postdoc in AI at the Allen Institute for AI & the University of Washington.

🌐 https://valentinapy.github.io

Reposted by Valentina Pyatkin

Yanai Elazar (@yanai.bsky.social) · Aug 2
