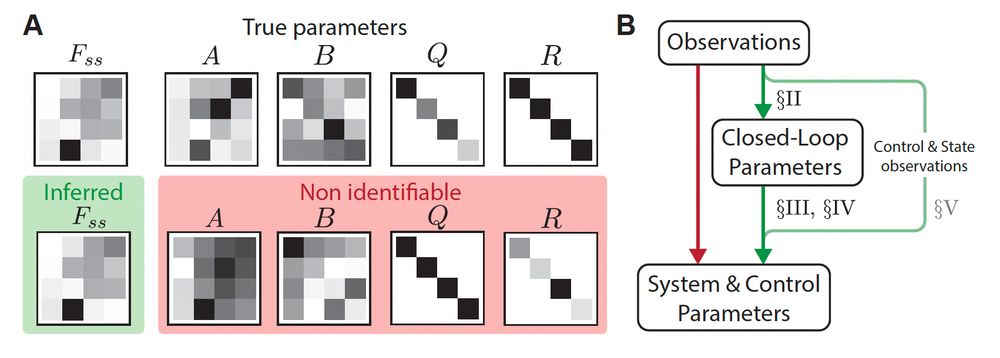

A year later, I see this as clarifying how unobserved objectives and dynamics interact to produce a continuum of explanations, and which perturbations are needed to tell those explanations apart.

This reveals a continuum of environment-objective pairs consistent with the observed behavior. Inverse RL / inverse optimal control (IOC) typically sits at one end of this continuum.

1. Infer the closed-loop parameters (which can be done efficiently with state-space model (SSM) methods ✅)

2. Derive equations relating the parameters of interest to the closed-loop dynamics they jointly set.

See our paper (also on arXiv, link above) for details!
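
A minimal Python sketch of the two steps, assuming a linear plant x_{t+1} = A x_t + B u_t driven by static feedback u_t = -K x_t, with the gain K standing in for the unobserved objective. All matrices are hypothetical, and ordinary least squares replaces a full SSM fit. Step 1 recovers A_cl = A - BK from behavior alone; step 2 shows that every candidate K then implies an environment A = A_cl + BK that produces exactly the same closed loop.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical plant x_{t+1} = A x_t + B u_t with feedback u_t = -K x_t.
# K stands in for the unobserved objective (a toy assumption, not the paper's model).
A_true = np.array([[1.0, 0.1], [0.0, 1.0]])
B = np.array([[0.0], [0.1]])
K_true = np.array([[2.0, 3.0]])
A_cl_true = A_true - B @ K_true  # the closed loop is all an observer actually sees

# Simulate many short closed-loop trials from random initial states.
N, T, n = 500, 20, 2
X = np.zeros((N, T + 1, n))
X[:, 0] = rng.normal(size=(N, n))
for t in range(T):
    X[:, t + 1] = X[:, t] @ A_cl_true.T + 0.05 * rng.normal(size=(N, n))

# Step 1: infer closed-loop parameters (least squares as a stand-in for SSM fitting).
past = X[:, :-1].reshape(-1, n)
future = X[:, 1:].reshape(-1, n)
A_cl_hat = np.linalg.lstsq(past, future, rcond=None)[0].T

# Step 2: A_cl = A - B K leaves a continuum of (A, K) pairs,
# since every candidate gain K implies an environment A = A_cl_hat + B K.
def environment_for_gain(K):
    return A_cl_hat + B @ K

candidates = [np.array([[k1, k2]]) for k1, k2 in [(0.0, 0.0), (2.0, 3.0), (5.0, -1.0)]]
pairs = [(environment_for_gain(K), K) for K in candidates]

# Every pair reproduces the same closed loop, hence the same behavior.
for A, K in pairs:
    print(np.allclose(A - B @ K, A_cl_hat))
```

Under this toy assumption, a perturbation of the input matrix from B to B' would separate the pairs, because A - B'K = A_cl + (B - B')K then depends on K.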

- Interpretable: linear dynamics conditioned on task variables

- Expressive: parameters vary nonlinearly over conditions

- Efficient: closed-form, fast inference that shares statistical power across conditions. [6/6]
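
The "linear given the condition, nonlinear across conditions" idea can be sketched with a basis-function expansion A(u) = Σ_k φ_k(u) W_k. This assumes observed states (in EM the states are latent and enter through posterior expectations) and hypothetical Fourier features φ(u) of a scalar condition u. Because x_{t+1} = W (φ(u) ⊗ x_t) is linear in the stacked weights W, a single closed-form least-squares solve fits every condition at once. It is plain least squares, not the Bayesian version mentioned in the thread.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 2  # latent dimension

def basis(u):
    # Hypothetical Fourier features of a scalar condition u (e.g. a target angle).
    return np.array([1.0, np.cos(u), np.sin(u)])

def A_of_u(W, u):
    # A(u) = sum_k phi_k(u) W_k, with W = [W_0 W_1 W_2] stacked side by side.
    phi = basis(u)
    return sum(phi[k] * W[:, k * n:(k + 1) * n] for k in range(len(phi)))

# Hypothetical ground-truth weights, so A(u) varies nonlinearly (via cos/sin) in u.
W_true = np.hstack([0.8 * np.eye(n),
                    np.diag([0.1, -0.1]),
                    np.array([[0.0, 0.1], [-0.1, 0.0]])])

# Simulate trials, each with its own condition u drawn uniformly on [0, 2*pi).
Z, Y = [], []
for _ in range(300):
    u = rng.uniform(0, 2 * np.pi)
    x = rng.normal(size=n)
    for _ in range(15):
        x_next = A_of_u(W_true, u) @ x + 0.05 * rng.normal(size=n)
        Z.append(np.kron(basis(u), x))  # features kron(phi(u), x_t)
        Y.append(x_next)
        x = x_next
Z, Y = np.array(Z), np.array(Y)

# x_{t+1} is linear in W, so one closed-form solve covers all conditions.
W_hat = np.linalg.lstsq(Z, Y, rcond=None)[0].T  # shape (n, n * number of basis functions)

u_new = 1.234  # an arbitrary condition that never appeared exactly in training
print(np.round(A_of_u(W_hat, u_new), 3))
print(np.round(A_of_u(W_true, u_new), 3))
```

Since every trial informs the same W, the fit also yields A(u) at conditions never seen in training, which is one way to read "shares statistical power across conditions".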

✅ Exact latent state inference with Kalman filtering/smoothing;

✅ Tractable Bayesian learning via closed-form EM updates using “conditionally linear regression”, a regression trick performed in a basis-function space. [4/5]
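
Once A(u_t) is fixed by the condition sequence, the model is an ordinary time-varying linear-Gaussian SSM, so exact posterior inference is a standard Kalman filter followed by a Rauch-Tung-Striebel smoother. A minimal sketch; the rotation-style A(u), observation matrix C, noise covariances Q and R, and condition sequence are all hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, T = 2, 5, 300  # latent dim, observed dim, time steps

def A_of_u(u):
    # Hypothetical condition-dependent dynamics: damped rotation whose angle depends on u.
    theta = 0.3 * np.sin(u)
    return 0.95 * np.array([[np.cos(theta), -np.sin(theta)],
                            [np.sin(theta), np.cos(theta)]])

C = rng.normal(size=(p, n))  # observation matrix
Q = 0.01 * np.eye(n)         # process noise covariance
R = 0.5 * np.eye(p)          # observation noise covariance

# A slowly varying condition u_t: dynamics are time-varying but linear given u.
u = np.linspace(0, 4 * np.pi, T)
A = np.array([A_of_u(ut) for ut in u])  # A[t] maps x_t to x_{t+1}
x, y = np.zeros((T, n)), np.zeros((T, p))
x[0] = rng.normal(size=n)
for t in range(T):
    if t > 0:
        x[t] = A[t - 1] @ x[t - 1] + rng.multivariate_normal(np.zeros(n), Q)
    y[t] = C @ x[t] + rng.multivariate_normal(np.zeros(p), R)

def kalman_filter(y, A, C, Q, R, m0, P0):
    T, n = len(y), len(m0)
    m_f, P_f = np.zeros((T, n)), np.zeros((T, n, n))  # filtered moments
    m_p, P_p = np.zeros((T, n)), np.zeros((T, n, n))  # one-step predictions
    m, P = m0, P0
    for t in range(T):
        if t > 0:  # predict with the condition-specific A
            m = A[t - 1] @ m
            P = A[t - 1] @ P @ A[t - 1].T + Q
        m_p[t], P_p[t] = m, P
        S = C @ P @ C.T + R
        K = np.linalg.solve(S, C @ P).T  # gain P C^T S^{-1} (S and P are symmetric)
        m = m + K @ (y[t] - C @ m)
        P = P - K @ C @ P
        m_f[t], P_f[t] = m, P
    return m_f, P_f, m_p, P_p

def rts_smoother(m_f, P_f, m_p, P_p, A):
    m_s, P_s = m_f.copy(), P_f.copy()
    for t in range(len(m_f) - 2, -1, -1):  # backward pass
        G = P_f[t] @ A[t].T @ np.linalg.inv(P_p[t + 1])
        m_s[t] = m_f[t] + G @ (m_s[t + 1] - m_p[t + 1])
        P_s[t] = P_f[t] + G @ (P_s[t + 1] - P_p[t + 1]) @ G.T
    return m_s, P_s

m_f, P_f, m_p, P_p = kalman_filter(y, A, C, Q, R, np.zeros(n), np.eye(n))
m_s, P_s = rts_smoother(m_f, P_f, m_p, P_p, A)

def mse(est):
    return np.mean((est - x) ** 2)

x_ls = y @ np.linalg.pinv(C).T  # per-step least squares that ignores the dynamics
print(mse(x_ls), mse(m_f), mse(m_s))
```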

CLDS (conditionally linear dynamical systems) leverages task conditions to approximate the full nonlinear dynamics with locally linear LDSs, bridging the benefits of linear and nonlinear models. [3/5]
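
For intuition about "locally linear", here is a toy nonlinear RNN (hypothetical, not from the paper) x_{t+1} = x_t + dt(-x_t + tanh(J x_t + b u)). For each fixed condition u, linearizing around the fixed point x*(u) gives a local LDS with matrix A(u), and A(u) changes nonlinearly with u. This only illustrates the intuition; CLDS learns A(u) from data rather than deriving it from a known f.

```python
import numpy as np

rng = np.random.default_rng(3)
n, dt = 3, 0.1
J = 0.5 * np.linalg.qr(rng.normal(size=(n, n)))[0]  # spectral norm 0.5, so a contraction
b = rng.normal(size=n)

def f(x, u):
    # Hypothetical nonlinear RNN step driven by a scalar condition u.
    return x + dt * (-x + np.tanh(J @ x + b * u))

def fixed_point(u, iters=200):
    # Solve x* = tanh(J x* + b u) by fixed-point iteration (converges since ||J|| < 1).
    x = np.zeros(n)
    for _ in range(iters):
        x = np.tanh(J @ x + b * u)
    return x

def local_lds(u):
    # Linearize f around x*(u): x_{t+1} - x* ≈ A(u) (x_t - x*).
    x_star = fixed_point(u)
    d = 1.0 - np.tanh(J @ x_star + b * u) ** 2  # derivative of tanh at x*
    A = (1.0 - dt) * np.eye(n) + dt * d[:, None] * J
    return x_star, A

for u in [0.0, 1.0, 2.0]:
    x_star, A = local_lds(u)
    delta = 1e-3 * rng.normal(size=n)
    err = np.linalg.norm(f(x_star + delta, u) - (x_star + A @ delta))  # second-order small
    print(u, np.round(np.linalg.eigvals(A), 3), err)
```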

@lipshutz.bsky.social), Jonathan Pillow (@jpillowtime.bsky.social), and Alex Williams (@itsneuronal.bsky.social).

🔗 OpenReview: openreview.net/forum?id=xgm...

🖥️ Code: github.com/neurostatsla...