Interested in: RL, CV, 3D reconstruction, robotics, SfM, etc.

Climber, ashtangi.

diffusion.csail.mit.edu

www.youtube.com/playlist?lis...

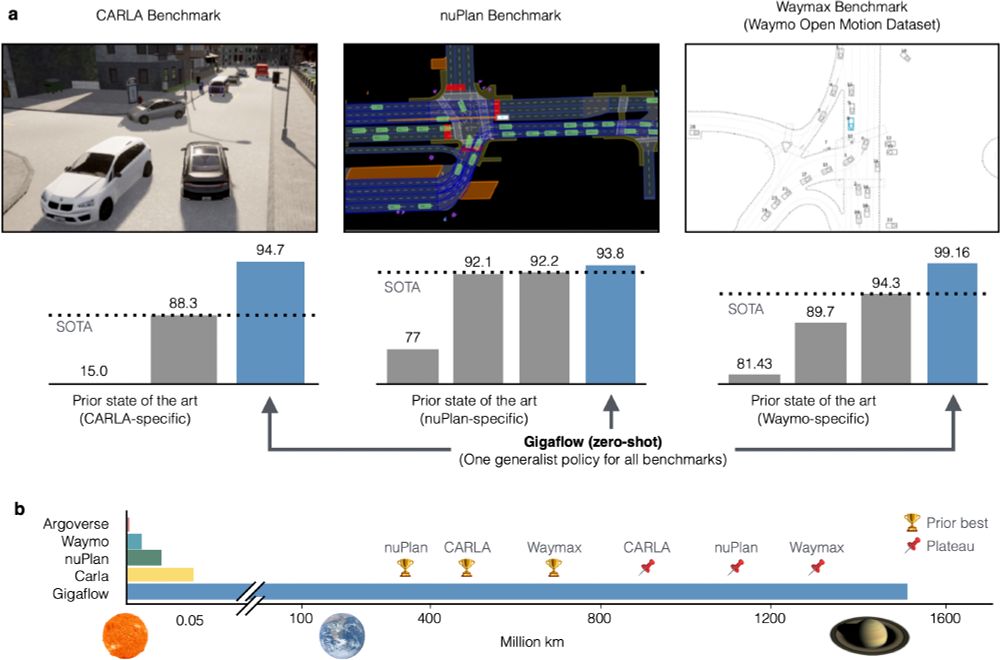

It is SOTA on every planning benchmark we tried.

In self-play, it goes 20 years between collisions.

Sven Elflein, Qunjie Zhou, Sérgio Agostinho, @lealtaixe.bsky.social

tl;dr: feed-forward multiview *3R; good for rough pose estimation, but optimization might be needed for higher precision

arxiv.org/abs/2501.14914

Yan Xia, Yunxiang Lu, Oussema Dhaouadi, João F. Henriques, Daniel Cremers

tl;dr: DUSt3R-PointNet matching with a transformer, like SG/LG.

arxiv.org/abs/2412.10308

Honggyu An, Jinhyeon Kim, Seonghoon Park, Jaewoo Jung, Jisang Han, Sunghwan Hong, Seungryong Kim

arxiv.org/abs/2412.09072

Zhenggang Tang, Yuchen Fan, Dilin Wang, Hongyu Xu, Rakesh Ranjan, Alexander Schwing, Zhicheng Yan

tl;dr: multi-view decoder blocks/Cross-Reference-View attention blocks->DUSt3R

arxiv.org/abs/2412.06974

Qiankun Gao, et al.

tl;dr: learnable mask->high dynamic foreground+low dynamic background Gaussians; Relay Gaussians->motion trajectories->motion segments

arxiv.org/abs/2412.02493

Yunyang Xiong and 12 co-authors

tl;dr: ViT + Memory bank attention = speed

arxiv.org/abs/2411.18933

- incremental mapper with absolute pose priors

- CUDA-based BA through Ceres (disabled by default).

- PoseLib's RANSACs

- New BA covariance estimation, faster and more robust than Ceres.

- Fix Affine-covariant SIFT

github.com/colmap/colma...

Junyuan Deng, Wei Yin, Xiaoyang Guo, Qian Zhang, Xiaotao Hu, Weiqiang Ren, Xiaoxiao Long, Ping Tan

tl;dr: CameraImage ~perspective field+grayscale for diffusion-based monodepth&calibration

arxiv.org/abs/2411.17240

fleetwood.dev/posts/you-co...

If you fix the # of iterations, RANSAC is an argmax over hypotheses. You turn the inlier count into your policy for hypothesis selection, and train with policy gradient (DSAC, CVPR17).

github.com/vislearn/DSA...
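
To make the argmax-to-policy step concrete, here is a toy sketch on 2D line fitting. It is not the DSAC code: the function names, the sigmoid-relaxed inlier count, and the parameters `tau`, `beta`, and `alpha` are all illustrative assumptions. With a fixed budget of hypotheses, the softmax over inlier scores plays the role of the selection policy, and a score-function (REINFORCE) estimate gives the gradient of the expected task loss with respect to the scores. In a learned pipeline that gradient would be propagated further back into whatever produces the scores; the sketch stops at the scores.

```python
import numpy as np

def fit_line(p, q):
    """Line through two points as (a, b, c) with a*x + b*y + c = 0, unit normal."""
    d = q - p
    n = np.array([-d[1], d[0]])
    n = n / np.linalg.norm(n)
    return np.array([n[0], n[1], -(n @ p)])

def soft_inlier_counts(lines, pts, tau=0.05, beta=100.0):
    """Sigmoid-relaxed inlier count per hypothesis, shape [n_hyp]."""
    resid = np.abs(pts @ lines[:, :2].T + lines[:, 2])  # [n_pts, n_hyp]
    return (1.0 / (1.0 + np.exp(-beta * (tau - resid)))).sum(axis=0)

def selection_policy(scores, alpha=1.0):
    """Softmax over scores; a large alpha approaches the RANSAC argmax."""
    z = alpha * (scores - scores.max())
    p = np.exp(z)
    return p / p.sum()

def reinforce_grad(scores, losses, rng, alpha=1.0, n_samples=256):
    """Monte Carlo policy-gradient estimate of d E[loss] / d scores."""
    p = selection_policy(scores, alpha)
    baseline = p @ losses  # expected loss, used as a variance-reducing baseline
    picks = rng.choice(len(scores), size=n_samples, p=p)
    grad = np.zeros_like(scores)
    for j in picks:
        onehot = np.zeros_like(scores)
        onehot[j] = 1.0
        # d log p_j / d scores = alpha * (onehot_j - p)
        grad += (losses[j] - baseline) * alpha * (onehot - p)
    return grad / n_samples

# Toy data: 60 points near y = 0.5 x + 0.2, plus 40 uniform outliers.
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, 60)
inliers = np.stack([x, 0.5 * x + 0.2 + rng.normal(0, 0.01, 60)], axis=1)
pts = np.concatenate([inliers, rng.uniform(0, 1, (40, 2))])

n_hyp = 32  # fixed number of iterations, i.e. a fixed hypothesis pool
idx = np.array([rng.choice(len(pts), 2, replace=False) for _ in range(n_hyp)])
lines = np.stack([fit_line(pts[i], pts[j]) for i, j in idx])

scores = soft_inlier_counts(lines, pts)
probs = selection_policy(scores, alpha=0.5)

# Task loss per hypothesis: mean distance of the true inliers to the line.
losses = np.abs(inliers @ lines[:, :2].T + lines[:, 2]).mean(axis=0)
grad = reinforce_grad(scores, losses, rng, alpha=0.5)
```

The hard argmax has no useful gradient, which is why the selection is made stochastic here: sampling from `probs` keeps the best-scoring hypothesis the most likely pick while letting the expected loss be differentiated through the selection probabilities.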

Excited to share our latest work (accepted to NeurIPS 2024) on understanding working memory in multi-task RNN models using naturalistic stimuli, with @takuito.bsky.social and @bashivan.bsky.social!

#tweeprint below:

vladimiryugay.github.io/magic_slam/i...

1/7

Yuzhou Tang, Dejun Xu, Yongjie Hou, Zhenzhong Wang, Min Jiang

tl;dr: nexus kernel->voxel segment->Gaussian primitives->coordinated color mapping; GS-wise uncertainty+boundary penalty

arxiv.org/abs/2411.14514
