DeepSeek R1 comes in various sizes, but the 1.5B model is crap; you need at least the 7B to get reasonable results.

GPT-4 & GPT-5 are estimated to have over a trillion parameters.

I've run 7B models locally on an 8GB GPU btw.
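Whether a 7B model fits on an 8GB card is mostly weight-storage arithmetic: parameters × bytes per parameter. A rough sketch, assuming weights dominate memory use (KV cache, activations and runtime overhead are ignored) and using idealized byte widths per precision; `weight_memory_gb` and `fits` are hypothetical helpers:

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; KV cache, activations and runtime
# overhead are ignored, so real usage will be somewhat higher.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floats
    "int8": 1.0,  # idealized 8-bit quantization
    "int4": 0.5,  # idealized 4-bit quantization (real formats add some overhead)
}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def fits(n_params: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weight_memory_gb(n_params, precision) <= vram_gb


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(7e9, precision)
        print(f"7B @ {precision}: {gb:.1f} GB, fits in 8 GB: {fits(7e9, precision, 8)}")
```

Under these assumptions, 7B weights need about 14 GB at fp16, so running one on an 8GB GPU implies quantization: 7 GB at 8-bit is already tight once the KV cache is added, while 3.5 GB at 4-bit leaves headroom. The same formula puts a trillion-parameter model at roughly 2,000 GB at fp16.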

(via @deanbaker13.bsky.social)

venturebeat.com/ai/weibos-ne...

https://qian.cx/posts/AA5F6A19-FE5B-42B1-9AB2-87DFA7D7FD8C

The industry logic is “more data = better AI”. This is unsustainable.

www.frontiersin.org/journals/com...

Switzerland's diving into open-source LLMs, blending transparency, sustainability & Web3 vibes. OLMo 7B & DeepSeek R1 are here to shake up AI's status quo.

Big tech's monopoly might be on the brink. Stay tuned!

When is more reasoning no longer worth it?

This paper finds near-optimal results when CoT reasoning terminates ... almost immediately?

More reason to think CoT's overrated?

doi.org/10.48550/arX...

!["Figure 4: Accuracy of DeepSeek-R1-Distill-Qwen-7B vs. position where </think> is inserted. ... even early [termination] already yield hidden states highly similar to the final one—supporting the view that most useful reasoning content is distilled early, and extended CoT traces incur diminishing returns."](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:jf4udtyuylqocyyrqdwqoxuf/bafkreiahfbtychgcilgjlu7s2ggvft66bh45u2c6xboqutnf3m6pjz2l5a@jpeg)
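The figure's experiment forces an early stop by inserting `</think>` partway through the model's reasoning trace, then compares the resulting hidden states with the final ones. A minimal sketch of both steps, assuming the trace is already tokenized (a whitespace split stands in for a real tokenizer) and assuming cosine similarity as the similarity measure, which the caption does not specify; `truncate_thinking` and `cosine_similarity` are hypothetical helpers, not code from the paper:

```python
import math


def truncate_thinking(thinking_tokens: list[str], fraction: float) -> list[str]:
    """Keep the first `fraction` of the reasoning tokens, then close the block.

    Hypothetical helper: mimics inserting </think> partway through a trace,
    so the model has to answer from the reasoning produced so far.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    cut = math.floor(len(thinking_tokens) * fraction)
    return thinking_tokens[:cut] + ["</think>"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two hidden-state vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    trace = "compute 17 * 3 first then add 4".split()
    print(truncate_thinking(trace, 0.25))  # ['compute', '17', '</think>']
```

Sweeping `fraction` from 0 to 1 and scoring the answers at each cut point gives an accuracy-vs-position curve like the one in the figure.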

This is also making me wonder about the list of models to hold the title "most powerful open source LLM in the world." GPT-2 > GPT-Neo > GPT-J > FairSeq Dense > GPT-NeoX-20B > MPT-7B > Falcon-40B > ??? > DeepSeek-R1

The models are based on the Qwen2.5 architecture and trained with SFT on data generated by DeepSeek-R1-0528.

- reasoning model

- pre-trained on 6T tokens

- architecturally similar to Mistral

Its entire pre-training dataset is oriented around language translation, often using bilingual samples.

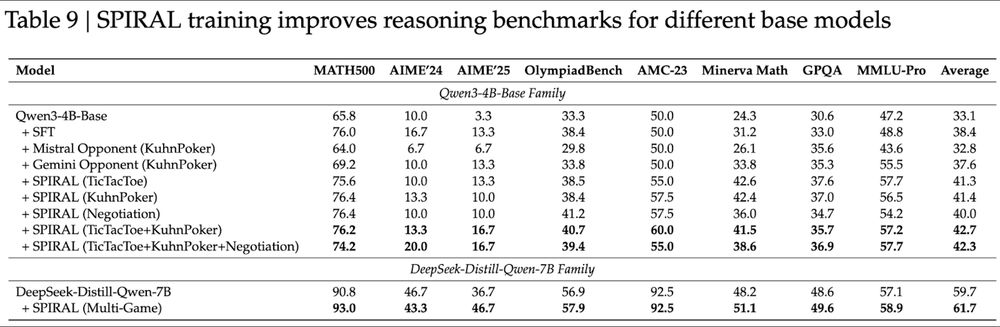

Single game: ~41% reasoning average

Multi-game: 42.7% - skills synergize!

Even strong models improve:

DeepSeek-R1-Distill-Qwen-7B jumps 59.7%→61.7%. AIME'25 +10 points! 📈
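The gains above are stated in percentage points. A small sketch converting them to relative improvements; the `gain` helper is hypothetical, and the single-game figure is treated as exactly 41% even though the post says ~41%:

```python
def gain(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    absolute = after - before
    relative = absolute / before * 100
    # Round to 2 decimals to hide floating-point noise such as 2.0000000000000071.
    return round(absolute, 2), round(relative, 2)


if __name__ == "__main__":
    print(gain(59.7, 61.7))  # (2.0, 3.35)
    print(gain(41.0, 42.7))  # (1.7, 4.15)
```

So the 59.7% → 61.7% jump is 2 points absolute, roughly a 3.35% relative improvement.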

2506.04178, cs.LG, 05 Jun 2025

🆕OpenThoughts: Data Recipes for Reasoning Models

Etash Guha, Ryan Marten, Sedrick Keh, Negin Raoof, Georgios Smyrnis, Hritik Bansal, Marianna Nezhurina, Jean Mercat, Trung Vu, Zayne Sprague, Ashima Suvarna, Benjamin Feuer, ...

Any thoughts?