I notice somebody asked for a Python version on the duckplyr GitHub and they recommended Ibis (ibis-project.org/posts/ibis-d...), which seems like it's probably the answer I'm looking for (!) (cc @urschrei.bsky.social, @jreades.bsky.social, @urbandemog.bsky.social)

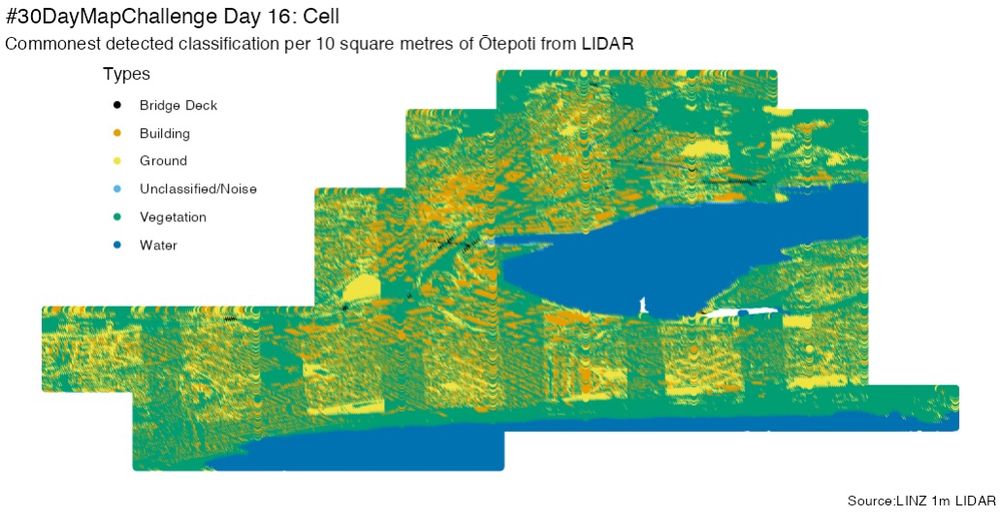

LINZ ground LIDAR point cloud data for Ōtepoti (13.64 points per m²) & showing the commonest classification in each 10 square metres (so some duckplyr in #rstats to crunch that out). It looks like the classification is partially sensitive to the sweep reading.
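
For anyone wanting to try the same kind of aggregation, here is a minimal duckplyr sketch. It assumes the point cloud is already in a data frame with hypothetical columns `X` and `Y` (projected coordinates in metres) and `Classification` (the class code), and it reads "each 10 square metres" as square cells 10 m on a side via a hypothetical `cell_size` argument. `commonest_class()` is an illustrative helper, not part of duckplyr, and the points are toy values. Ties within a cell go to the smallest class code.

```r
library(duckplyr)

# Hypothetical helper: most frequent class per square grid cell.
# Assumes columns X, Y (metres) and Classification (integer class code).
commonest_class <- function(points, cell_size = 10) {
  points |>
    # Snap each point to the lower-left corner of its grid cell
    mutate(
      cell_x = floor(X / cell_size) * cell_size,
      cell_y = floor(Y / cell_size) * cell_size
    ) |>
    # Count points per cell and class
    count(cell_x, cell_y, Classification) |>
    # Keep the class(es) with the highest count in each cell
    filter(n == max(n), .by = c(cell_x, cell_y)) |>
    # Break ties deterministically: smallest class code wins
    summarise(Classification = min(Classification), .by = c(cell_x, cell_y)) |>
    arrange(cell_x, cell_y)
}

# Toy data: two 10 m cells side by side along the x axis
points <- duckdb_tibble(
  X = c(1, 2, 3, 15, 16, 17),
  Y = c(1, 2, 3, 1, 2, 3),
  Classification = c(2L, 2L, 6L, 5L, 6L, 6L)
)

result <- collect(commonest_class(points))
result
```

Any step DuckDB can't run falls back to dplyr, so the answer should be the same either way; with real LIDAR data the counting step is where DuckDB does the heavy lifting.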

tidyverse.org/blog/2025/06...

Full blog post:

www.tidyverse.org/blog/2025/0...

duckplyr 1.1.0: now part of the tidyverse.

Familiar. Blazing fast. Made for modern data.

Install it now:

install.packages("duckplyr")

Start using your dplyr code—at DuckDB speed.

Warning: duckplyr is fast, but DuckDB allocates its memory outside R, so R's own memory reporting may not show it.

Always monitor RAM if you're near the limit.

duckplyr tracks fallbacks.

You can review them with fallback_review() and submit reports with fallback_upload() to help make it smarter.

Every fallback is a future speed boost.

If you use dbplyr, good news:

duckplyr plays nice.

Convert duck frames to lazy dbplyr tables and back in one line.
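
A sketch of that round trip, assuming `as_tbl()` (duck frame to dbplyr lazy table) and the `as_duckdb_tibble()` method for lazy DuckDB tables behave as described in the duckplyr documentation. dbplyr must be installed, and the data are toy values.

```r
library(duckplyr)  # dbplyr must also be installed for as_tbl()

# A small duck frame (toy data)
duck <- duckdb_tibble(g = c("a", "a", "b", "b", "b"), x = 1:5)

# Duck frame -> lazy dbplyr table on duckplyr's DuckDB connection
lazy <- as_tbl(duck)

# Work lazily with dbplyr; show_query(lazy_counts) would print the SQL
lazy_counts <- lazy |> count(g)

# Lazy dbplyr table -> duck frame again
back <- as_duckdb_tibble(lazy_counts)

# Sort after converting back, since SQL subqueries don't promise row order
result <- back |> arrange(g) |> collect()
result
```

Sorting happens on the duck frame rather than inside the lazy table, because an ORDER BY buried in a subquery isn't guaranteed to survive the conversion.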

Need SQL-like power?

Use DuckDB functions right inside duckplyr pipelines with dd$.

Yes, even Levenshtein distance.
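
A minimal sketch with toy strings: `dd$levenshtein()` hands the call to DuckDB's own `levenshtein()` function, which dplyr itself doesn't provide. The column names `a`, `b` and `dist` are illustrative.

```r
library(duckplyr)

words <- duckdb_tibble(
  a = c("kitten", "flaw"),
  b = c("sitting", "lawn")
)

# dd$ routes the function call directly to DuckDB
result <- words |>
  mutate(dist = dd$levenshtein(a, b)) |>
  arrange(a) |>
  collect()

result
```

"kitten" to "sitting" is the textbook case with distance 3; "flaw" to "lawn" takes two edits (drop the f, add the n).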

Got data too big for RAM?

duckplyr works out-of-memory.

Read/write Parquet. Process directly from disk. It just works.
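
A small sketch of the disk-backed workflow, assuming duckplyr's `compute_parquet()` (write a frame to Parquet) and `read_parquet_duckdb()` (open a Parquet file lazily). The file is a throwaway temp file and the data are toy values.

```r
library(duckplyr)

# Write a small toy table to a temporary Parquet file
path <- tempfile(fileext = ".parquet")
duckdb_tibble(x = 1:10, y = rep(c("a", "b"), 5)) |>
  compute_parquet(path)

# Open it lazily: rows are read from disk only when the query runs
from_disk <- read_parquet_duckdb(path)

result <- from_disk |>
  filter(x > 4) |>
  summarise(n = n(), .by = y) |>
  arrange(y) |>
  collect()

result
```

With a real dataset you would skip the writing step and point `read_parquet_duckdb()` at your own file(s); only the summarised result is pulled into R by `collect()`.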

Example:

library(duckplyr)

df <- as_duckdb_tibble(bigdata)

df |> filter(x > 10) |> group_by(y) |> summarise(n = n())

Familiar code. Faster engine.

Use it two ways:

Load duckplyr with library(duckplyr) to override dplyr for every data frame (methods_restore() undoes this)

Or just convert specific data frames with as_duckdb_tibble()
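
Both routes in one sketch, with a toy data frame: loading the package switches every data frame over to duckplyr, `methods_restore()` switches that back off, and `as_duckdb_tibble()` then opts in a single frame.

```r
library(duckplyr)  # overrides dplyr methods: all data frames now go through duckplyr

# Undo the global override; ordinary data frames use plain dplyr again
methods_restore()

# Opt in just this one data frame (toy data)
cars <- data.frame(cyl = c(4, 4, 6), mpg = c(30, 20, 18))
duck_cars <- as_duckdb_tibble(cars)

result <- duck_cars |>
  summarise(mean_mpg = mean(mpg), .by = cyl) |>
  arrange(cyl) |>
  collect()

result
```

The per-frame route is handy in shared scripts, where a global switch could surprise other code running in the same session.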

When DuckDB can’t handle something, duckplyr falls back to dplyr.

Same results. Always.

It’s safe to try.

duckplyr handles huge datasets with ease.

6M rows?

10× faster than dplyr in benchmarks.

Less memory. Less time. No sweat.

duckplyr is a drop-in replacement for dplyr.

Same code. Same verbs.

But it runs on DuckDB under the hood—for serious speed.

Your wrangling just got a massive upgrade.

duckplyr is now in the tidyverse—and it’s fast. Really fast. 🧵

Trick example: DelayedArray. Duckplyr with DuckDB

R can now work on chunks of data, lazily, like Python’s dask or pandas.read_csv(chunksize=…).

| Tool | Time | 💾 Memory |
|---|---|---|
| dplyr | ~12–80 s 🐢 | up to 2,099 MB |
| duckplyr | ~2–10 s 🚀 | up to 675 MB |
| polars | ~1.8–10 s 🚀🚀 | as low as 35 MB ✨ |
| dbplyr | 47–171 s 😬 (but I'm probably bad at it) | ~2 GB |
