Reposted by: Amy Donovan, Michelle L. Mazurek, Noah L. Nathan

www.nature.com/articles/d41...

Reposted by: Amy Donovan

www.nature.com/articles/d41...

Reposted by: Amy Donovan

www.bristol.ac.uk/jobs/find/de...

Reposted by: Amy Donovan

#JedBartlett #TheWestWing #MartinSheen #Democracy

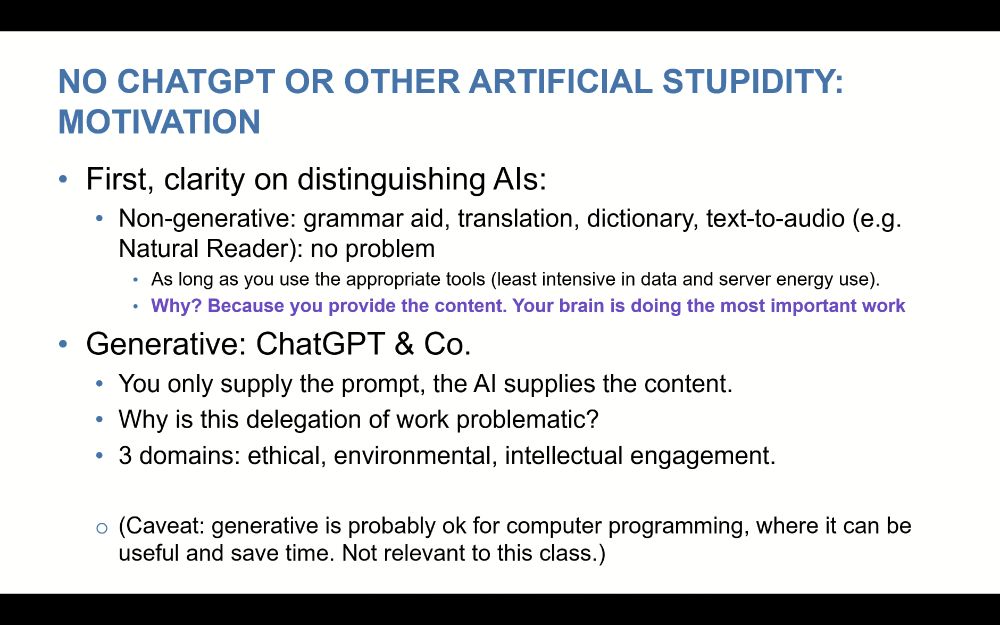

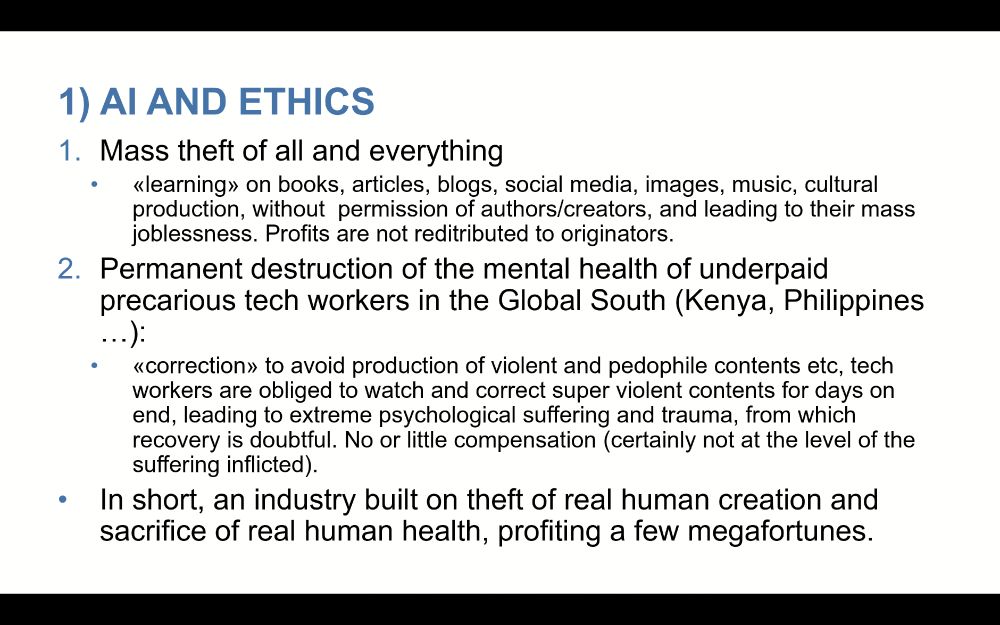

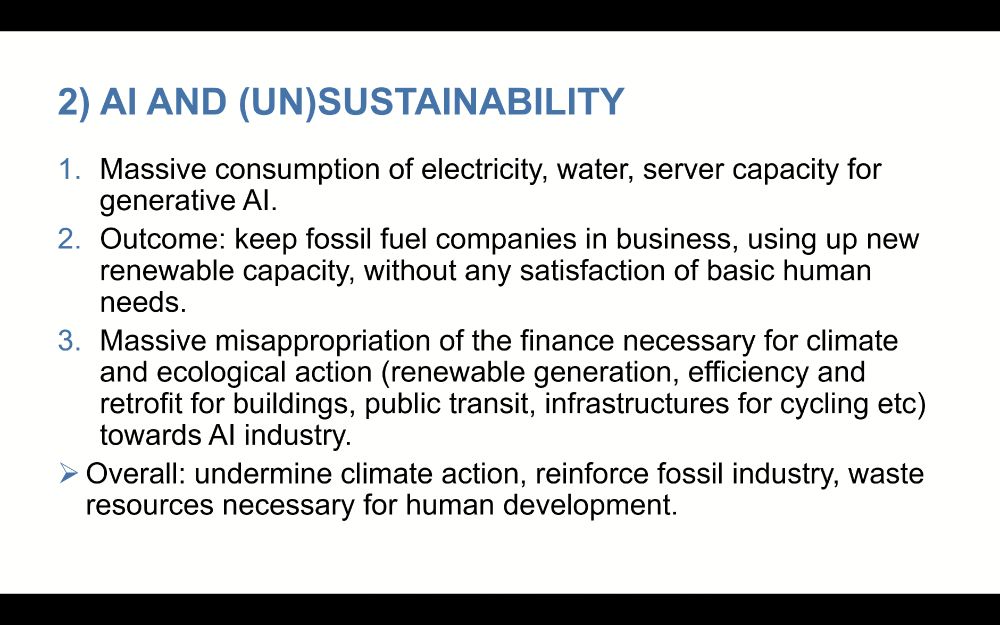

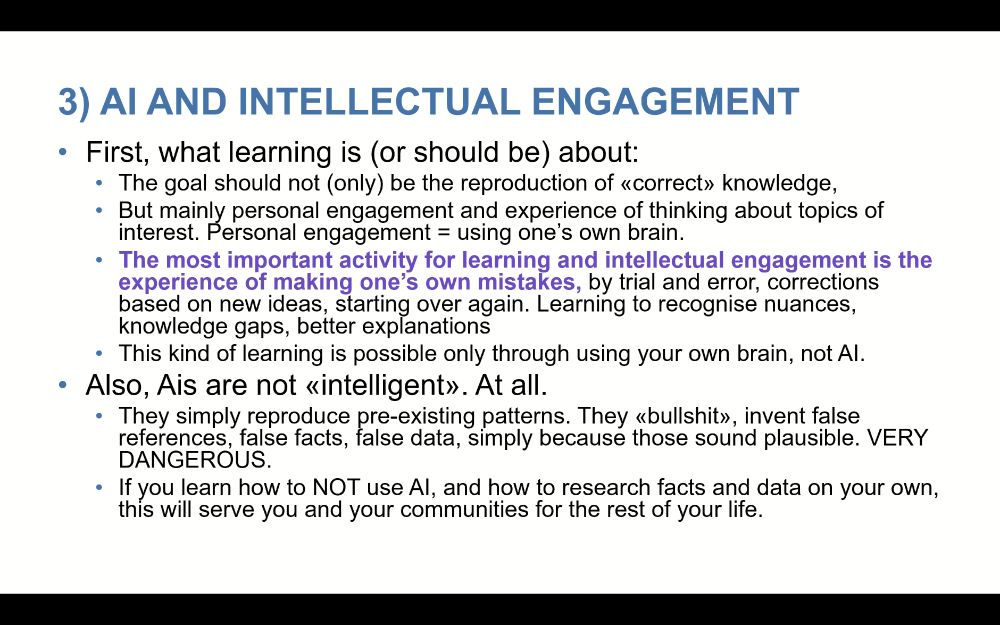

TL;DR? AI is evil, unsustainable and stupid, and I'd much rather they use their own brains, make their own mistakes, and actually learn something. 🪄

Reposted by: Amy Donovan, David Learmount

Today, the Baltic states disconnected their power grids from that of Russia.

It’s taken years of prep. What a moment.

by Amy Donovan

by Jenni Barclay — Reposted by: Amy Donovan

Reposted by: Amy Donovan

www.eas.cornell.edu/eas/programs...

Reposted by: Amy Donovan

with Dr. Symeon Makris (BGS), Dr. Max Van Wyk de Vries (University of Cambridge), Dr. Beatriz Recinos, and Prof. Simon Mudd (UoE) #naturalhazards