Our new paper shows that AI that models others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI

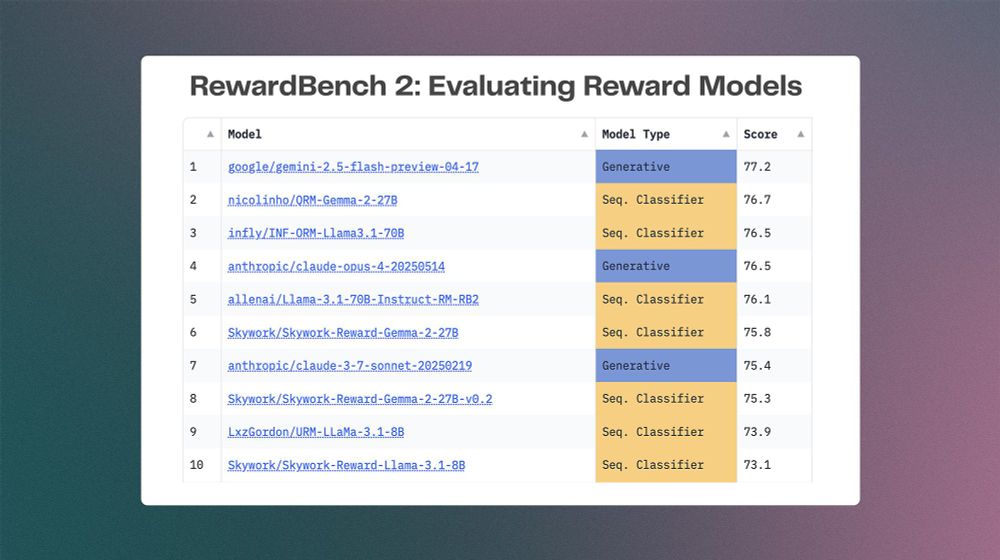

Read more in the paper here (arXiv soon!): github.com/allenai/rewa...

Dataset, leaderboard, and models here: huggingface.co/collections/...

🖇️ To present my paper "Superlatives in Context", which shows that the interpretation of superlatives is highly context-dependent and often implicit, and examines how LLMs handle such semantic underspecification

🖇️ And we will present RewardBench on Friday

Reach out if you want to chat!

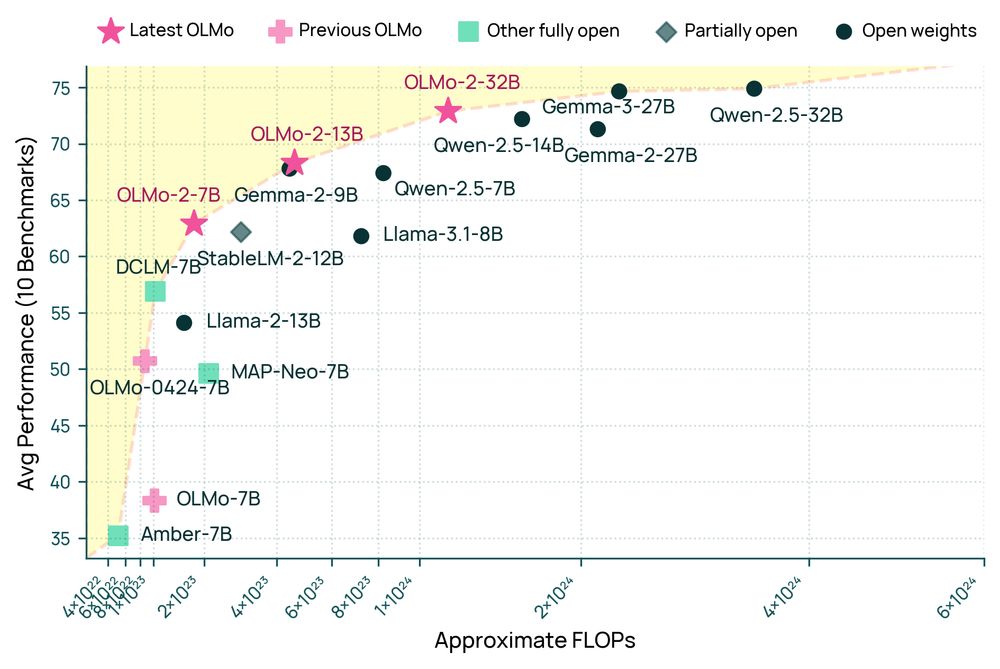

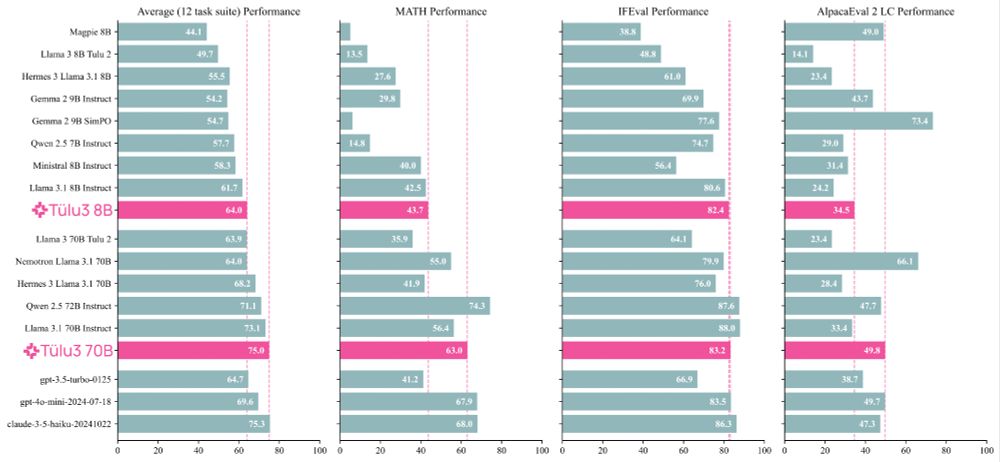

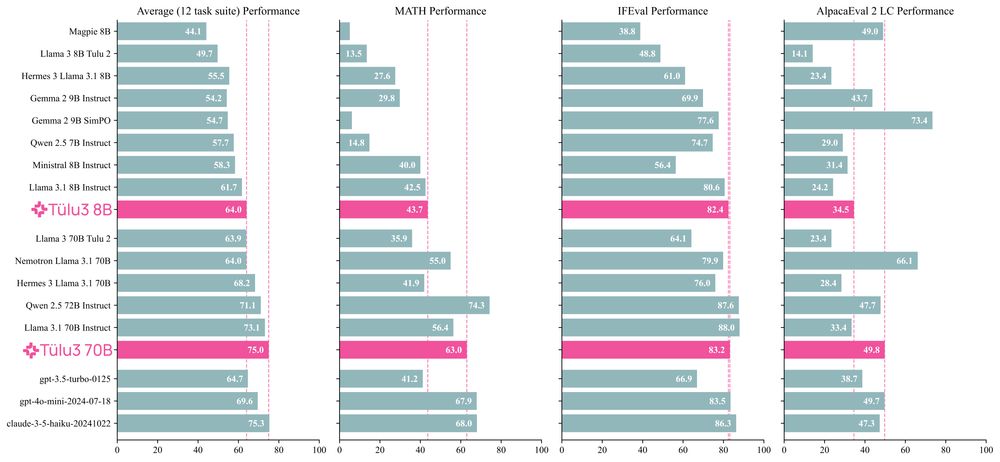

Comparable to the best open-weight models, at a fraction of the training compute. When you have a good recipe, ✨ magical things happen when you scale it up!

and @vwxyzjn.bsky.social, and preference data from @ljvmiranda.bsky.social

on an unrelated note, I'm applying to PhD programs this year 👀

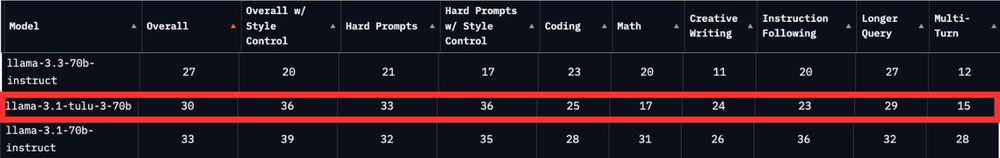

Particularly happy it is in the top 20 for Math and Multi-turn prompts :)

All the details and data on how to train a model this good are right here: arxiv.org/abs/2411.15124

Next up: looking for OLMo 2 numbers.

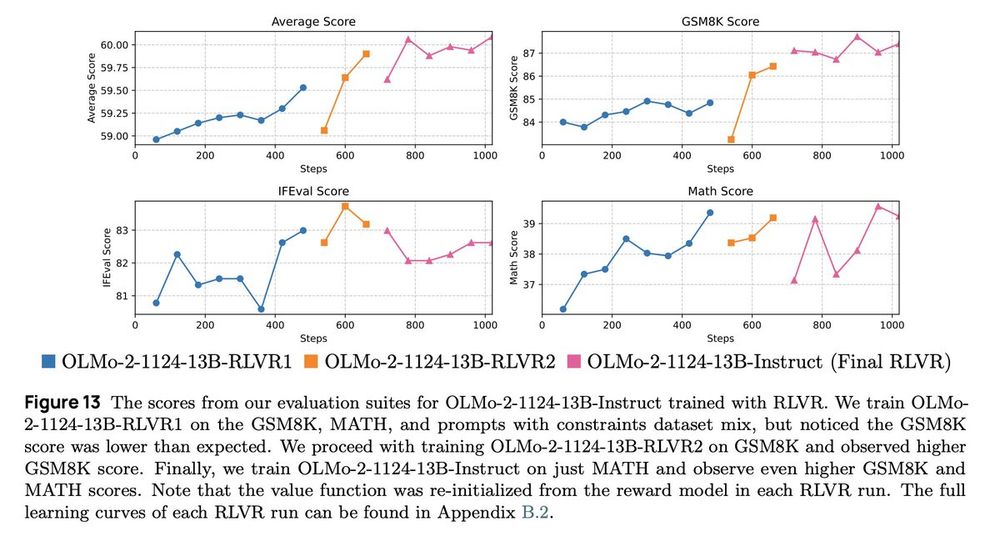

This time, we applied RLVR iteratively! Our initial RLVR checkpoint, trained on the RLVR dataset mix, showed a low GSM8K score, so we ran another round of RLVR on GSM8K only and another on MATH only 😆.

And it works! A thread 🧵 1/N
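
For readers new to RLVR, here is a minimal sketch of the two ideas in this post, under stated assumptions. It assumes the verifiable reward is a simple binary check of the model's final numeric answer against the reference (GSM8K-style; MATH answers would need a more careful, expression-aware comparison). The helpers `extract_final_answer`, `verifiable_reward`, and `plan_stages`, and the stage names, are hypothetical illustrations, not the actual training code.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Hypothetical helper: treat the last number in the completion as the answer."""
    # Drop thousands separators so "1,234" parses as one number.
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion.replace(",", ""))
    return numbers[-1] if numbers else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Assumed binary verifiable reward: 1.0 if the final answer matches, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        return 1.0 if float(answer) == float(reference) else 0.0
    except ValueError:
        # A non-numeric reference cannot be checked by this simple numeric verifier.
        return 0.0


# Hypothetical stage schedule mirroring the thread: the mix first, then GSM8K only,
# then MATH only, each stage starting from the previous stage's checkpoint.
RLVR_STAGES = ["rlvr_mix", "gsm8k_only", "math_only"]


def plan_stages(initial_checkpoint: str) -> list[tuple[str, str]]:
    """Return (dataset, starting_checkpoint) pairs, chaining checkpoints stage to stage."""
    plan = []
    checkpoint = initial_checkpoint
    for dataset in RLVR_STAGES:
        plan.append((dataset, checkpoint))
        checkpoint = f"{checkpoint}+{dataset}"
    return plan
```

Chaining the checkpoints is what makes the procedure iterative: each later, narrower RLVR stage refines the model produced by the previous one instead of restarting from the SFT model.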

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

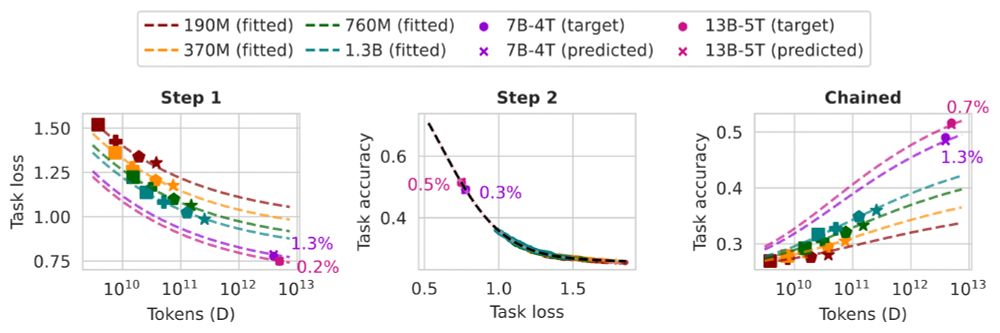

We develop task scaling laws and model ladders that predict the accuracy of OLMo 2 7B & 13B models on individual tasks to within 2 points of absolute error, at a cost of 1% of the compute used to pretrain them.

Simply the best fully open models yet.

Really proud of the work & the amazing team at @ai2.bsky.social

- brat tulu, a @jacobcares.bsky.social favorite

- PNW tulu, don’t forget where @ai2.bsky.social is from

- dank tulu 💪

- tulu at tulu, bc tulu means sunrise in Farsi

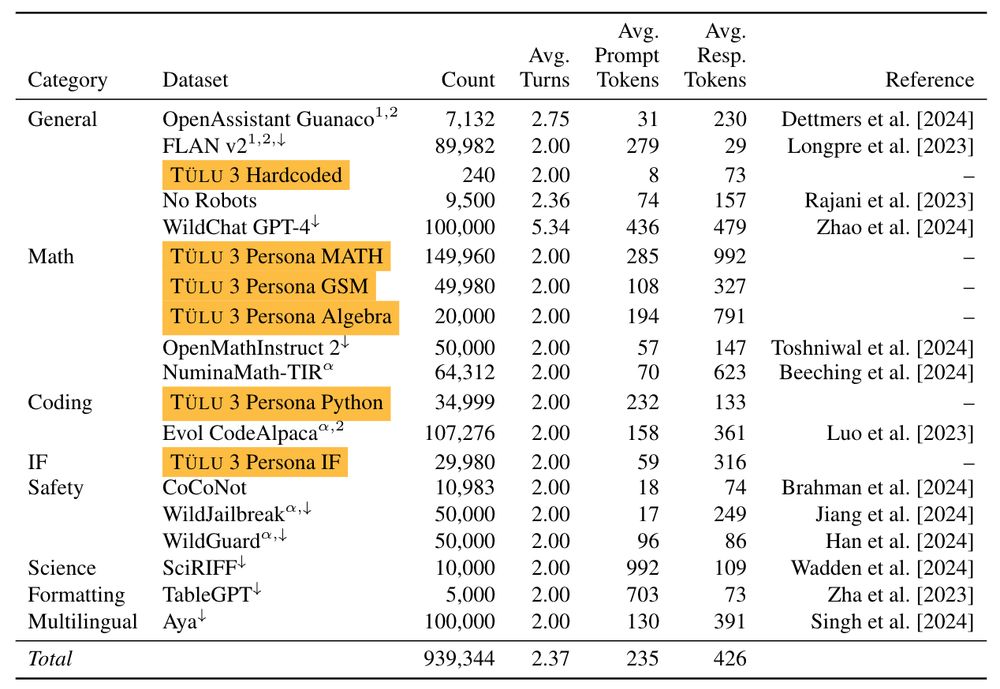

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

Thread.
