Current quest: never leave Emacs.

(Personal account. Opinions not even my own.)

SCENARIO 1: The viewpoint is absurd.

SCENARIO 2: You don't know what you're talking about.

Most of us will be in Scenario 2 more often than we'd like to admit.

As an alternative, Gemini suggested roleplaying as a specific character. So I said "embody Begbie from Trainspotting".

The funniest "thinking" step:

I am happy they rejected this compact, at the very least, though I would have liked a more forceful statement.

Our culture of “now-ism” risks pushing climate, economies and societies past their limits

The latest #ComplexityThoughts:

👉 manlius.substack.com/p/how-modern...

🎧 on Spotify and Apple

#ComplexSystems #Resilience

@ricardsole.bsky.social

![Output from Gemini 2.5 Pro showing its "thinking" arriving at the correct answer when solving for x in [5.9 = x + 5.11], where x = 0.79, but still reporting an incorrect answer: x = -0.21.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:yc6rv5mhfnery26hn3uo5fl3/bafkreiafz56j3lvddmfxb5ayguc7lksrmqqv3bknxkqeyf67wbpemc3ida@jpeg)
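The arithmetic in the screenshot is easy to check. Here is a minimal sketch in Python. It uses the standard `decimal` module so that 5.9 and 5.11 are stored exactly, not as binary floats. The variable names are illustrative only.

```python
from decimal import Decimal

# Solve 5.9 = x + 5.11 for x by subtracting 5.11 from both sides.
# Decimal keeps the base-10 values exact, avoiding float rounding noise.
lhs = Decimal("5.9")
addend = Decimal("5.11")
x = lhs - addend

print(x)  # 0.79

# Substituting back recovers the left-hand side.
assert x + addend == lhs
# The answer reported in the screenshot does not satisfy the equation.
assert Decimal("-0.21") + addend != lhs
```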

Make up a story about antisemitism, plop it into an anonymous student evaluation, and watch as your prof's life is upturned faster than you can spell "fascism."

Read it for yourself here:

www.brown.edu/sites/defaul...

This misalignment isn't just theoretical: it’s showing up in fabricated data, hallucinated praise, even red-teamed blackmail.

www.science.org/doi/10.1126/...

Something tells me this cannot be Lindy.

garymarcus.substack.com/p/are-llms-s...

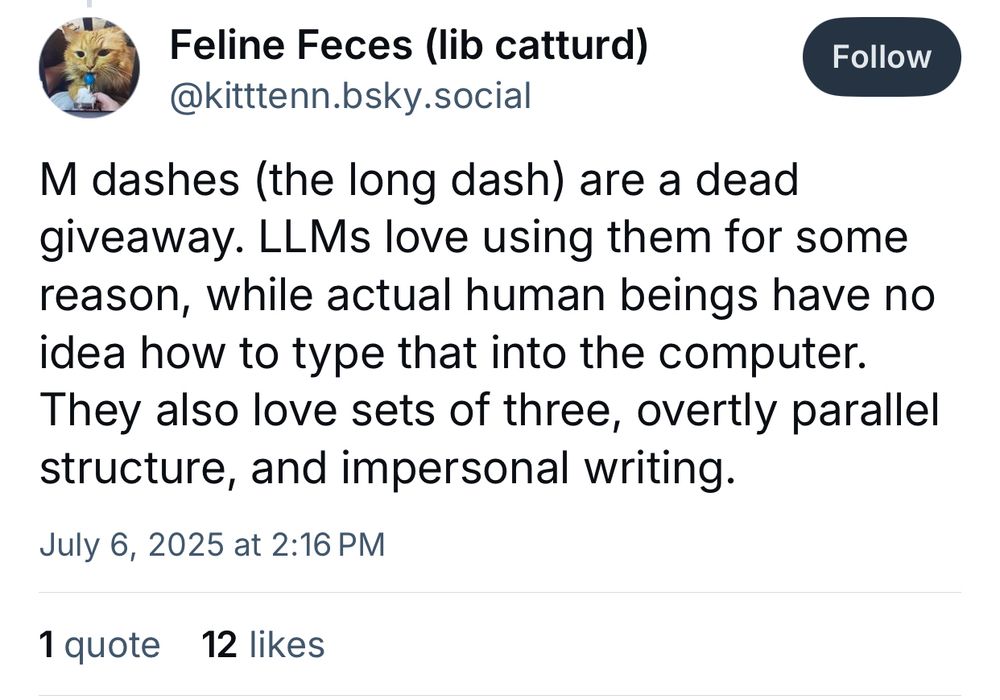

Plenty of actual human beings know how to type em dashes! We’re called “writers” and “editors” — maybe you’ve heard of us?