Research interests: Complexity Sciences, Matrix Decomposition, Clustering, Manifold Learning, Networks, Synthetic (numerical) data, Portfolio optimization. 🇨🇮🇿🇦

www.nature.com/articles/s41...

📆 Nov 25, 2025

arxiv.org/abs/2511.17247

Grok is sloppy about it.

Other companies are subtle about it.

The only difference is competence, not intent.

My guess is that this will get worse as AI tech improves. For instance, fake videos of minorities committing crimes.

Gradient descent and backpropagation have a lot of problems; alignment becomes a nightmare. Evolutionary algorithms fix this, but they don’t scale.

A recent paper, EGGROLL, now makes this computationally feasible.

www.alphaxiv.org/abs/2511.16652
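For readers unfamiliar with the contrast, here is a minimal sketch of vanilla evolution strategies (ES), in the style popularized by Salimans et al. (2017), on an assumed toy objective. Parameters are updated purely from forward evaluations of randomly perturbed copies; no backpropagation is involved. This is not EGGROLL's method: the scaling tricks that paper contributes are not reproduced here, and the function name `evolution_strategies` and all hyperparameter values are hypothetical choices for illustration.

```python
import random


def evolution_strategies(f, theta, sigma=0.1, lr=0.05, pop_size=50, steps=300, seed=0):
    """Vanilla ES sketch: maximize f using only function evaluations.

    Each step samples Gaussian perturbations eps, evaluates f at
    theta + sigma*eps and theta - sigma*eps (antithetic pairs), and moves
    theta along the fitness-weighted average of the perturbations.
    Hyperparameter defaults are illustrative, not tuned.
    """
    rng = random.Random(seed)
    theta = list(theta)
    dim = len(theta)
    for _ in range(steps):
        grad_est = [0.0] * dim
        for _ in range(pop_size):
            eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            # Forward passes only: score the mirrored perturbed parameter vectors
            plus = f([t + sigma * e for t, e in zip(theta, eps)])
            minus = f([t - sigma * e for t, e in zip(theta, eps)])
            for i in range(dim):
                grad_est[i] += (plus - minus) * eps[i]
        # Monte Carlo estimate of the gradient of the Gaussian-smoothed objective
        scale = 1.0 / (2.0 * pop_size * sigma)
        theta = [t + lr * scale * g for t, g in zip(theta, grad_est)]
    return theta


if __name__ == "__main__":
    # Toy objective (assumed for illustration): maximum at x = (3, 3)
    best = evolution_strategies(lambda x: -sum((xi - 3.0) ** 2 for xi in x), [0.0, 0.0])
    print(best)
```

The antithetic pairs (evaluating both `+eps` and `-eps`) are a standard variance-reduction choice; every population member can be evaluated independently, which is why ES parallelizes well even though its per-step cost grows with population size.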

Sinong Geng, houssam nassif, Zhaobin Kuang, Anders Max Reppen, K. Ronnie Sircar

Action editor: Reza Babanezhad Harikandeh

https://openreview.net/forum?id=KLOJUGusVE

#portfolio #finance #financial

This post is a good example of that.

Once you accept that the origin is special, then…

Call for abstracts: tinyurl.com/3tbj2v83

Call for satellites: tinyurl.com/42sru6kz

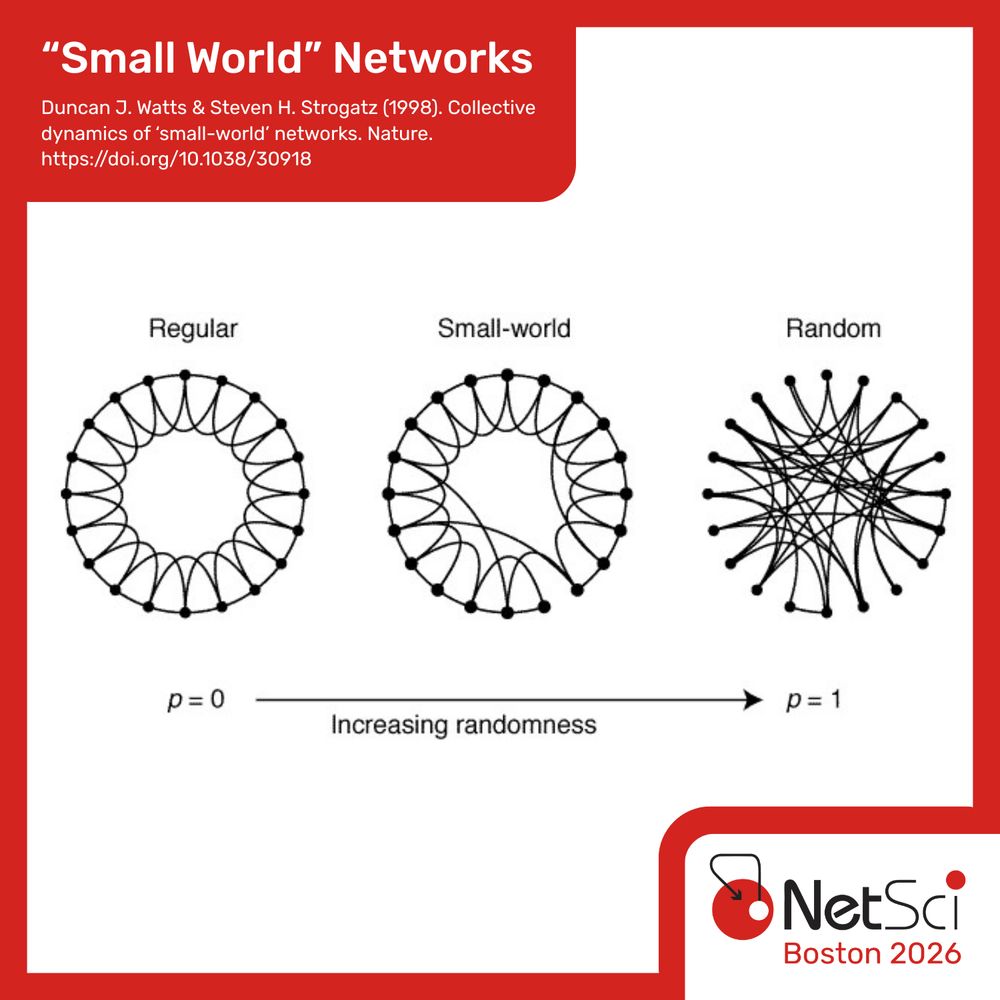

Secure your spot at the flagship conference of the Network Science Society and take advantage of the early-bird registration special: www.netsci2026.com/registration

📅 June 1–5, 2026 | Hyatt Regency, Cambridge, MA

Join us as we celebrate 20 years of NetSci!

𝙎𝙩𝙖𝙮 𝙩𝙪𝙣𝙚𝙙, 𝙮𝙤𝙪 𝙢𝙖𝙮 𝙨𝙚𝙚 𝙢𝙤𝙧𝙚 𝙨𝙢𝙖𝙡𝙡-𝙬𝙤𝙧𝙡𝙙 𝙣𝙚𝙩𝙬𝙤𝙧𝙠𝙨 𝙞𝙣 𝙩𝙝𝙚 𝙉𝙚𝙩𝙎𝙘𝙞 𝙥𝙧𝙤𝙜𝙧𝙖𝙢 𝙨𝙤𝙤𝙣…

📍ZZ2/PSTR368.03